JuiceFS vs. Lustre

Lustre is a parallel distributed file system designed for HPC environments. Initially developed under U.S. government funding by national laboratories to support large-scale scientific and engineering computations, it's now maintained primarily by DataDirect Networks (DDN). Lustre is widely adopted in supercomputing centers, research institutions, and enterprise HPC clusters.

JuiceFS is a cloud-native distributed file system that uses object storage to store data. The Community Edition supports integration with multiple metadata services and caters to diverse use cases. Its Enterprise Edition is specifically optimized for high-performance scenarios, with extensive applications in large-scale AI workloads including generative AI, autonomous driving, quantitative finance, and biotechnology.

This document provides a comprehensive comparison between Lustre and JuiceFS in terms of architecture, file distribution, and features.

Architecture comparison

Lustre

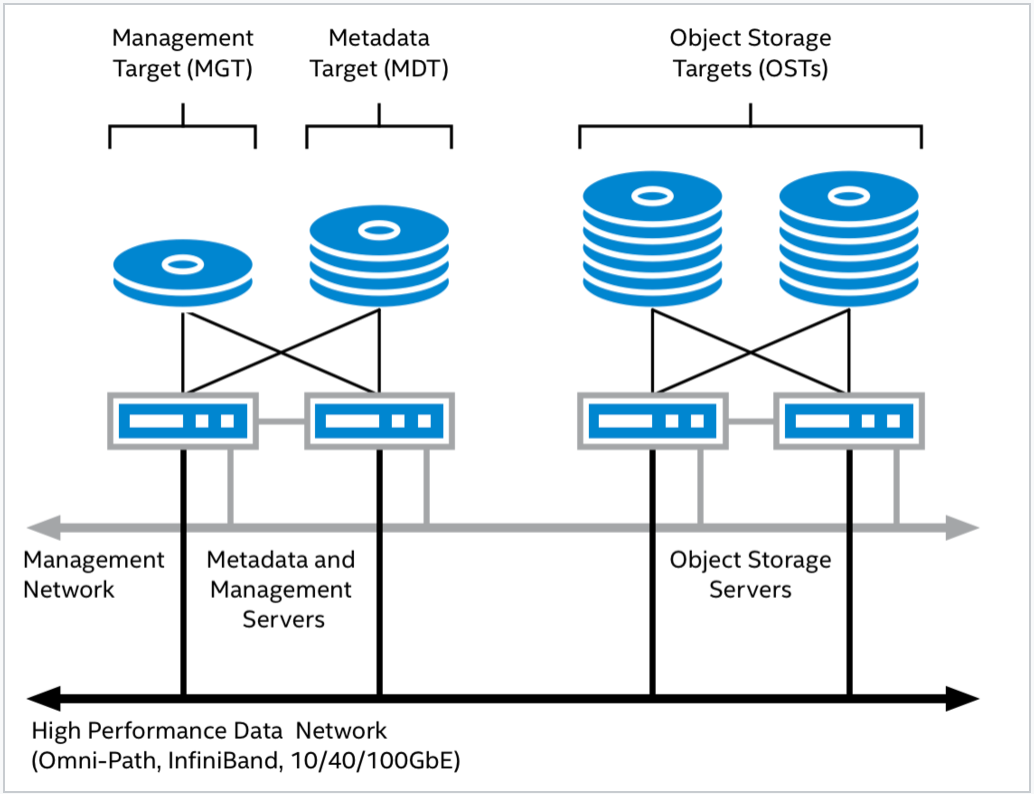

Lustre employs a traditional client-server architecture with the following core components:

- Metadata Servers (MDS): Handle namespace operations, such as file creation, deletion, and permission checks. Starting with version 2.4, Lustre introduced Distributed Namespace (DNE) to enable horizontal scaling by distributing different directories across multiple MDS within a single file system.

- Object Storage Servers (OSS): Manage actual data reads and writes, delivering high-performance large-scale read and write operations.

- Management Server (MGS): Acts as a global configuration registry, storing and distributing Lustre file system configuration information while remaining functionally independent of any specific Lustre instance.

- Clients: Provides applications with access to the Lustre file system through a standard POSIX file operations interface.

All components are interconnected via LNet, Lustre's dedicated networking protocol, forming a unified and high-performance file system architecture.

JuiceFS

JuiceFS uses a modular architecture that comprises three core components:

- Metadata engine: Stores file system metadata, including standard file system metadata and file data indexes. The Community Edition supports various databases including Redis, TiKV, MySQL, PostgreSQL, and FoundationDB. The Enterprise Edition uses a self-developed high-performance metadata service.

- Data storage: Primarily utilizes object storage services, which can be a public cloud object storage or an on-premises deployed object storage service. Supports over 30 types of object storage including AWS S3, Azure Blob, Google Cloud Storage, MinIO, and Ceph RADOS.

- Clients: Provides multiple access protocols, such as POSIX (FUSE), Hadoop SDK, CSI Driver, S3 Gateway, and Python SDK.

Architectural differences

Client implementation

Lustre employs a C-language, kernel-space client architecture, while JuiceFS adopts a Go-based, user-space approach through file system in Userspace (FUSE). Because the Lustre client runs in kernel space, there is no need to perform context switching between user mode and kernel mode or additional memory copying when accessing the MDS or OSS. This significantly reduces the performance overhead caused by system calls and has certain advantages in throughput and latency.

However, kernel-mode implementation also brings complexity to operation, maintenance, and debugging. Compared with user-mode development environments and debugging tools, kernel-mode tools have a higher threshold and are not easy for ordinary developers to master. JuiceFS's Go-based user-space implementation is easier to learn, maintain, and develop, with higher development efficiency and maintainability.

Storage module

Lustre requires one or more shared disks to store file data. This design stems from the fact that its early versions did not support file level redundancy (FLR). To achieve high availability (HA), when a node goes offline, its file system must be mounted to a peer node, otherwise the data chunks on the node will be inaccessible. Therefore, the reliability of the data depends on the high availability mechanism of the shared storage itself or the software RAID implementation configured by the user.

JuiceFS uses object storage as a data storage solution, thus enjoying several advantages brought by object storage, such as data reliability and consistency. Users can connect to specific storage systems according to their needs, including both object storage of mainstream cloud vendors and on-premises deployed object storage systems such as MinIO and Ceph RADOS. JuiceFS Community Edition provides local cache to cope with bandwidth requirements in AI scenarios, and the Enterprise Edition uses distributed cache to meet the needs of larger aggregate read bandwidth.

Metadata module

Lustre's MDS high availability relies on the coordinated implementation of software and hardware:

- Hardware level: The disks used by MDS need to be configured with RAID to avoid service unavailability due to single-point disk failure; the disks also need to have sharing capabilities so that when the primary node fails, the backup node can take over the disk resources.

- Software level: Use Pacemaker and Corosync to build a high-availability cluster to ensure that only one MDS instance is active at any time.

JuiceFS Community Edition provides a set of metadata operation interfaces that can access different metadata services, including databases like Redis, TiKV, MySQL, PostgreSQL, and FoundationDB. JuiceFS Enterprise Edition uses self-developed high-performance metadata services, which can balance data and hotspot operations according to load conditions to avoid the problem of metadata service hotspots being concentrated on certain nodes in large-scale training.

File distribution comparison

Lustre file distribution

Normal file layout (NFL)

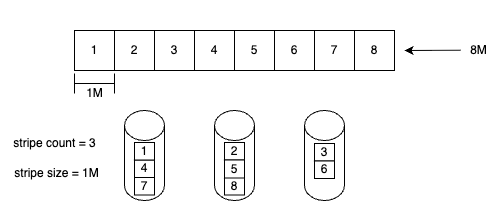

Lustre's initial file distribution mechanism segments files into multiple chunks distributed across object storage targets (OSTs) in a RAID 0-like striping pattern.

Key distribution parameters:

- stripe count: Determines the number of OSTs across which a file is striped. Higher values improve parallel access but increase scheduling and management overhead.

- stripe size: Defines the chunk size written to each OST before switching to the next OST. This determines the granularity of each chunk.

The figure above shows how a file with stripe count = 3 and stripe size = 1 MB is distributed across multiple OSTs. Each data block (stripe) is allocated to different OSTs sequentially via round-robin scheduling.

Key limitations include:

- Configuration parameters are immutable after file creation

- Can lead to ENOSPC (no space left) if any target OST runs out of space

- May result in storage imbalance over time

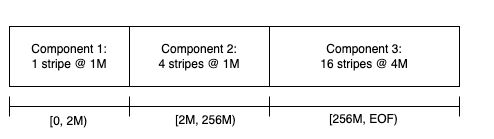

Progressive file layout (PFL)

To address the constraints of NFL, Lustre introduced progressive file layout (PFL), which allows defining different layout policies for different segments of the same file.

PFL provides advantages such as:

- Dynamic adaptation to file growth

- Mitigation of storage imbalance

- Improved space efficiency and flexibility

While PFL provides more adaptive layout strategies, Lustre integrates lazy initialization technology for more efficient dynamic resource scheduling to further address storage imbalance issues.

File level redundancy (FLR)

Lustre introduced file level redundancy to simplify HA architecture and enhance fault tolerance. FLR allows configuring one or more replicas for each file to achieve file-level redundancy protection. During write operations, data is initially written to only one replica, while the others are marked as STALE. The system ensures data consistency through a synchronization process called Resync.

JuiceFS file distribution

JuiceFS manages data blocks according to the rules of chunk, slice, and block. The size of each chunk is fixed at 64 MB, which optimizes data search and positioning. The actual file write operation is performed on slices. Each slice represents a continuous write process within a chunk, with length not exceeding 64 MB. A block (4 MB by default) is the basic unit of physical storage that implements the final storage of data in object storage and disk cache.

Slice in JuiceFS is a structure that is not common in other file systems. It records file write operations and persists them in object storage. Since object storage does not support in-place file modification, JuiceFS allows file content to be updated without rewriting the entire file by introducing the slice structure. When a file is modified, the system creates a new slice and updates the metadata after the slice is uploaded, pointing the file content to the new slice.

All slices of JuiceFS are written once, which reduces the reliance on the consistency of the underlying object storage and greatly simplifies the complexity of the cache system, making data consistency easier to ensure.

Feature comparison

| Features | Lustre | JuiceFS Community | JuiceFS Enterprise |

|---|---|---|---|

| Metadata | Distributed metadata service | Independent database service | Proprietary high-performance distributed metadata engine (horizontally scalable) |

| Metadata redundancy | Requires storage device support | Depends on the database used | Triple replication |

| Data storage | Self-managed | Uses object storage | Uses object storage |

| Data redundancy | Storage device or async replication | Provided by object storage | Provided by object storage |

| Data caching | Client local cache | Client local cache | Proprietary high-performance multi-replica distributed cache |

| Data encryption | Supported | Supported | Supported |

| Data compression | Supported | Supported | Supported |

| Quota management | Supported | Supported | Supported |

| Network protocol | Multiple protocols supported | TCP | TCP |

| Snapshots | File system-level snapshots | File-level snapshots | File-level snapshots |

| POSIX ACL | Supported | Supported | Supported |

| POSIX compliance | Compatible | Fully compatible | Fully compatible |

| CSI Driver | Unofficially supported | Supported | Supported |

| Client access | POSIX | POSIX (FUSE), Java SDK, S3 Gateway, Python SDK | POSIX (FUSE), Java SDK, S3 Gateway, Python SDK |

| Multi-cloud mirroring | Not supported | Not supported | Supported |

| Cross-cloud/region replication | Not supported | Not supported | Supported |

| Primary maintainer | DDN | Juicedata | Juicedata |

| Development language | C | Go | Go |

| License | GPL 2.0 | Apache License 2.0 | Commercial software |

Summary

Lustre is a high-performance parallel distributed file system where clients run in kernel space, interacting directly with the MDS and OSS. This architecture eliminates context switching between user and kernel space, enabling exceptional performance in high-bandwidth I/O scenarios when combined with high-performance storage devices.

However, running clients in kernel space increases operational complexity, requiring administrators to possess deep expertise in kernel debugging and underlying system troubleshooting. Additionally, Lustre's fixed-capacity storage approach and complex file distribution design demand meticulous planning and configuration for optimal resource utilization.

JuiceFS is a cloud-native, user-space distributed file system that tightly integrates with object storage and natively supports Kubernetes CSI, simplifying deployment and management in cloud environments. Users can achieve elastic scaling and highly available data services in containerized environments without needing to manage underlying storage hardware or complex scheduling mechanisms. For performance optimization, JuiceFS Enterprise Edition employs distributed caching to significantly reduce object storage access latency and improve file operation responsiveness.

From a cost perspective, Lustre requires high-performance dedicated storage hardware, resulting in substantial upfront investment and long-term maintenance expenses. In contrast, object storage offers greater cost efficiency, inherent scalability, and pay-as-you-go flexibility.

Both systems have their strengths: Lustre excels in traditional HPC environments requiring maximum performance, while JuiceFS provides better flexibility, easier management, and cost efficiency for cloud-native and AI workloads.