JuiceFS v1.0 Beta3 continues to enhance the metadata engine. It adds etcd support, which provides better availability and reliability than Redis, and introduces support for Redis Cluster and Amazon MemoryDB for Redis. JuiceFS now supports the following metadata engines:

- Redis: supports standalone, sentinel and cluster modes, and suits high-performance workloads with up to 100 million files. Because Redis persists (AOF) and replicates data asynchronously, there is a small chance of data loss; Amazon MemoryDB for Redis provides higher reliability since it works synchronously.

- Relational Database: includes MySQL, MariaDB and PostgreSQL, which suit scenarios where high reliability matters more than high performance.

- TiKV: designed for scenarios with a massive number of files (more than 100 million) that require both high reliability and high performance; it also demands stronger maintenance capabilities.

- etcd: designed for scenarios requiring high availability and high reliability with no more than 2 million files.

- Embedded Database: includes BadgerDB and SQLite, which can be used when concurrent access from multiple machines is not needed.

In addition to the enhancement of the metadata engine, the JuiceFS S3 gateway also provides advanced features such as multi-tenancy and permission settings, and supports non-UTF-8 encoded file names.

In total, 22 community contributors made more than 240 commits. Thanks to every contributor for your hard work, and we welcome readers of this article to join the JuiceFS community.

Now let’s see the detailed changes that JuiceFS v1.0 Beta3 brings.

Metadata engine support for etcd

etcd is a reliable distributed KV store that is widely used in Kubernetes. Data modifications in etcd are written to disk synchronously to avoid data loss. With the Raft consensus algorithm, etcd replicates metadata and fails over automatically to achieve high availability. Compared with the asynchronous persistence and replication provided by Redis, it offers better data safety and availability.

However, the data capacity of etcd is limited. According to actual tests, etcd is a good choice when storing fewer than 2 million files.

Similar to other metadata engines, use etcd:// as the data source name scheme. For example:

# Create file system

$ juicefs format etcd://localhost:2379/myjfs jfs-etcd

# Mount file system

$ juicefs mount -d etcd://localhost:2379/myjfs /mnt/jfs

For the performance of etcd, please refer to the "Metadata Engines Benchmark" document.

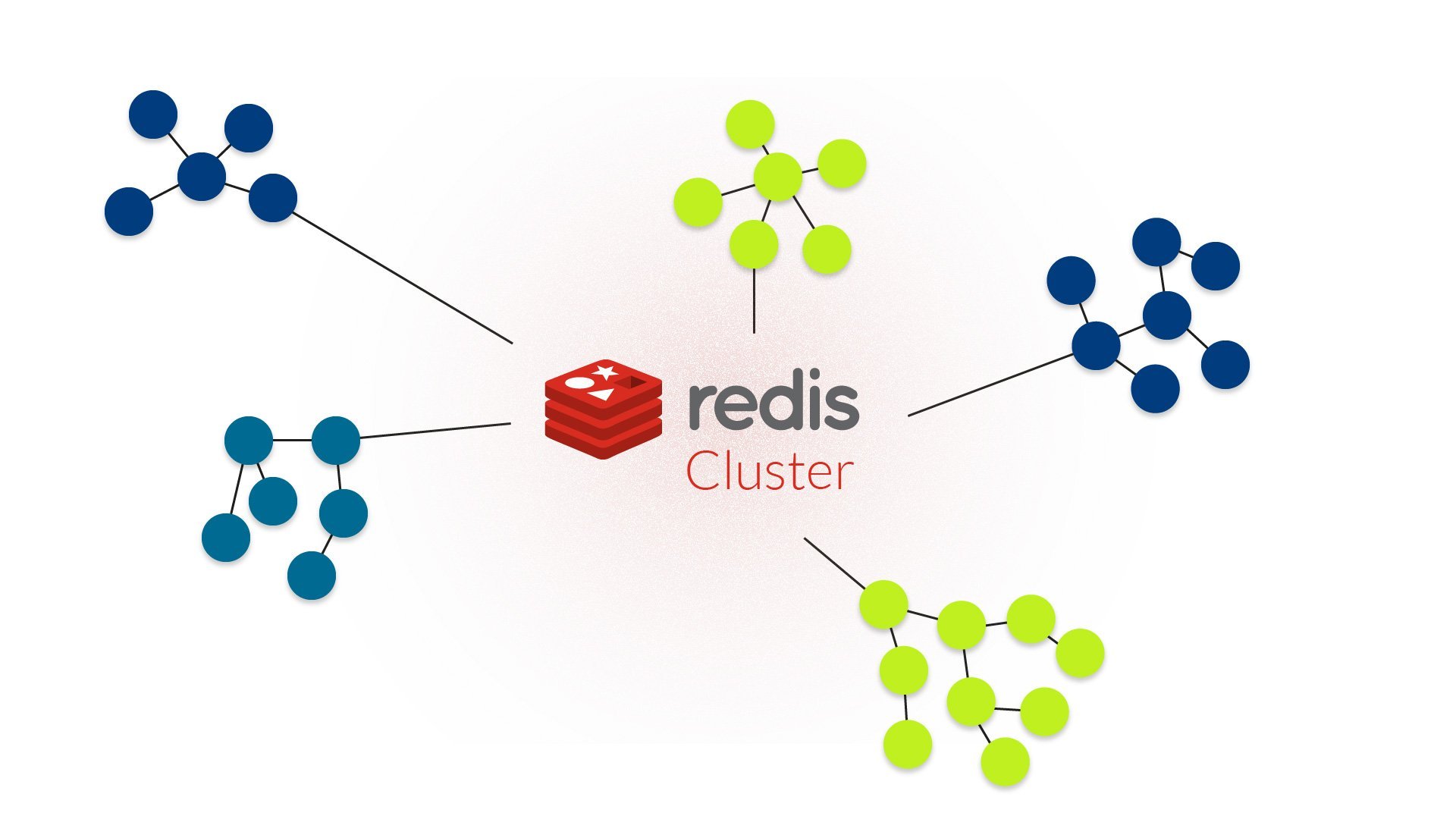

Redis Cluster and Amazon MemoryDB for Redis support

The strong consistency that JuiceFS provides relies on database transactions. Redis Cluster, however, splits data across shards and does not support cross-shard transactions, which previously made it unusable as a JuiceFS metadata engine.

JuiceFS v1.0 Beta3 uses a fixed prefix to place all data in a single Redis shard, so that Redis transactions keep working. This approach gives up the sharding ability of Redis Cluster, but makes data replication and election easier to achieve (similar to sentinel mode). Moreover, the prefix method is quite similar to using multiple databases in standalone mode: it provides nearly unlimited extensibility and suits scenarios that require many volumes, each with a small number of files.
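To see why a fixed prefix keeps every key in one shard, recall that Redis Cluster maps each key to one of 16384 slots with CRC16, and that when a key contains a non-empty {...} hash tag, only the tag is hashed. The sketch below reimplements that slot calculation in POSIX shell purely for illustration. The prefix {1} and the key names are made up for the demonstration and are not claimed to be the exact keys JuiceFS writes.

```shell
# CRC16/XMODEM (polynomial 0x1021, initial value 0), as used by Redis Cluster.
crc16() {
  crc=0
  # od prints each byte of the input as an unsigned decimal number.
  for byte in $(printf '%s' "$1" | od -An -v -tu1); do
    crc=$(( crc ^ (byte << 8) ))
    bit=0
    while [ "$bit" -lt 8 ]; do
      if [ $(( crc & 0x8000 )) -ne 0 ]; then
        crc=$(( ((crc << 1) ^ 0x1021) & 0xFFFF ))
      else
        crc=$(( (crc << 1) & 0xFFFF ))
      fi
      bit=$(( bit + 1 ))
    done
  done
  echo "$crc"
}

# Slot of a key: hash only the text between the first '{' and the next '}'
# when that text is non-empty, otherwise hash the whole key.
key_slot() {
  name=$1
  case $name in
    *"{"*"}"*)
      rest=${name#*\{}      # text after the first '{'
      tag=${rest%%\}*}      # text before the first '}' that follows
      if [ -n "$tag" ]; then name=$tag; fi
      ;;
  esac
  echo $(( $(crc16 "$name") % 16384 ))
}

key_slot foo   # prints 12182

# Hypothetical keys that share the fixed prefix {1}: all print the same slot.
for k in '{1}setting' '{1}i42' '{1}c42_0'; do
  echo "$k -> slot $(key_slot "$k")"
done
```

Because every key with the same tag lands in the same slot, multi-key transactions never have to cross shards.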

Amazon MemoryDB for Redis currently provides only cluster mode. Its synchronous data replication guarantees higher reliability (along with higher write latency) than ElastiCache or self-hosted Redis, so it suits scenarios that require high reliability and have far more reads than writes. Since all metadata is stored in the same shard, we recommend deploying the metadata engine with one shard and one replica (similar to master-replica mode), and expanding capacity later by switching to nodes with more memory.

Delayed slice cleanup

If too many data slices accumulate when reading or writing files, JuiceFS automatically triggers slice compaction, which merges the slices into a larger one and purges the old slices.

However, old metadata may come back into use when migrating metadata via the dump/load commands or recovering from a metadata backup. If the old slices have already been removed by then, reads will fail.

Moreover, if Redis loses a small part of its metadata, files that still reference the removed slices may be broken.

To solve the problems above, v1.0 Beta3 introduces the delayed slice cleanup feature. For file systems that have trash enabled, compacted slices are not deleted until they expire in the trash. You can also use the gc command to clean them up manually.
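The delay can be pictured as a simple time check. The following is a hypothetical sketch in POSIX shell, assuming the retention period is counted in days from the moment a slice is compacted. The function name can_purge and its arguments are invented for illustration and do not correspond to JuiceFS internals.

```shell
# can_purge COMPACTED_AT TRASH_DAYS NOW   (timestamps in seconds)
# Succeeds only once the compacted slice has outlived the trash retention period.
can_purge() {
  compacted_at=$1 trash_days=$2 now=$3
  [ "$now" -ge $(( compacted_at + trash_days * 86400 )) ]
}

# A slice compacted at t=1000 with a 1-day retention period, checked at t=50000.
if can_purge 1000 1 50000; then
  echo "old slice can be deleted"
else
  echo "old slice is kept for now"
fi
```

Until the check succeeds, an older metadata snapshot that still references the slice can be restored without read failures.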

Enhanced sync command

JuiceFS v1.0 Beta3 further improves the sync command, making it behave as much like the well-known tool rsync as possible to lower the learning cost.

The file filtering options --include and --exclude now accept multiple rules with wildcards, matched in the order they appear on the command line, which is the same behavior as rsync. This makes it possible to select nearly any set of files. For more details, please refer to the rsync documentation.
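The order dependence is the part that most often surprises people, so here is a minimal sketch of first-match-wins filtering in POSIX shell. It is an illustration, not the code inside juicefs sync: the helper decide is hypothetical, and shell case patterns only approximate rsync wildcards (for example, * here also matches /). As in rsync, a file that matches no rule is included.

```shell
# decide FILE [include|exclude PATTERN]...
# Walks the rules in the order given; the first pattern that matches decides.
decide() {
  file=$1
  shift
  while [ "$#" -ge 2 ]; do
    case $file in
      $2) echo "$1"; return 0 ;;   # $2 is left unquoted so it acts as a pattern
    esac
    shift 2
  done
  echo include                     # no rule matched
}

# Same two rules, different order, different result for the same file.
decide logs/app.log include '*.log' exclude 'logs/*'
decide logs/app.log exclude 'logs/*' include '*.log'
decide README.md exclude 'logs/*'
```

With juicefs sync, the two rule sets would be written as --include '*.log' --exclude 'logs/*' and --exclude 'logs/*' --include '*.log', and they select different files.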

By default, the sync command copies the target file of a symlink. A new option --links is added to copy only the symlink itself.

Additionally, there is a new --limit option to limit the number of files.

Upgrade of S3 gateway

The S3 gateway of JuiceFS is built on an earlier version of MinIO, with some unnecessary features removed. The latest version of MinIO has switched to the AGPL license, so JuiceFS cannot upgrade to it directly.

A reverse integration strategy is now adopted: JuiceFS v1.0 Beta3 is integrated into the latest MinIO version that supports gateways, and the result is open-sourced as a whole under AGPL. This makes it possible to take advantage of multi-tenancy, permission settings and many other advanced features of the latest version of MinIO. Please refer to the S3 gateway document.

JuiceFS preserves a basic version of the S3 gateway. For the full-featured version, please use the reverse-integrated version. The source code is here.

Other new features

- Supports TLS when using TiKV metadata engine.

- When creating a file system, you can add a hash prefix to the data written to the object storage by specifying the option --hash-prefix. Since many object storages have prefix-based QPS limitations or performance bottlenecks, setting a hash prefix is a workaround to bypass these limitations and obtain better performance. Please note that this option cannot be changed for existing file systems that already have data written.

- When mounting a file system, you can set the option --heartbeat to specify the client heartbeat interval. This is useful in scenarios that care about failover time. Please note that the default heartbeat interval has been changed from 60s to 12s.

- Supports Oracle Object Storage for storing data.

- Supports Java SDK metrics reporting to Graphite or other compatible systems.

- Supports non-UTF-8 encoded file names for SQL metadata engines. For existing file systems, you need to upgrade your client first, and then modify the table schemas in your database.
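To illustrate the idea behind the --hash-prefix option listed above: if object keys are named after sequentially increasing IDs, consecutive objects share long common prefixes, whereas putting a short hash-derived component in front spreads them over many prefixes. The sketch below uses the ID modulo 256 as that component. The key layout and the helper object_key are assumptions made for illustration, not the exact naming scheme JuiceFS uses.

```shell
# object_key ID
# Prepends a two-digit hex bucket (ID modulo 256) to a hypothetical object name.
object_key() {
  printf '%02X/%s\n' $(( $1 % 256 )) "$1"
}

# Consecutive IDs fall under different prefixes; IDs 256 apart share one.
for id in 1 2 3 257; do
  object_key "$id"
done
```

A prefix-based rate limit on the object storage then applies to each of the 256 buckets separately instead of to one hot prefix.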

Other changes

- When creating a new file system, JuiceFS automatically writes a placeholder object with a UUID into the data storage, to prevent that storage from being reused by other file systems.

- The command juicefs dump automatically hides the secret key of the object storage to prevent confidential information from leaking.

- Encrypt the saved credentials of the object storage to lower the security risk. You can change the encryption mode for existing file systems by using the command juicefs config META-URL --encrypt-secret. Please note that old version clients will not be able to mount the file system once credential encryption is enabled.

- Improve the default metadata backup mechanism. You need to set the backup interval explicitly when you work with more than 1 million files.

- For Linux, when mounted by a non-root user, the cache and log directories are set to the home directory of the current user to avoid insufficient permission failures.

- Improve the ability to import huge directories (containing more than 1 million files) into Redis and SQL databases.

- Add primary keys for all relational database tables to improve log replication performance. See here.

Upgrade tips

Please evaluate the following points before upgrading.

Ⅰ. Session format changes

JuiceFS has changed its session format as of v1.0 Beta3. Old clients cannot view new sessions through the juicefs status or juicefs destroy commands, while the latest clients can view sessions in all formats.

Ⅱ. SQL table schema changes, support non UTF-8 encoded file names

To support non UTF-8 encoded file names better, JuiceFS v1.0 Beta3 changes the table schemas of relational databases.

For users using MySQL, MariaDB and PostgreSQL, if you need to use non UTF-8 encoded file names in your existing file systems, please alter your table schemas manually. See release notes for more details.

Fixed bugs

- Fix memory not being released when a metadata backup fails.

- Fix wrong return values of the scan function when using SQL as the metadata engine.

- Fix wrong values of some counters when loading metadata with the command juicefs load.

- Fix wrong scanning results of the object list when multiple buckets are enabled for object storage.

- Fix scanning hanging when using Ceph RADOS as the object storage with too many objects.

For the detailed list of bug fixes, please refer to https://github.com/juicedata/juicefs/releases/tag/v1.0.0-beta3