Note: This article was first published on DZone and featured on its homepage.

The explosion in the popularity of ChatGPT has once again ignited a surge of excitement in the AI world. Over the past five years, AI has advanced rapidly and has found applications in a wide range of industries. As a storage company, we’ve had a front-row seat to this expansion, watching more and more AI startups and established players emerge across fields like autonomous driving, protein structure prediction, and quantitative investment.

AI scenarios have introduced new challenges to the field of data storage. Existing storage solutions are often inadequate to fully meet these demands.

In this article, we’ll deep dive into the storage challenges in AI scenarios, critical storage capabilities, and comparative analysis of Amazon S3, Alluxio, Amazon EFS, Azure, GCP Filestore, Lustre, Amazon FSx for Lustre, GPFS, BeeGFS, and JuiceFS Cloud Service. I hope this post will help you make informed choices in AI and data storage.

Storage challenges for AI

AI scenarios have brought new data patterns:

-

High throughput data access challenges: In AI scenarios, the growing use of GPUs by enterprises has outpaced the I/O capabilities of underlying storage systems. Enterprises require storage solutions that can provide high-throughput data access to fully leverage the computing power of GPUs. For instance, in smart manufacturing, where high-precision cameras capture images for defect detection models, the training dataset may consist of only 10,000 to 20,000 high-resolution images. Each image has several gigabytes in size, resulting in a total dataset size of 10 TB. If the storage system lacks the required throughput, it becomes a bottleneck during GPU training.

-

Managing storage for billions of files: AI scenarios need storage solutions that can handle and provide quick access to datasets with billions of files. For example, in autonomous driving, the training dataset consists of small images, each about several hundred kilobytes in size. A single training set comprises tens of millions of such images, each sized several hundred kilobytes. Each image is treated as an individual file. The total training data amounts to billions or even 10 billion files. This creates a major challenge in effectively managing large numbers of small files.

- Scalable throughput for hot data: In areas like quantitative investing, financial market data is smaller compared to computer vision datasets. However, this data must be shared among many research teams, leading to hotspots where disk throughput is fully used but still cannot satisfy the application's needs. This shows that we need storage solutions that can handle a lot of hot data quickly.

The basic computing environment has also changed a lot.

These days, with cloud computing and Kubernetes getting so popular, more and more AI companies are setting up their data pipelines on Kubernetes-based platforms. Algorithm engineers request resources on the platform, write code in Notebook to debug algorithms, use workflow engines like Argo and Airflow to plan data processing workflows, use Fluid to manage datasets, and use BentoML to deploy models into apps. Cloud-native technologies have become a standard consideration when building storage platforms. As cloud computing matures, AI businesses are increasingly relying on large-scale distributed clusters. With a significant increase in the number of nodes in these clusters, storage systems face new challenges related to handling concurrent access from tens of thousands of Pods within Kubernetes clusters.

IT professionals managing the underlying infrastructure face significant changes brought about by the evolving business scenarios and computing environments. Existing hardware-software coupled storage solutions often suffer from several pain points, such as no elasticity, no distributed high availability, and constraints on cluster scalability. Distributed file systems like GlusterFS, CephFS, and those designed for HPC such as Lustre, BeeGFS, and GPFS, are typically designed for physical machines and bare-metal disks. While they can deploy large capacity clusters, they cannot provide elastic capacity and flexible throughput, especially when dealing with storage demands in the order of tens of billions of files.

Key capabilities for AI data storage

Considering these challenges, we’ll outline essential storage capabilities critical for AI scenarios, helping enterprises make informed decisions when selecting storage products.

POSIX compatibility and data consistency

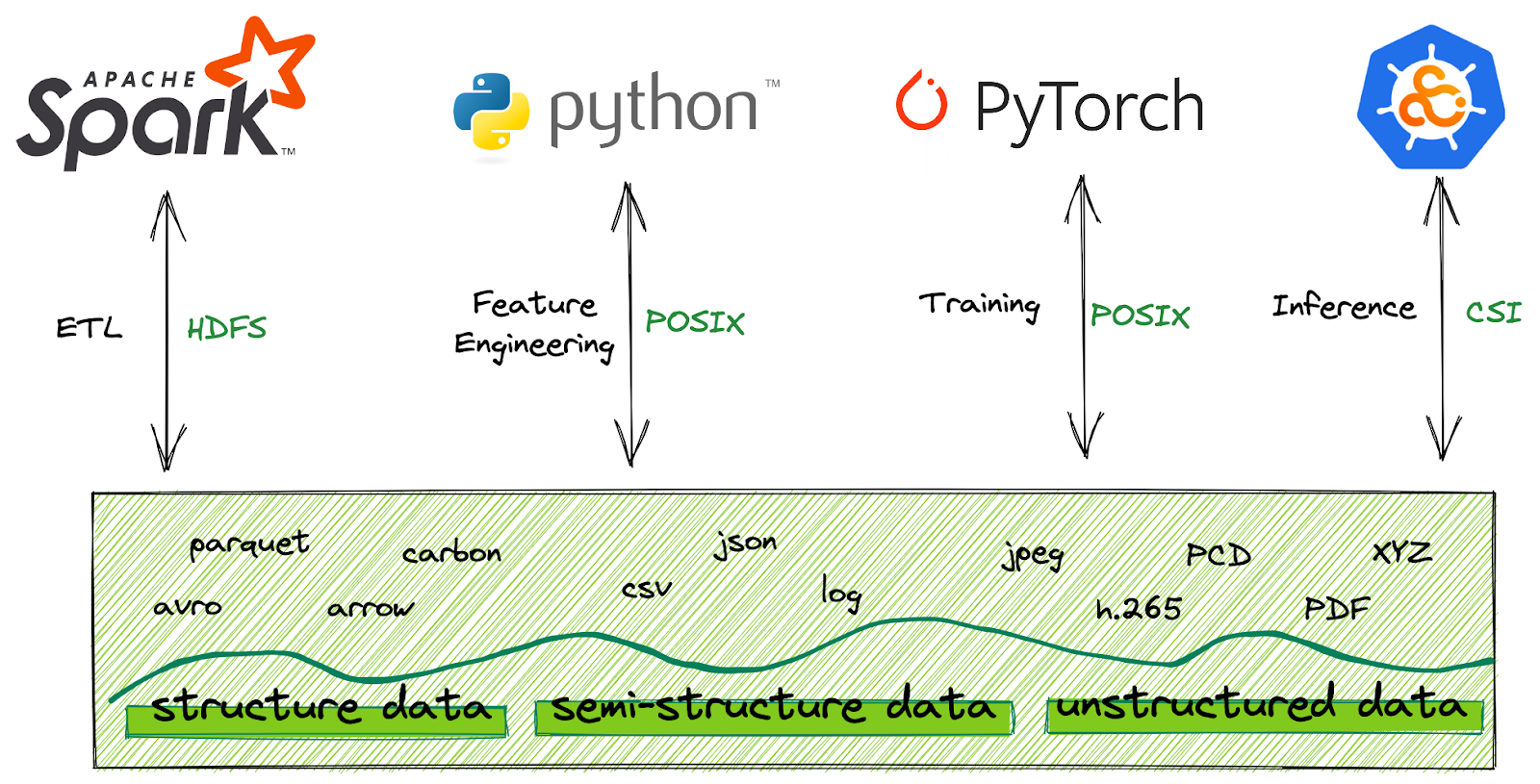

In the AI/ML domain, POSIX is the most common API for data access. Previous-generation distributed file systems, except HDFS, are also POSIX-compatible, but products on the cloud in recent years have not been consistent in their POSIX support:

-

Compatibility: Users should not solely rely on the description "POSIX-compatible product" to assess compatibility. You can use pjdfstest and the Linux Test Project (LTP) framework for testing. We’ve done a POSIX compatibility test of the cloud file system for your reference.

-

Strong data consistency guarantee: This is fundamental to ensuring computational correctness. Storage systems have various consistency implementations, with object storage systems often adopting eventual consistency, while file systems typically adhere to strong consistency. Careful consideration is needed when selecting a storage system.

-

User mode or kernel mode: Early developers favored kernel mode due to its potential for optimized I/O operations. However, in recent years, we’ve witnessed a growing number of developers "escaping" from kernel mode for several reasons:

-

Kernel mode usage ties the file system client to specific kernel versions. GPU and high-performance network card drivers often require compatibility with specific kernel versions. This combination of factors places a significant burden on kernel version selection and maintenance.

-

Exceptions of kernel mode clients can potentially freeze the host operating system. This is highly unfavorable for Kubernetes platforms.

-

The user-mode FUSE library has undergone continuous iterations, resulting in significant performance improvements. It has been well-supported among JuiceFS customers for various business needs, such as autonomous driving perception model training and quantitative investment strategy training. This demonstrates that in AI scenarios, the user-mode FUSE library is no longer a performance bottleneck.

Linear scalability of throughput

Different file systems employ different principles for scaling throughput. Previous-generation distributed storage systems like GlusterFS, CephFS, the HPC-oriented Lustre, BeeGFS, and GPFS primarily use all-flash solutions to build their clusters. In these systems, peak throughput equals the total performance of the disks in the cluster. To increase cluster throughput, users must scale the cluster by adding more disks.

However, when users have imbalanced needs for capacity and throughput, traditional file systems require scaling the entire cluster, leading to capacity wastage.

For example, for a 500 TB capacity cluster using 8 TB hard drives with 2 replicas, 126 drives with a throughput of 150 MB/s each are needed. The theoretical maximum throughput of the cluster is 18 GB/s (126 ×150 = 18 GB/s). If the application demands 60 GB/s throughput, there are two options:

-

Switching to 2 TB HDDs (with 150 MB/s throughput) and requiring 504 drives

-

Switching to 8 TB SATA SSDs (with 500 MB/s throughput) while maintaining 126 drives

The first solution increases the number of drives by four times, necessitating a corresponding increase in the number of cluster nodes. The second solution, upgrading to SSDs from HDDs, also results in a significant cost increase.

As you can see, it’s difficult to balance capacity, performance, and cost. Capacity planning based on these three perspectives becomes a challenge, because we cannot predict the development, changes, and details of the real business.

Therefore, decoupling storage capacity from performance scaling would be a more effective approach for businesses to address these challenges. When we designed JuiceFS, we considered this requirement.

In addition, handling hot data is a common problem in AI scenarios. JuiceFS employs a cache grouping mechanism to automatically distribute hot data to different cache groups. This means that JuiceFS automatically creates multiple copies of hot data during computation to achieve higher disk throughput, and these cache spaces are automatically reclaimed after computation.

Managing massive amounts of files

Efficiently managing a large number of files, such as 10 billion files has three demands on the storage system:

-

Elastic scalability: The real scenario of JuiceFS users is to expand from tens of millions of files to hundreds of millions of files and then to billions of files. This process is not possible by adding a few machines. Storage clusters need to add nodes to achieve horizontal scaling, enabling them to support business growth effectively.

-

Data distribution during horizontal scaling: During system scaling, data distribution rules based on directory name prefixes may lead to uneven data distribution.

-

Scaling complexity: As the number of files increases, the ease of system scaling, stability, and the availability of tools for managing storage clusters become vital considerations. Some systems become more fragile as file numbers reach billions. Ease of management and high stability are crucial for business growth.

Concurrent load capacity and feature support in Kubernetes environments

When we look at the specifications of the storage system, some storage system specifications specify the maximum limit for concurrent access. Users need to conduct stress testing based on their business. When there are more clients, quality of service (QoS) management is required, including traffic control for each client and temporary read/write blocking policies.

We must also note the design and supported features of CSI in Kubernetes. For example, the deployment method of the mounting process, whether it supports ReadWriteMany, subPath mounting, quotas, and hot updates.

Cost analysis

Cost analysis is a multifaceted concept, encompassing hardware and software procurement, often overshadowed by operational and maintenance expenses. As AI businesses scale, data volume grows significantly. Storage systems must exhibit both capacity and throughput scalability, offering ease of adjustment.

In the past, the procurement and scaling of systems like Ceph, Lustre, and BeeGFS in data centers involved lengthy planning cycles. It took months for hardware to arrive, be configured, and become operational. Time costs, notably ignored, were often the most significant expenditures. Storage systems that enable elastic capacity and performance adjustments equate to faster time-to-market.

Another frequently underestimated cost is efficiency. In AI workflows, the data pipeline is extensive, involving multiple interactions with the storage system. Each step, from data collection, clear conversion, labeling, feature extraction, training, backtesting, to production deployment, is affected by the storage system's efficiency.

However, businesses typically utilize only a fraction (often less than 20%) of the entire dataset actively. This subset of hot data demands high performance, while warm or cold data may be infrequently accessed or not accessed at all. It’s difficult to satisfy both requirements in systems like Ceph, Lustre, and BeeGFS.

Consequently, many teams adopt multiple storage systems to cater to diverse needs. A common strategy is to employ an object storage system for archival purposes to achieve large capacity and low costs. However, object storage is not typically known for high performance, and it may handle data ingestion, preprocessing, and cleansing in the data pipeline. While this may not be the most efficient method for data preprocessing, it's often the pragmatic choice due to the sheer volume of data. Engineers then have to wait for a substantial period to transfer the data to the file storage system used for model training.

Therefore, in addition to hardware and software costs of storage systems, total cost considerations should account for time costs invested in cluster operations (including procurement and supply chain management) and time spent managing data across multiple storage systems.

Storage system comparison

Here's a comparative analysis of the storage products mentioned earlier for your reference:

| Category | Product | POSIX compatibility | Elastic capacity | Maximum supported file count | Performance | Cost (USD) |

|---|---|---|---|---|---|---|

| Amazon S3 | Partially compatible through S3FS | Yes | Hundreds of billions | Medium to Low | About $0.02/GB/ month | |

| Alluxio | Partial compatibility | N/A | 1 billion | Depends on cache capacity | N/A | |

| Cloud file storage service | Amazon EFS | NFSv4.1 compatible | Yes | N/A | Depends on the data size. Throughput up to 3 GB/s, maximum 500 MB/s per client | $0.043~0.30/GB/month |

| Azure | SMB & NFS for Premium | Yes | 100 million | Performance scales with data capacity. See details | $0.16/GiB/month | |

| GCP Filestore | NFSv3 compatible | Maxmium 63.9 TB | Up to 67,108,864 files per 1 TiB capacity | Performance scales with data capacity. See details | $0.36/GiB/month | |

| Lustre | Lustre | Compatible | No | N/A | Depends on cluster disk count and performance | N/A |

| Amazon FSx for Lustre | Compatible | Manual scaling, 1,200 GiB increments | N/A | Multiple performance types of 50 MB~200 MB/s per 1 TB capacity | $0.073~0.6/GB/month | |

| GPFS | GPFS | Compatible | No | 10 billion | Depends on cluster disk count and performance | N/A |

| BeeGFS | Compatible | No | Billions | Depends on cluster disk count and performance | N/A | |

| JuiceFS Cloud Service | Compatible | Elastic capacity, no maximum limit | 10 billion | Depends on cache capacity | JuiceFS $0.02/GiB/month + AWS S3 $0.023/GiB/month |

Conclusion

Over the last decade, cloud computing has rapidly evolved. Previous-generation storage systems designed for data centers couldn't harness the advantages brought by the cloud, notably elasticity. Object storage, a newcomer, offers unparalleled scalability, availability, and cost-efficiency. Still, it exhibits limitations in AI scenarios.

File storage, on the other hand, presents invaluable benefits for AI and other computational use cases. Leveraging the cloud and its infrastructure efficiently to design the next-generation file storage system is a new challenge, and this is precisely what JuiceFS has been doing over the past five years.

If you have any questions for this article, feel free to join JuiceFS discussions on GitHub and community on Slack.