About the Author

Lanzhou He is a Senior Development Engineer at Volcengine Edge Computing, in charge of technology selection, development, and SRE for edge storage. Major research areas are distributed storage and distributed caching. Also a fan of the cloud-native open source community.

Volcengine is a subsidiary of ByteDance that provides cloud-based services. Edge Rendering, a product of Volcengine Edge Cloud, helps users schedule millions of rendering frame tasks easily, schedule rendering tasks to nearby nodes, and render multiple tasks in parallel across multiple nodes, greatly improving rendering efficiency.

01 Storage Challenges for Edge Computing

Here is a brief overview of the problems encountered in edge rendering.

- Object storage and file system metadata are not unified, so data uploaded through object storage cannot be manipulated directly through POSIX.

- The storage cannot meet the needs of high-throughput scenarios, especially for reads.

- The S3 API and the POSIX interface are not fully implemented.

To solve the storage problems encountered in edge rendering, the team spent almost half a year conducting storage selection tests. Initially, the team chose an in-house storage component that met our needs relatively well in terms of sustainability and performance.

When it comes to edge computing cases, there are two specific problems.

- First, the in-house components are designed for the IDC and have high requirements for machine specifications, which are difficult to meet.

- Second, the entire company's storage components are packaged together, including object storage, block storage, distributed storage, file storage, etc., while the edge side mainly requires file storage and object storage, so the package would need to be tailored and modified. Besides, it takes effort to achieve stability.

Based on those problems, the Volcengine team came up with a feasible solution after discussion: CephFS + MinIO gateway, with MinIO providing the object storage interface and the final result written to CephFS. The rendering engine mounts CephFS for rendering operations. During testing and validation, the performance of CephFS started to degrade, with occasional lags, when the number of files reached the 10 million level, which did not meet the requirements of the internal users.

After more than three months of testing, we landed on several core requirements for storage in edge rendering:

- Simple operations and maintenance (O&M): storage developers can get started easily with the O&M documentation; future expansion and outage handling need to be simple enough.

- High data reliability: as it provides storage service directly to users, the data written cannot be lost or tampered with.

- The use of a single metadata engine to support object storage and file storage: it reduces complexity by eliminating the need to upload and download multiple times.

- Better performance for reads: reads occur much more often than writes, so read performance matters most.

- Community activity: An active community means faster development, and is more helpful when problems occur.

02 Why JuiceFS

The Volcengine Edge Storage team learned about JuiceFS in September 2021 and had some communications with the Juicedata team. Thereafter, Volcengine decided to try JuiceFS.

They started with PoC testing in the test environment, mainly focusing on feasibility evaluation, the complexity of operation and deployment, application adoption, and whether it meets the application's needs.

Two sets of environments were deployed, one based on a single-node Redis plus Ceph, and the other on a single-instance MySQL plus Ceph.

The overall deployment process was very smooth because Redis, MySQL, and Ceph (deployed via Rook) are relatively mature, the documentation for the deployment is comprehensive, and the JuiceFS client can easily work with these databases and Ceph.

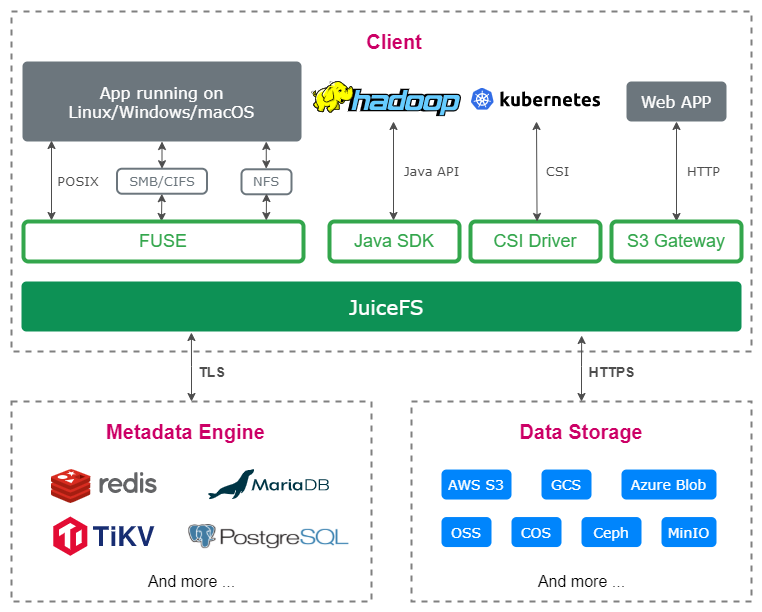

In terms of application adoption, Edge Cloud is developed and deployed in a cloud-native way, and JuiceFS supports the S3 API, is fully compatible with the POSIX protocol, and also supports Kubernetes CSI, which fully meets the application requirements of Edge Cloud.

After comprehensive testing, JuiceFS turned out to fully fit the needs of the application side, and it can be deployed and run in production to serve the application's online workloads.

Application Process Optimization

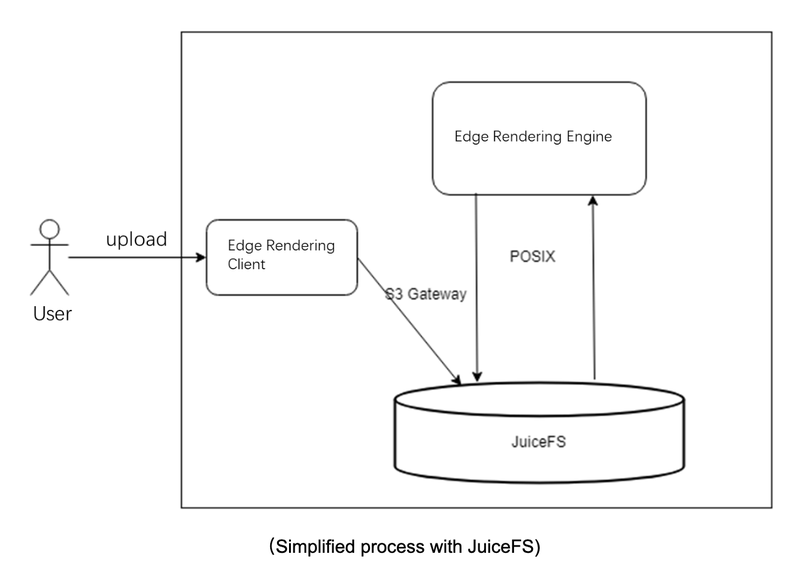

Before using JuiceFS, edge rendering mainly utilized ByteDance's internal object storage service (TOS): users upload data to TOS, the rendering engine downloads the files to local disk, reads the local files, generates rendering results, and uploads the results back to TOS, and finally users download the rendering results from TOS. The overall process involves many network requests and data copies, so any network jitter or high latency in the process affects user experience.

After adopting JuiceFS, the data flow is greatly simplified: users upload through the JuiceFS S3 gateway. Since JuiceFS supports both the S3 and POSIX APIs, it can be mounted directly on the rendering engine, which reads and writes the files via POSIX, and the end user downloads the rendering results directly from the JuiceFS S3 gateway. The overall process is more concise, more efficient, and more stable.

Read file acceleration, large file sequential write acceleration

Thanks to the caching mechanism of the JuiceFS Client, we can cache frequently read files to the rendering engine, which greatly accelerates read speed. Our comparison test shows that using caching can improve throughput by about 3-5 times.
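The effect of a client-side cache on repeated reads can be illustrated with a minimal sketch. This is not the JuiceFS client cache itself: `ReadThroughCache`, `slow_fetch`, and the file names are hypothetical, and a simple LRU policy is assumed purely for illustration.

```python
from collections import OrderedDict


class ReadThroughCache:
    """Tiny LRU read-through cache; a stand-in for a client-side cache."""

    def __init__(self, fetch, capacity):
        self.fetch = fetch          # hypothetical callable: key -> bytes (slow backend read)
        self.capacity = capacity    # maximum number of cached entries
        self.entries = OrderedDict()
        self.hits = 0
        self.misses = 0

    def read(self, key):
        if key in self.entries:
            self.entries.move_to_end(key)     # mark as most recently used
            self.hits += 1
            return self.entries[key]
        self.misses += 1
        data = self.fetch(key)                # only misses reach the backend
        self.entries[key] = data
        if len(self.entries) > self.capacity:
            self.entries.popitem(last=False)  # evict the least recently used entry
        return data


backend_reads = []


def slow_fetch(key):
    # Stand-in for a read from object storage.
    backend_reads.append(key)
    return f"data-for-{key}".encode()


cache = ReadThroughCache(slow_fetch, capacity=2)
for key in ["scene.obj", "texture.png", "scene.obj", "scene.obj"]:
    cache.read(key)

print(cache.hits, cache.misses, backend_reads)
```

Of the four reads, only the two first-time reads reach the backend; the repeated reads of the same file are served locally, which is where the throughput gain in read-heavy rendering workloads comes from.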

Writes benefit as well. In the JuiceFS write model, data is committed to memory first; when a chunk (default 64M) is full, or when the application calls close() or fsync(), the data is uploaded to the object storage, and the file metadata is updated only after the upload succeeds. Large files are therefore written to memory first and persisted afterwards, which can greatly improve the write speed of large files.
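The write path described above can be sketched roughly as follows. This is a simplified model, not the JuiceFS implementation: `BufferedWriter`, `upload`, and `commit_metadata` are hypothetical names, and a 4-byte chunk is used in the demo so the behavior is easy to follow.

```python
CHUNK_SIZE = 64 * 1024 * 1024  # 64 MiB, the default chunk size cited in the text


class BufferedWriter:
    """Buffers writes in memory and flushes on a full chunk, fsync(), or close()."""

    def __init__(self, upload, commit_metadata, chunk_size=CHUNK_SIZE):
        self.upload = upload                    # hypothetical: bytes -> object key
        self.commit_metadata = commit_metadata  # hypothetical: object key -> None
        self.chunk_size = chunk_size
        self.buffer = bytearray()

    def write(self, data):
        self.buffer.extend(data)                # commit to memory first
        while len(self.buffer) >= self.chunk_size:
            self._flush(self.chunk_size)        # a full chunk triggers an upload

    def fsync(self):
        if self.buffer:
            self._flush(len(self.buffer))       # flush whatever is still buffered

    def close(self):
        self.fsync()

    def _flush(self, size):
        payload = bytes(self.buffer[:size])
        key = self.upload(payload)              # 1. upload the data
        self.commit_metadata(key)               # 2. update metadata only after the upload succeeded
        del self.buffer[:size]                  # 3. release the memory


uploaded, committed = [], []


def upload(payload):
    key = f"obj-{len(uploaded)}"
    uploaded.append((key, payload))
    return key


writer = BufferedWriter(upload, committed.append, chunk_size=4)
writer.write(b"abcdef")   # fills one chunk: b"abcd" is uploaded, b"ef" stays buffered
writer.write(b"g")        # buffer holds b"efg", below the chunk size
writer.close()            # close() flushes the remainder

print(uploaded)
print(committed)
```

Because metadata is committed only after the upload returns, a failed upload in this sketch leaves both the buffer and the metadata untouched, which mirrors the ordering the text describes.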

The current scenario of Edge is mainly rendering, where the file system reads much more than writes, and the files written are usually large. JuiceFS matches these scenarios very well.

03 How to use JuiceFS in Edge Storage

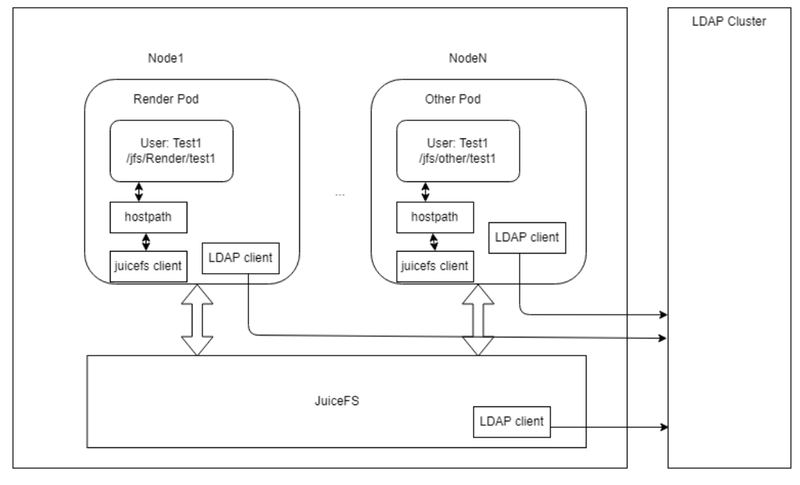

JuiceFS is primarily deployed on Kubernetes, with a DaemonSet responsible for mounting the JuiceFS file system and then exposing it to rendering engine pods through hostPath. If the mount point fails, the DaemonSet automatically restores it.
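The recovery behavior can be pictured as a periodic check that remounts the file system when the mount point disappears. The sketch below only illustrates that idea and is not the team's DaemonSet logic: `ensure_mounted`, the `remount` callback, and the path `/mnt/jfs` are hypothetical, and `os.path.ismount` is used as the default probe.

```python
import os


def ensure_mounted(mount_point, remount, is_mounted=os.path.ismount):
    """Remount when the mount point is gone; return True if a remount was triggered."""
    if is_mounted(mount_point):
        return False
    remount(mount_point)
    return True


# Demo with fakes so the sketch runs without a real mount.
state = {"mounted": False}


def fake_is_mounted(path):
    return state["mounted"]


def fake_remount(path):
    state["mounted"] = True


first = ensure_mounted("/mnt/jfs", fake_remount, fake_is_mounted)
second = ensure_mounted("/mnt/jfs", fake_remount, fake_is_mounted)
print(first, second)
```

The first call finds the mount missing and restores it; the second finds it healthy and does nothing.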

In terms of permission control, Edge Storage authenticates the identity of the JuiceFS cluster nodes through LDAP, and each JuiceFS Client authenticates with the LDAP through the LDAP client.

04 Hands-on experience in JuiceFS storage production environment

Metadata Engines

JuiceFS supports many databases as metadata engines (e.g. MySQL, Redis). MySQL is used in the production environment for the Volcengine Edge Storage as MySQL does a better job in terms of operations, data reliability, and transactions.

MySQL currently uses both single-instance and multi-instance (one master, two slaves) deployment, which is flexible for different Edge scenarios. In environments with low resources, it is possible to use single-instance deployment with a relatively stable MySQL throughput. Both deployment scenarios use high-performance cloud disk (provided by Ceph cluster) as MySQL data storage, ensuring data security.

In a resource-rich scenario, you can set up a multi-instance deployment. The master-slave synchronization of multiple instances is handled by the orchestrator component provided by MySQL Operator. The cluster is considered healthy only if both slave instances are synchronized successfully; a timeout is also set, so if the synchronization does not complete in time, an alarm is triggered. When the disaster recovery solution becomes ready later, we may use the local disk as the MySQL data storage to further improve read and write performance, reducing latency and improving throughput.
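The health rule can be expressed as a small check. This is a sketch under stated assumptions rather than how the orchestrator is implemented: `ReplicaStatus`, `evaluate_cluster`, the instance names, and the 30-second timeout are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ReplicaStatus:
    name: str
    synced: bool
    seconds_waiting: float  # how long the replica has been catching up


def evaluate_cluster(replicas, timeout_seconds):
    """Return (healthy, alarms): healthy only if every replica is synced."""
    healthy = all(r.synced for r in replicas)
    # A replica that is still not synced after the timeout raises an alarm.
    alarms = [
        r.name
        for r in replicas
        if not r.synced and r.seconds_waiting > timeout_seconds
    ]
    return healthy, alarms


replicas = [
    ReplicaStatus("slave-0", synced=True, seconds_waiting=0.0),
    ReplicaStatus("slave-1", synced=False, seconds_waiting=45.0),
]
healthy, alarms = evaluate_cluster(replicas, timeout_seconds=30)
print(healthy, alarms)
```

With one slave lagging beyond the timeout, the cluster is reported unhealthy and that slave appears in the alarm list.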

MySQL single-instance Configuration

Container Resources.

- CPU: 8C

- Memory: 24G

- Disk: 100G (based on Ceph RBD, metadata takes about 30G for millions of files)

- Container image: mysql:5.7

MySQL's `my.cnf` configuration.

ignore-db-dir=lost+found # delete when using MySQL 8.0 and above.

max-connections=4000

innodb-buffer-pool-size=12884901888 # 12G
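As a quick check of the numbers, the buffer pool value is exactly 12 GiB expressed in bytes, which is half of the container memory if the "24G" above is read as 24 GiB (an assumption):

```python
GIB = 1024 ** 3

buffer_pool_bytes = 12 * GIB        # value used for innodb-buffer-pool-size
container_memory_bytes = 24 * GIB   # assumes "24G" means 24 GiB

print(buffer_pool_bytes)
print(buffer_pool_bytes / container_memory_bytes)
```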

Object Storage

We use a Ceph cluster as object storage, which is deployed via Rook, and currently the production environment uses the Octopus release. With Rook, Ceph clusters can be operated and maintained in a cloud-native way, and Ceph components are managed through Kubernetes, which greatly reduces the complexity of Ceph cluster deployment and management.

Ceph server hardware configuration:

- 128-core CPU

- 512GB RAM

- System Disk: 2T * 1 NVMe SSD

- Data disk: 8T * 8 NVMe SSD

Ceph server software configuration:

- OS: Debian 9

- Kernel: modify `/proc/sys/kernel/pid_max`

- Ceph version: Octopus

- Ceph storage backend: BlueStore

- Ceph copies: 3

- Turn off automatic adjustment of Placement Group

The main focus of Edge rendering is low latency and high performance, so in terms of hardware selection, NVMe SSD disks are preferred, and Debian 9 is chosen as the operating system. Triple replication is configured for Ceph because erasure coding might take up too many resources in edge computing environments.
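The space side of that trade-off can be estimated with simple arithmetic. The sketch below takes the eight 8T data disks from the hardware list and compares triple replication with an erasure-coding profile of 4 data plus 2 coding chunks; the 4+2 profile is a hypothetical example and is not taken from the production setup.

```python
def usable_capacity(raw_tb, overhead):
    """Usable capacity given raw capacity and a storage overhead factor."""
    return raw_tb / overhead


raw_tb_per_server = 8 * 8            # eight 8T data disks per server

replication_overhead = 3.0           # three full copies of every object
ec_k, ec_m = 4, 2                    # hypothetical erasure-coding profile (k data + m coding chunks)
ec_overhead = (ec_k + ec_m) / ec_k   # 1.5x raw space per byte stored

replicated_tb = usable_capacity(raw_tb_per_server, replication_overhead)
erasure_coded_tb = usable_capacity(raw_tb_per_server, ec_overhead)

print(round(replicated_tb, 1), round(erasure_coded_tb, 1))
```

Replication yields less usable space per server, but it avoids the extra encoding and reconstruction work that erasure coding adds, which is the resource cost the text refers to.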

JuiceFS Client

The JuiceFS client works with Ceph RADOS (better performance than Ceph RGW), but this feature is not enabled by default in the official binary, so you need to recompile the JuiceFS client. Librados needs to be installed first. It is recommended to match the version of librados to the version of Ceph. Debian 9 does not come with a librados-dev package that matches the version of Ceph Octopus (v15.2.*), so you need to download it manually.

After installing librados-dev, you can start compiling the JuiceFS client. We compile with Go 1.19, whose runtime supports a soft memory limit (for example via the `GOMEMLIMIT` environment variable), which helps prevent OOM in extreme cases where the JuiceFS client takes up too much memory.

make juicefs.ceph

You can create a file system and mount the JuiceFS on the computing node once the compiling is completed. Please refer to the JuiceFS official documentation.

05 Outlook

JuiceFS is a cloud-native distributed storage system that provides a CSI Driver to support cloud-native deployment methods. In terms of O&M, JuiceFS provides a lot of flexibility, and users can choose either cloud or on-premise deployment. JuiceFS is fully compatible with POSIX, so file operations are very convenient. Although JuiceFS can show high latency and low IOPS for random reads and writes of small files because it uses object storage as back-end storage, it has a great advantage in read-only (or read-intensive) scenarios, which fits the application needs of edge rendering very well.

The future collaboration between Volcengine Edge Cloud and JuiceFS will focus on the following aspects.

- More cloud-native: Volcengine currently uses JuiceFS via hostPath, and may later switch to the JuiceFS CSI Driver for elastic scaling scenarios.

- Metadata engine upgrade: Extract metadata service into a gRPC service, providing multi-level caching capabilities for better performance in read-intensive scenarios. The underlying metadata engine might migrate to TiKV for better scaling compared to MySQL.

- New features and bug fixes: for the current scenario, some features will be added and some bugs will be fixed. We expect to contribute these as PRs and give back to the community.