Shopee ClickHouse is a highly available distributed analytical database based on the open source database ClickHouse for secondary development and architectural evolution. This article will focus on Shopee ClickHouse's hot and cold storage architecture and the practices that support the company's business.

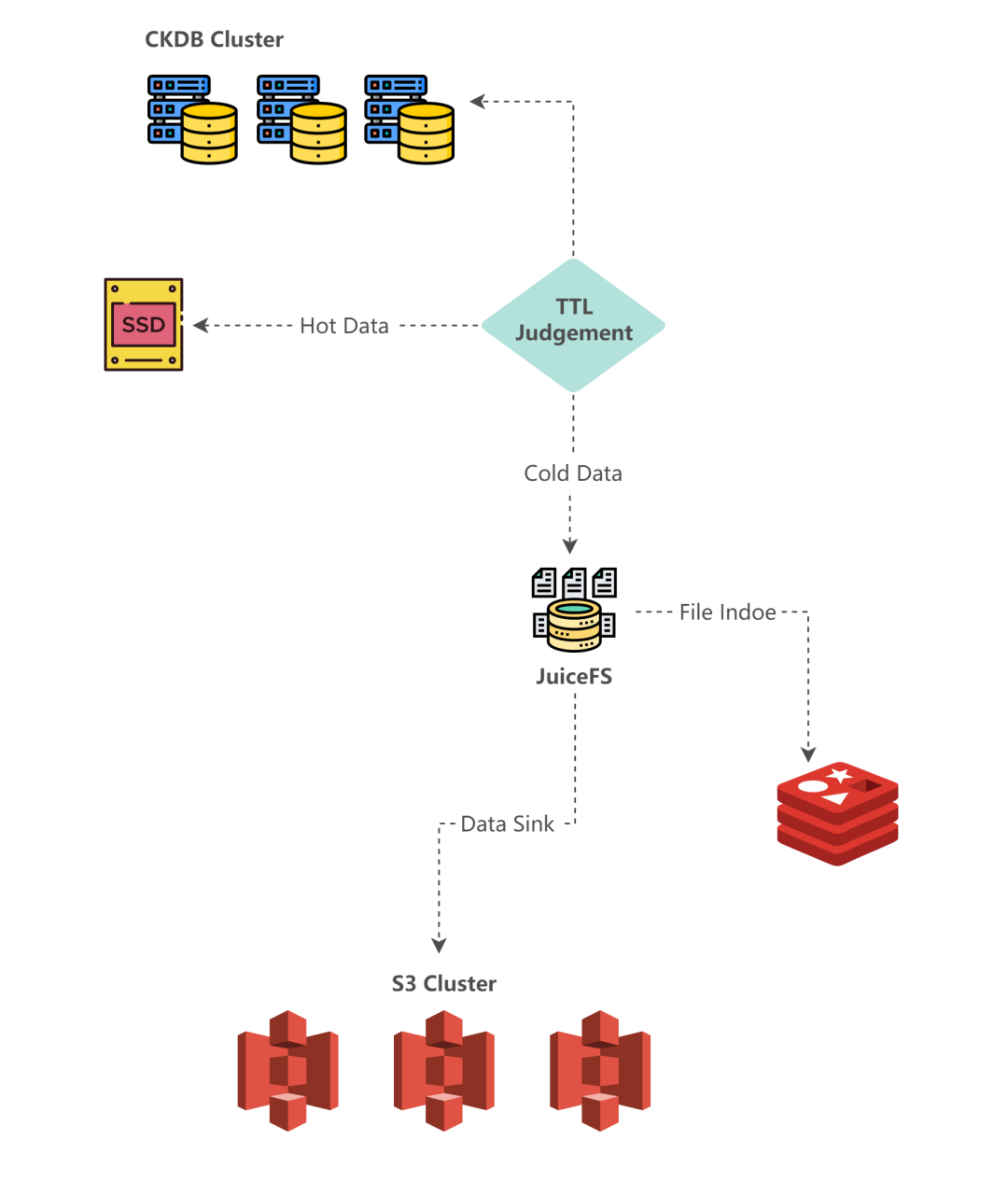

Shopee ClickHouse's hot and cold storage architecture uses JuiceFS clients to mount object storage to local hosts, and by writing ClickHouse storage policies, object storage can be used like multi-volume storage. Because we use the same ClickHouse DB cluster to support multiple teams' businesses, the hot and cold data separation benchmark may be different between different teams or even different businesses of the same team, so the data hot and cold separation policy needs to be done at the table level of ClickHouse.

In order to achieve hot and cold separation at the table level, we modify the storage policy of tables according to the storage policy edited in advance for the stock of business tables that need to do hot and cold separation. For new business tables that require hot/cold separation, we specify a storage policy that supports data on remote storage when building the tables, and then determine whether the data should be on the local or remote side by refining the TTL expressions.

After the hot and cold storage architecture went live, we encountered some problems and challenges, such as juicefs object request error, Redis memory growth exceptions, suspicious broken parts, and so on.This article will address some of these issues and provide solutions with scenarios and source code analysis.

In general, the overall design idea of Shopee ClickHouse hot and cold storage architecture is: local SSD storage for hot data with high frequency query, and remote storage for relatively less frequently used data, thus saving storage costs and supporting more data storage needs.

Shopee ClickHouse cluster general architecture

ClickHouse is an open source OLAP (On-Line Analytic Query) type database that implements a vectorized execution engine with excellent AP query performance. Shopee ClickHouse is an analytic database based on ClickHouse that continues to do secondary development and evolves the product architecture.

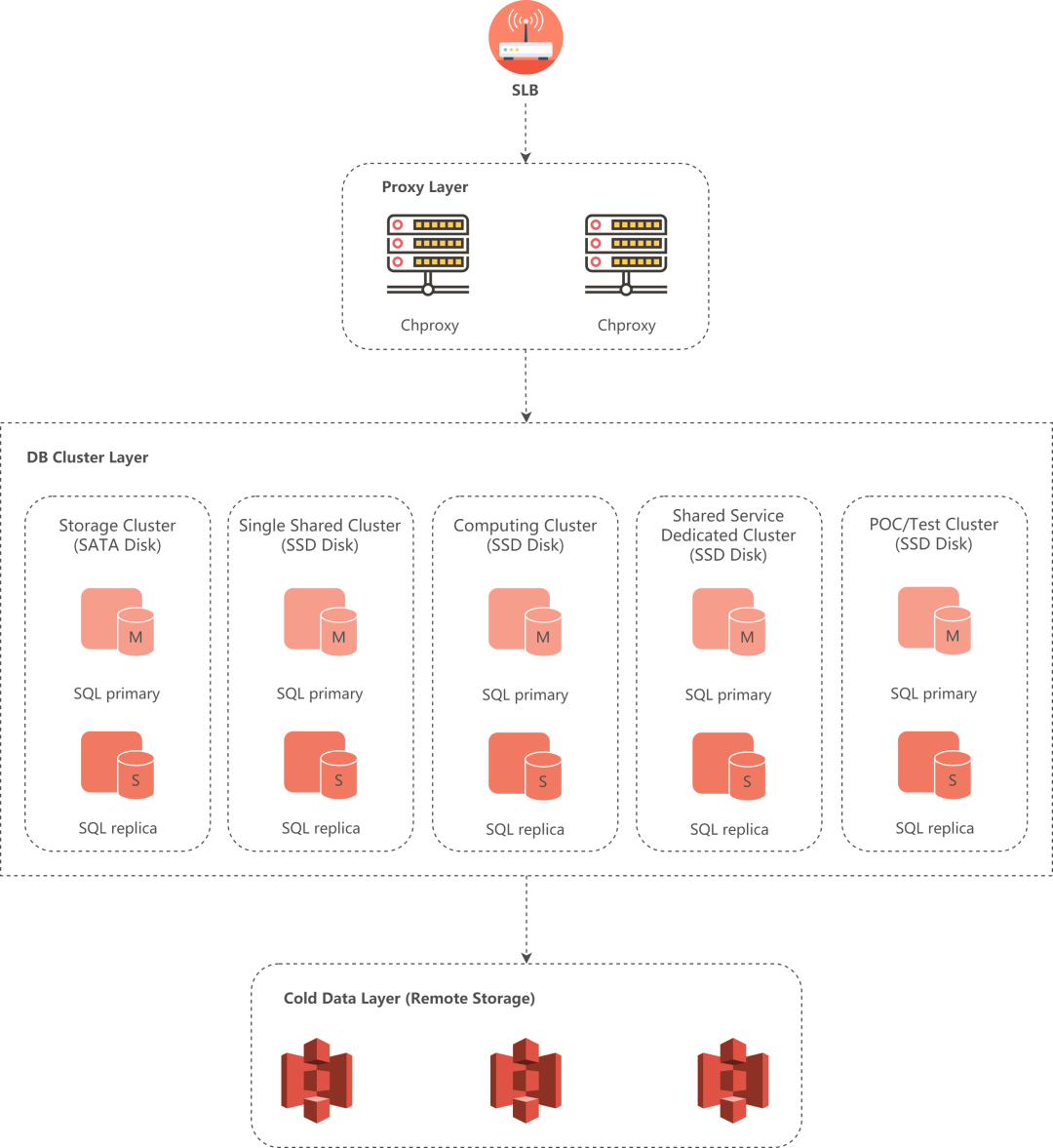

The following diagram illustrates the architecture of the Shopee ClickHouse DB cluster.

From top to bottom are the user request intervention SLB, the Proxy layer, the ClickHouse DB cluster layer, and at the bottom, the remote object storage is S3 provided by the Shopee STO team.

Among them, SLB provides user request routing; Proxy layer provides query routing, requests will be routed to the corresponding cluster based on the cluster name in the user connection string, while providing the ability to partially write balance and query routing; ClickHouse DB cluster layer is a distributed Shopee ClickHouse database consisting of clusters, currently there are computational distributed clusters with SSD disks as hot data storage medium and computational single node clusters, and storage distributed clusters with SATA Disk as storage medium; the remote storage at the bottom is used as cold data storage.

2. Hot and cold storage architecture solution

Users want to store more and longer data and query faster. But usually the more data is stored, the higher the return latency will be for the same query conditions.

In terms of resource utilization, we wanted the data stored on Shopee ClickHouse to be more accessible and available to provide broader support to the business. Therefore, in the early stages we asked the business side to store users' hot data to Shopee ClickHouse.

But this also brings some problems, for example: users sometimes need to query a relatively long time data for analysis, which requires the data not in ClickHouse first imported before doing analysis, and after the analysis to delete this part of the data. Another example: some of the business through the log service to do aggregate analysis and retrieval analysis, but also need a relatively long time log data to help supervise and analyze the daily business.

Based on such needs, we want to maximize the use of resources on the one hand, and on the other hand, we want to support more data storage volume without affecting the query speed of hot data for users, so using hot and cold data separation storage architecture is a good choice.

Typically, the design of a hot and cold separation scheme requires consideration of the following issues.

- How to store cold data?

- How to use cold storage efficiently, consistently and simply?

- How can hot data be sunk to cold storage?

- How to evolve the architecture without affecting the existing user business?

And the selection of cold data storage media generally considers the following key points.

- Cost

- Stability

- Full functionality (data can still be correctly queried during the sinking process, and the data in the database can be correctly written)

- Performance

- Scalability

2.1 Cold Storage Media Selection and JuiceFS

The media that can be used for cold storage are S3, Ozone, HDFS, Hard Disk. Hard Disk is hard to scale and can be excluded first, HDFS, Ozone and S3 are better cold storage media.

Meanwhile, to use cold storage easily and efficiently, we focus on JuiceFS, an open source POSIX file system built on object storage and database, which allows us to access remote object storage more easily and efficiently.

JuiceFS supports almost all public cloud object storage, such as S3, GCS, OSS and so on.JuiceFS supports Redis, MySQL, PostgreSQL and other databases as the engine for storing metadata. We finally chose Redis because Redis runs entirely in memory, which can meet the low latency and high IOPS for metadata reads and writes, supports optimistic transactions, and satisfies the atomicity of metadata operations in the file system [1].

JuiceFS provides an efficient and convenient way to access remote storage by simply mounting it locally using the format and mount commands through the JuiceFS client. This will allow our ClickHouse to read and write remote storage as if it were local storage.

Once we have selected JuiceFS, next we need to choose the right backend storage medium. Since JuiceFS mainly uses object storage as backend storage, for us we need to choose between S3 and Ozone. We designed a benchmark as follows, using ClickHouse TPCH Star Schema Benchmark 1000s (benchmark details can be found in ClickHouse community documentation [2]) as test data to test the Insert performance of S3 and Ozone respectively, and using Star Schema Benchmark select statement for query performance comparison.

The query data is in the following three storage states.

- Part in Ozone/S3 and part in the local SSD disk.

- All on Ozone/S3.

- All on SSD.

The following are the results of our test sampling.

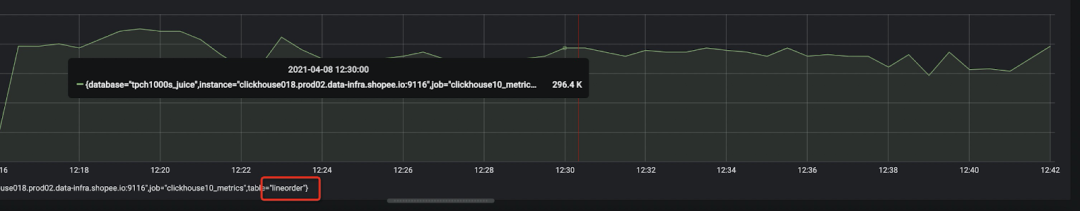

(1) Insert performance sampling results

Insert Lineorder table data to Ozone:

Insert Lineorder table data to S3:

As you can see, S3's Insert performance is slightly stronger.

(2) Query Performance Sampling Results

In accordance with ClickHouse Star Schema Benchmark, after the tables Customer, Lineorder, Part, Supplier have been imported, a flattened wide table needs to be created again based on the data from the four tables.

CREATE TABLE lineorder_flat

ENGINE = MergeTree

PARTITION BY toYear(LO_ORDERDATE)

ORDER BY (LO_ORDERDATE, LO_ORDERKEY)

AS

SELECT

l.LO_ORDERKEY AS LO_ORDERKEY,

l.LO_LINENUMBER AS LO_LINENUMBER,

l.LO_CUSTKEY AS LO_CUSTKEY,

l.LO_PARTKEY AS LO_PARTKEY,

l.LO_SUPPKEY AS LO_SUPPKEY,

l.LO_ORDERDATE AS LO_ORDERDATE,

l.LO_ORDERPRIORITY AS LO_ORDERPRIORITY,

l.LO_SHIPPRIORITY AS LO_SHIPPRIORITY,

l.LO_QUANTITY AS LO_QUANTITY,

l.LO_EXTENDEDPRICE AS LO_EXTENDEDPRICE,

l.LO_ORDTOTALPRICE AS LO_ORDTOTALPRICE,

l.LO_DISCOUNT AS LO_DISCOUNT,

l.LO_REVENUE AS LO_REVENUE,

l.LO_SUPPLYCOST AS LO_SUPPLYCOST,

l.LO_TAX AS LO_TAX,

l.LO_COMMITDATE AS LO_COMMITDATE,

l.LO_SHIPMODE AS LO_SHIPMODE,

c.C_NAME AS C_NAME,

c.C_ADDRESS AS C_ADDRESS,

c.C_CITY AS C_CITY,

c.C_NATION AS C_NATION,

c.C_REGION AS C_REGION,

c.C_PHONE AS C_PHONE,

c.C_MKTSEGMENT AS C_MKTSEGMENT,

s.S_NAME AS S_NAME,

s.S_ADDRESS AS S_ADDRESS,

s.S_CITY AS S_CITY,

s.S_NATION AS S_NATION,

s.S_REGION AS S_REGION,

s.S_PHONE AS S_PHONE,

p.P_NAME AS P_NAME,

p.P_MFGR AS P_MFGR,

p.P_CATEGORY AS P_CATEGORY,

p.P_BRAND AS P_BRAND,

p.P_COLOR AS P_COLOR,

p.P_TYPE AS P_TYPE,

p.P_SIZE AS P_SIZE,

p.P_CONTAINER AS P_CONTAINER

FROM lineorder AS l

INNER JOIN customer AS c ON c.C_CUSTKEY = l.LO_CUSTKEY

INNER JOIN supplier AS s ON s.S_SUPPKEY = l.LO_SUPPKEY

INNER JOIN part AS p ON p.P_PARTKEY = l.LO_PARTKEYThe following SQL statement was executed and the following Error occurred when the data was all on the Ozone.

Code: 246. DB::Exception: Received from localhost:9000. DB::Exception: Bad size of marks file '/mnt/jfs/data/tpch1000s_juice/customer/all_19_24_1/C_CUSTKEY.mrk2': 0, must be: 18480A portion of the Select data is in the Ozone, and a data sink from the SSD disk to the Ozone occurs during this process.

Result: Hang live, unable to query.

When we did this test, we used the community version 1.1.0-SNAPSHOT of Ozone, and the results of this test only show that this version is not suitable for our scenario.

Since Ozone 1.1.0-SNAPSHOT has functional shortcomings in our usage scenario, the subsequent Star Schema Benchmark performance test report focuses on SSD and S3 performance comparisons (detailed Query SQL statements are available from the ClickHouse community documentation).

Query No.Query Latency Data on JuiceFSQuery Latency Data on ⅓ JuiceFs + ⅔ SSDQuery Latency Data on SSDQ1.18.884 s8.966 s1.417 sQ1.20.921 s0.998 s0.313 sQ1.30.551 s0.611 s0.125 sQ2.168.148 s36.273 s5.450 sQ2.254.360 s20.846 s4.557 sQ2.355.329 s22.152 s4.297 sQ3.160.796 s27.585 s7.999 sQ3.267.559 s29.123 s5.928 sQ3.345.917 s20.682 s5.606 sQ3.40.675 s0.202 s0.188 sQ4.1100.644 s41.498 s7.019 sQ4.232.294 s2.952 s2.464 sQ4.333.667 s2.813 s2.357 s

Finally, after comparing all aspects, we choose S3 as the cold storage medium.

Therefore, the Cold and Hot storage separation solution is implemented by JuiceFS+S3, and the implementation process is briefly described below.

2.2 Implementation of hot and cold data storage separation

First, we mount the S3 bucket to the local /mnt/jfs directory by using the JuiceFS client, and then edit the ClickHouse storage policy configuration file . /config.d/storage.xml. Write it in such a way that it does not affect the historical user storage (i.e. retains the previous storage policy). Here, default is our historical storage policy and hcs_ck is the hot/cold separated storage policy.

For details, you can refer to the following figure.

If you have a business that needs to separate hot and cold storage, just write the storage policy as hcs_ck in Statement, and then control the cold data sink policy with a TTL expression.

For example, the table hcs_table_name is a business log data table that needs to separate hot and cold storage, and the following is the table build statement.

CREATE TABLE db_name.hcs_table_name

(

.....

`log_time` DateTime64(3),

`log_level` String,

.....

`create_time` DateTime DEFAULT now()

)

ENGINE = ReplicatedMergeTree('/clickhouse/tables/{layer}-{shard}/db_name.hcs_table_name

', '{replica}')

PARTITION BY toYYYYMMDD(log_time)

ORDER BY (ugi, ip)

TTL toDateTime(log_time) TO VOLUME 'v_ssd',

toDateTime(log_time) + toIntervalDay(7) TO VOLUME 'v_cold',

toDateTime(log_time) + toIntervalDay(14)

SETTINGS index_granularity = 16384,

storage_policy = 'hcs_ck',

parts_to_throw_insert = 1600The TTL expression above shows that the table hcs_table_name specifies that data for the last 7 days is stored on the local SSD disk, data for 8 to 14 days is stored on the remote S3, and data older than 14 days is deleted.

The general flow is shown in the following diagram.

The data parts of the table hcs_table_name (ClickHouse's data storage uses the data part as the basic processing unit) are scheduled by a background task, which is executed by the thread BgMoveProcPool from the back_ground_move_pool (note that y back_ground_pool is different).

std::optional<BackgroundProcessingPool> background_move_pool; /// The thread pool for the background moves performed by the tables.The background task scheduler determines whether the data parts need to be moved (does the data need to be sunk to a remote store) and whether it is possible to move.

The core logic of this task is to first determine the data parts that need to be moved, and then move those data parts to the target stroage.

The interface:

MergeTreePartsMover::selectPartsForMoveGet ttl_entry according to the TTL expression, then select the data parts that need to be moved according to the ttl_move information in the data parts, store the move_entry of the data parts (including the IMergeTreeDataPart pointer and the size of the storage space to be reserved) into the vector.

Afterwards, the interface is called.

MergeTreeData::movePartsThe move process is simply clone the data parts on the SSD disk to the detach directory of the hcs_table_name table in the remote S3 storage, and then move the data parts out of the detach directory, and finally these data parts on the SSD disk will be cleared in the destructor of IMergeTreeDataPart.

So the table is always available during the whole move process. Since it is a clone operation, the data parts moved at the same time are either active on the SSD disk or active on the remote storage.

For the move information of the table data parts, you can also query the following three fields of the system table system.parts.

move_ttl_info.expression;

move_ttl_info.min;

move_ttl_info.max;3. Practice

After the Shopee ClickHouse cold and hot data separation storage architecture went live, we summarized some of the problems we encountered in practice.

3.1 Redis memory growth anomaly

The amount of data stored on S3 did not grow much, but Redis memory continued to grow at a high rate.

The main reason for this is that JuiceFS uses Redis to store the metadata of the data files on S3, so normally, the more data files on S3, the more Redis storage is used. Usually this anomaly is due to the fact that the target table has many small files that are sunk directly without merge, which can easily fill up Redis.

This also introduces another problem: once Redis memory is full, JuiceFS can no longer successfully write data to S3. If you unmount the JuiceFS storage, you will not be able to remount it, and when you mount it again, it will throw Error.

Meta: create session: OOM command not allowed when used memory > 'maxmemory'.To avoid this problem, you should first monitor the status of ClickHouse merge. clickhouse-exporter collects a merge metric clickhouse_merge, which captures the number of merges that are currently triggered (by querying the system.metrics with metric='merge '), and for each merge trigger, multiple data parts of a table will be merged. In our experience, if the average number of merges every three hours is less than 0.5, then it is likely that there is a merge problem on this machine.

There are many possible reasons for merge exceptions, such as HTTPHandler threads or ZooKeeperRecv threads continuously taking up a lot of CPU resources. This is not the focus of this article and will not be expanded here.

We can set an alert rule to alert the ClickHouse development and operations team if the number of merges is less than 0.5 in three hours, avoiding the creation of a large number of small files.

What should I do if there are already a lot of small files sinking into S3?

First of all, to stop the data from continuing to sink, you can find the user business table with a large number of small files sinking in two ways.

The first way: check ClickHouse Error Log, find the table that throws too many parts, and then further determine if the table that throws the error has hot and cold storage.

The second way: Find the table that has active parts that is clearly too many and disk_name that is equal to the alias of the cold storage by querying the system.parts table. After locating the table that is generating a lot of small files, stop the data sink with the ClickHouse system command to avoid Redis memory hitting full capacity.

SYSTEM STOP MOVES [[db.]merge_tree_family_table_name]If the table is small, say less than 1TB after compression (1TB here is an empirical value, we have used insert into ... select * from ... to import table data, if it is larger than 1TB, the import time will be very long and import failure may occur), after confirming that merge function is back to normal, you can choose to create temp table > insert into this temp table > select * from org table, and then drop org table > rename temp table to org table.

If the table is large, try to wake up the merge thread with the following system command after confirming that the merge function is back to normal.

SYSTEM START MERGES [[db.]merge_tree_family_table_name]If merge is slow, you can query the system.parts table to find the data parts that are already on S3, and then manually execute a query to move the small files that are on S3 back to the SSD:

ALTER TABLE table_source MOVE PART/PARTITION partition_expr TO volume 'ssd_volume'Since SSD has much higher IOPS than S3, this will speed up the merge process, and at the same time, moving the data off S3 will free up Redis memory.

3.2 Failed to read or write S3

When a data sink failure occurs, S3 is accessed through JuiceFS and no read or write operations can be performed on S3. In this case, if the data required by the query is located on S3, an S3 mount point inaccessible error will be thrown.

To solve this problem, you can first check the JuiceFS logs.

The JuiceFS logs are stored on syslog in Linux CentOS, and you can query the logs by `cat/var/log/messages|grep 'juicefs', and the corresponding log directories for different operating systems can be found in the JuiceFS community documentation [3].

The problem we encountered was send request to S3 host name certificate expired. We solved this problem by talking to the S3 development and maintenance team.

So how to monitor this kind of JuiceFS read/write S3 failure? You can monitor it with the metrics juicefs_object_request_errors provided by JuiceFS, and alert the team members if there is an error, and check the logs to locate the problem in time.

3.3 clickhouse-server startup failure

Another issue we encountered during testing was that when modifying the TTL of a replicated table (table engine with the Replicated prefix) that requires hot and cold data storage separation for historical tables, the TTL expressions in the metadata of the local .sql file of clickhouse-server did not match the TTL expressions stored on ZooKeeper.

If you restart clickhouse-server without resolving this issue, clickhouse-server will fail to start because the table structures are not aligned.

The main reason for this problem is that changes to the TTL of replicated tables modify the TTL within ZooKeeper before modifying the TTL of hosts within the same node, so if the TTL of the local machine is not modified successfully after the TTL is modified and clickhouse-server is restarted, the above problem will occur.

3.4 suspicious_broken_parts

Restarting clickhouse-server failed, throwing Error.

DB::Exception: Suspiciously many broken parts to removeThis is because ClickHouse reloads the MergeTree table engine data when restarting the service, the main code interface is

MergeTreeData::loadDataParts(bool skip_sanity_checks)In this interface, it will get the data parts of each table, determine whether there is #DELETE_ON_DESTROY_MARKER_PATH under the data part folder, i.e. check whether the delete-on-destroy.txt file exists. If it exists, the part is added to broken_parts_to_detach and the number of suspicious_broken_parts statistics is added by 1.

Then, in the scenario of hot and cold data storage separation, when data parts are sunk by TTL, the following code calls are made in the core interface move function.

MergeTreeData::moveParts->MergeTreePartsMover::swapClonedPart->MergeTreeData::swapActivePartSwap the paths of active parts in the last function. That is, as mentioned above, data parts are traceable during the move, either as active in SSD or active in S3.

void MergeTreeData::swapActivePart(MergeTreeData::DataPartPtr part_copy)

{

auto lock = lockParts();

for (auto original_active_part : getDataPartsStateRange(DataPartState::Committed)) // NOLINT (copy is intended)

{

if (part_copy->name == original_active_part->name)

{

.....

String marker_path = original_active_part->getFullRelativePath() + DELETE_ON_DESTROY_MARKER_PATH;

try

{

disk->createFile(marker_path);

}

catch (Poco::Exception & e)

...

}In this interface, the #DELETE_ON_DESTROY_MARKER_PATH file is created in the old active parts (i.e. replacing parts), thus modifying the state to DeleteOnDestory, which is used to delete the data parts of the state later when the IMergeTreeDataPart is parsed.

This is the reason why suspicious_broken_parts appears in our usage scenario, the default threshold for this value is 10, and when it is exceeded it will affect ClickHouse service startup.

There are two solutions: The first is to delete the metadata .sql file, stored data, metadata on ZooKeeper for the table that threw the error on this machine. Reboot the machine and rebuild the table and the data will be synced from the backup machine. The second one is to create force_restore_data flag under ClickHouse /flags path by the user running clickhouse-server process and then just reboot.

As you can see from the above question, after using JuiceFS+S3 to implement a separate storage architecture for hot and cold data, new components (JuiceFS+Redis+S3) were introduced, and the database usage scenarios became more flexible, and accordingly, all aspects of monitoring information should be done. A few of the more important monitoring metrics are shared here.

- JuiceFS:

juicefs_object_request_errors: JuiceFS health status monitoring of S3 reads and writes. - Redis:

Memory Usage: monitoring the memory usage of Redis. - ClickHouse:

clickhouse_merge: monitors the merge status of machines in the cluster to see if it is working properly.

4. Summary of benefits

With the separation of hot and cold data storage, we have better supported our users' data business, improved the overall cluster data storage capacity, relieved the local storage pressure on individual machines, and provided more flexible management of business data.

Before the hot and cold data separation architecture went online, the average disk utilization rate of our cluster machines was close to 85%. After going online, this figure dropped to 75% by modifying the business user table TTL. And the overall cluster supported two new data services on top of the original business volume.Without going live with the hot and cold storage architecture, our cluster would have been unable to take on the new projects due to insufficient disk usage before the expansion. Currently, we are sinking more than 90TB of data (after compression) to the remote S3.

In the future, Shopee ClickHouse will continue to develop more useful features and will continue to evolve the product architecture. Currently JuiceFS is very stable in our production environment and we will further use JuiceFS to access HDFS and thus implement the Shopee ClickHouse storage compute separation architecture.

The version information of each product component mentioned in this article is as follows:

- Shopee ClickHouse: Currently based on the community version ClickHouse 20.8.12.2-LTS version

- JuiceFS: v0.14.2

- Redis: v6.2.2, sentinel model, enable AOF (the policy is Every Secs), and enable RDB (the policy is one backup per day)

- S3: Provided by the Shopee STO team

- Ozone: 1.1.0-SNAPSHOT

Related Links

- JuiceFS: https://github.com/juicedata/juicefs/blob/main/docs/zh_cn/redis_best_practices.md

- ClickHouse Community Documentation: https://clickhouse.tech/docs/en/getting-started/example-datasets/star-schema

- JuiceFS Community Documentation: https://github.com/juicedata/juicefs/blob/main/docs/zh_cn/fault_diagnosis_and_analysis.md

Author of this article

Teng, graduated from National University of Singapore, is from Shopee Data Infra team.

Original URL

https://mp.weixin.qq.com/s/eELcw0v2U9UXaHnfADj3tQ

English translator

JuiceFS team