This article is translated from the blog post published on ArthurChiao's Blog.

JuiceFS is a distributed file system built on top of object storage systems (like S3, Ceph, and OSS). Simply put:

- Object storage: Can only be accessed via key-value pairs.

- File system: Provides a familiar directory structure that allows operations like ls, cat, find, and truncate.
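To make the difference concrete, here is a toy Go sketch (not JuiceFS code). The store holds only flat keys, so a directory listing has to be emulated by grouping keys on a shared prefix. This is roughly what an object-store LIST call with a prefix and a "/" delimiter does. The helper name listDir is hypothetical.

```go
package main

import (
	"fmt"
	"sort"
	"strings"
)

// listDir emulates `ls dir` on a flat key space: it returns the immediate
// children of dir, treating "/" as a delimiter. Directories do not exist in
// the store; a "subdirectory" is only a shared key prefix.
func listDir(keys []string, dir string) []string {
	prefix := strings.TrimSuffix(dir, "/") + "/"
	if dir == "" || dir == "/" {
		prefix = ""
	}
	seen := map[string]bool{}
	for _, k := range keys {
		if !strings.HasPrefix(k, prefix) {
			continue
		}
		rest := strings.TrimPrefix(k, prefix)
		if i := strings.Index(rest, "/"); i >= 0 {
			rest = rest[:i+1] // collapse deeper keys into one "dir/" entry
		}
		if rest != "" {
			seen[rest] = true
		}
	}
	out := make([]string, 0, len(seen))
	for name := range seen {
		out = append(out, name)
	}
	sort.Strings(out)
	return out
}

func main() {
	// Opaque keys: the store itself knows nothing about directories.
	keys := []string{"data/a.txt", "data/logs/1.log", "data/logs/2.log", "readme.md"}
	fmt.Println(listDir(keys, "data"))
	fmt.Println(listDir(keys, "/"))
}
```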

This article provides a high-level overview of what happens in the JuiceFS CSI solution when creating a pod with a Persistent Volume (PV) and how the pod reads and writes to the PV. It aims to facilitate a quick understanding of the K8s CSI mechanism and the basic working principles of JuiceFS.

Background knowledge

K8s CSI

The Container Storage Interface (CSI) is a standard for exposing arbitrary block and file storage systems to containerized workloads on Container Orchestration Systems (COS) like Kubernetes (K8s).

—Kubernetes CSI Developer Documentation

CSI is a storage mechanism supported by K8s that offers excellent extensibility. Any storage solution can integrate with K8s by implementing certain interfaces according to the specification.

Generally, a storage solution needs to deploy a service known as a "CSI plugin" on each node. The kubelet calls this plugin during the creation of pods with PVs. Note that:

- K8s' CNI plugins are executable files placed in /opt/cni/bin/, which the kubelet runs directly when creating pod networks.

- K8s' CSI plugins are services (which might be better understood as agents), and the kubelet communicates with them via gRPC during PV initialization.

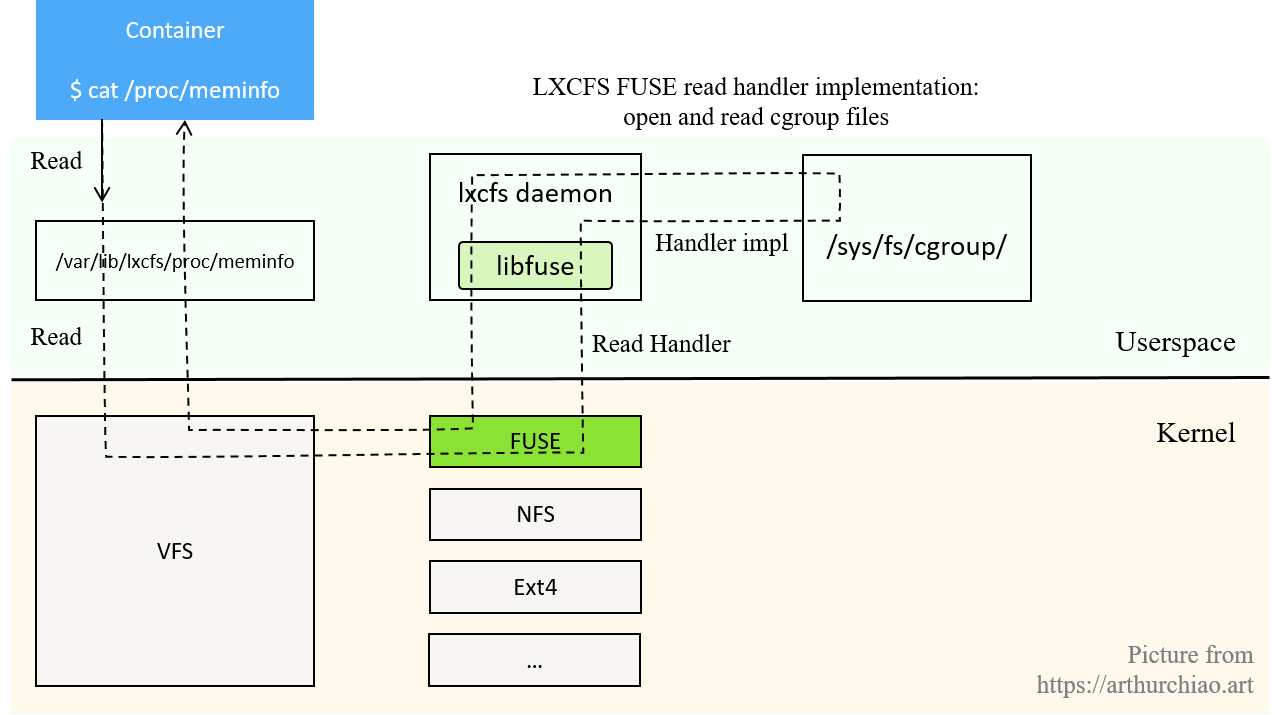

FUSE

Filesystem in Userspace (FUSE) is a framework that lets developers implement file systems in user space instead of in the kernel, which makes it much easier to build a custom file system.

Here’s a basic overview using lxcfs (which is unrelated to JuiceFS but is also a FUSE file system) to illustrate FUSE's working principle:

JuiceFS uses FUSE to implement a user-space file system. The community documentation gives a brief overview: traditionally, implementing a FUSE file system means using the Linux libfuse library, which provides two types of APIs:

- High-level APIs: Based on file names and paths. Internally, libfuse simulates a virtual file system (VFS) tree and exposes path-based APIs. This suits systems whose metadata is exposed through path-based APIs, such as HDFS or S3. If the metadata is organized as an inode-based directory tree, converting inodes to paths and back to inodes can hurt performance.

- Low-level APIs: Based on inodes. The kernel's VFS interacts with the FUSE library using low-level APIs.

JuiceFS organizes its metadata by inode, so it implements the low-level API. It relies on go-fuse rather than libfuse, which keeps the implementation simple and performant.
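The cost of the path-based API can be illustrated with a toy inode table in Go. This is a hypothetical model: Meta, Lookup, and ResolvePath are illustrative names, not go-fuse or JuiceFS APIs. A low-level, inode-based request names a parent inode plus a single entry name, while a path-based request forces an inode-organized backend to walk the whole path from the root on every call.

```go
package main

import (
	"errors"
	"fmt"
	"strings"
)

// Ino identifies a node in an inode-organized metadata tree.
type Ino uint64

const rootIno Ino = 1

// entryKey is a (parent inode, name) pair: what a low-level LOOKUP carries.
type entryKey struct {
	parent Ino
	name   string
}

// Meta is a toy inode-based metadata store (hypothetical).
type Meta struct {
	entries map[entryKey]Ino
	lookups int // number of metadata accesses performed
}

// Lookup resolves one name inside a directory: a single metadata access.
func (m *Meta) Lookup(parent Ino, name string) (Ino, error) {
	m.lookups++
	ino, ok := m.entries[entryKey{parent, name}]
	if !ok {
		return 0, errors.New("no such entry: " + name)
	}
	return ino, nil
}

// ResolvePath is what a path-based API forces on an inode-based backend:
// every call re-walks the path component by component from the root.
func (m *Meta) ResolvePath(path string) (Ino, error) {
	ino := rootIno
	for _, part := range strings.Split(strings.Trim(path, "/"), "/") {
		if part == "" {
			continue
		}
		next, err := m.Lookup(ino, part)
		if err != nil {
			return 0, err
		}
		ino = next
	}
	return ino, nil
}

func main() {
	m := &Meta{entries: map[entryKey]Ino{
		{rootIno, "a"}: 2,
		{2, "b"}:       3,
		{3, "c.txt"}:   4,
	}}
	ino, _ := m.ResolvePath("/a/b/c.txt")
	fmt.Println("path-based:", ino, "lookups:", m.lookups)

	// The kernel already holds the parent inode (3), so one lookup suffices.
	m.lookups = 0
	ino, _ = m.Lookup(3, "c.txt")
	fmt.Println("inode-based:", ino, "lookups:", m.lookups)
}
```

Resolving /a/b/c.txt by path costs three metadata lookups here, whereas the inode-based request costs one, because the kernel's VFS already knows the parent inode.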

JuiceFS modes of operation

JuiceFS has several deployment modes:

- Process mount mode: The JuiceFS client runs in the CSI Node plugin container and mounts all required JuiceFS PVs inside this container as processes.

- CSI mode, which can be further divided into two types:

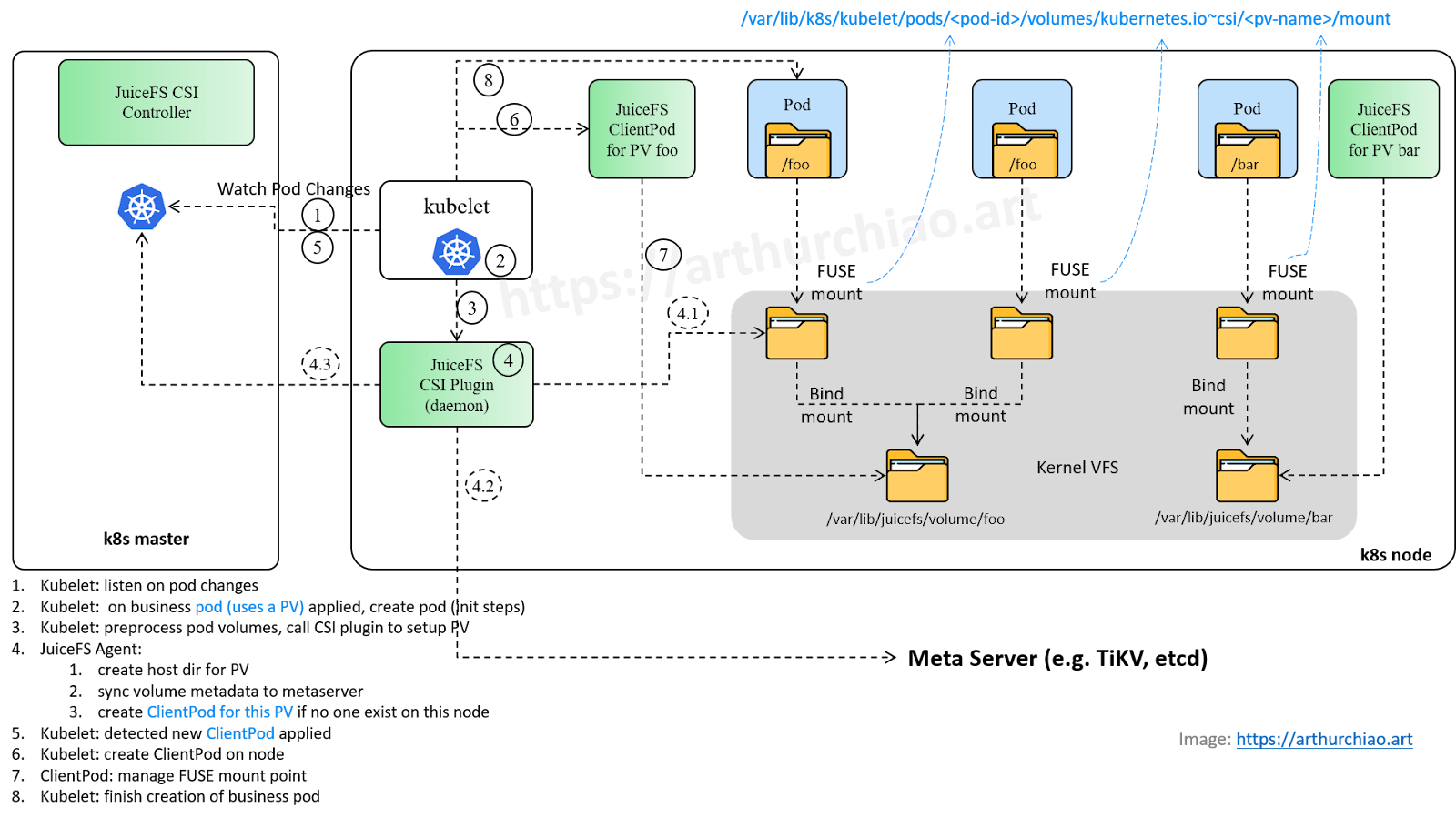

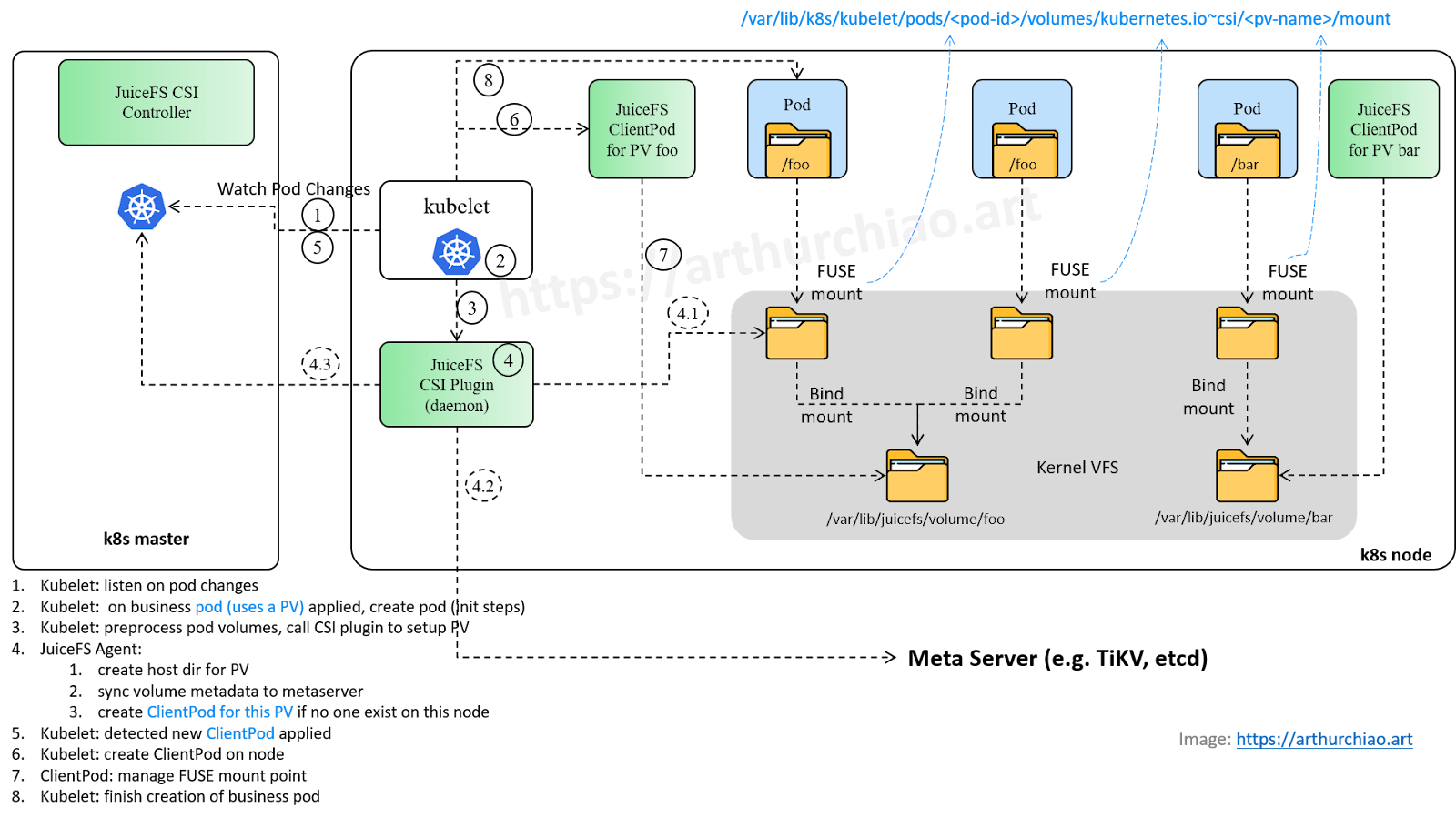

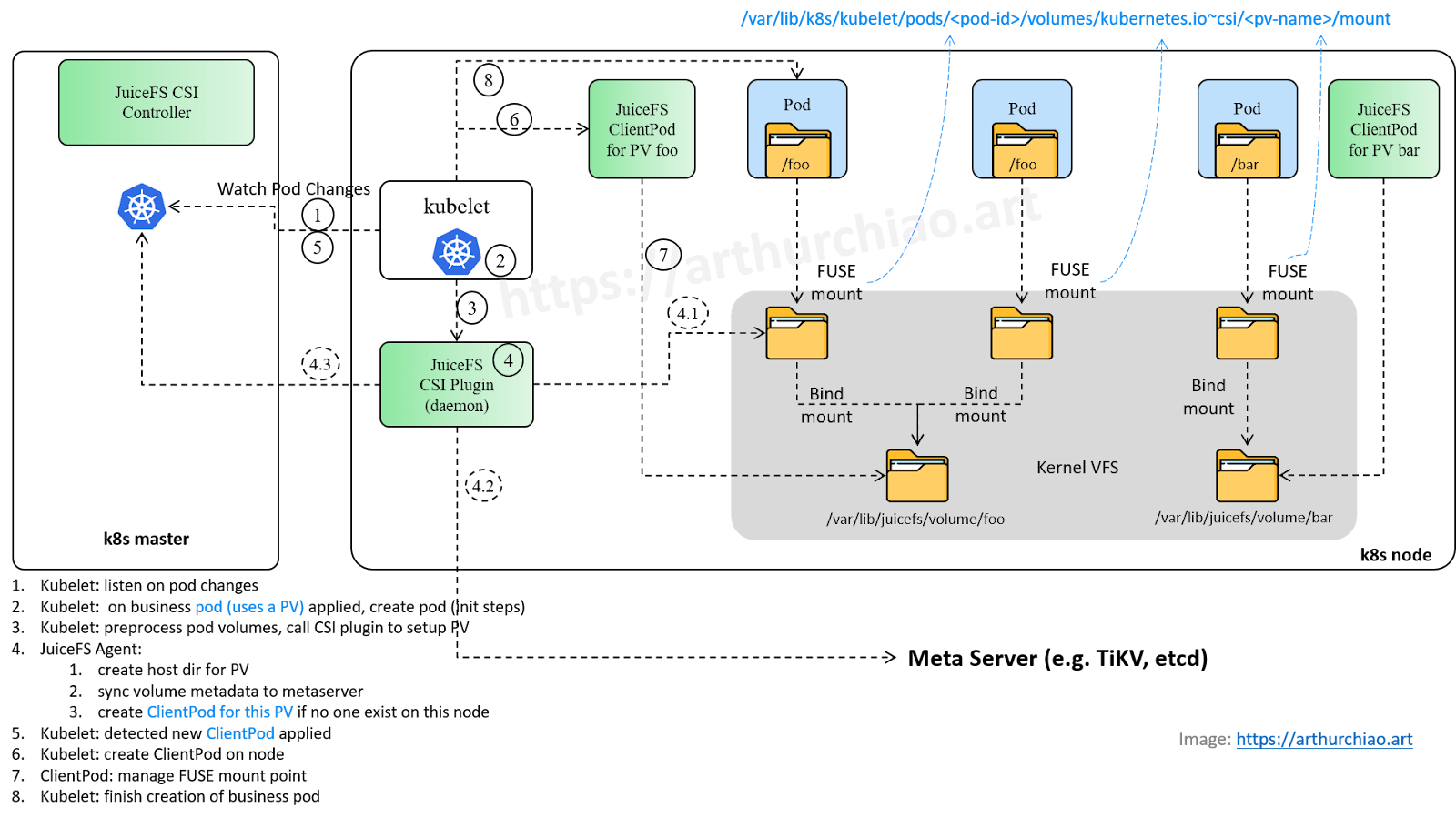

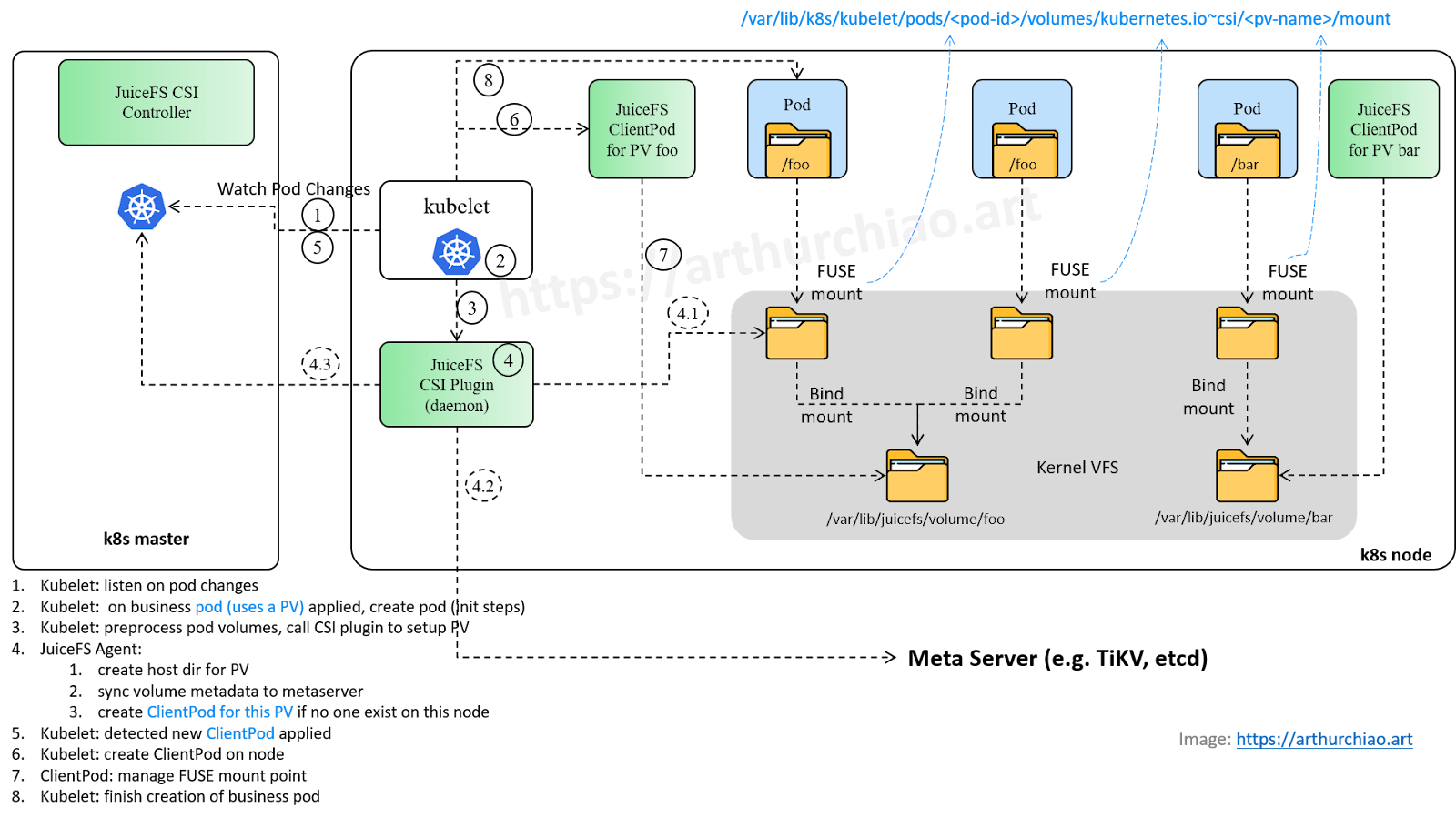

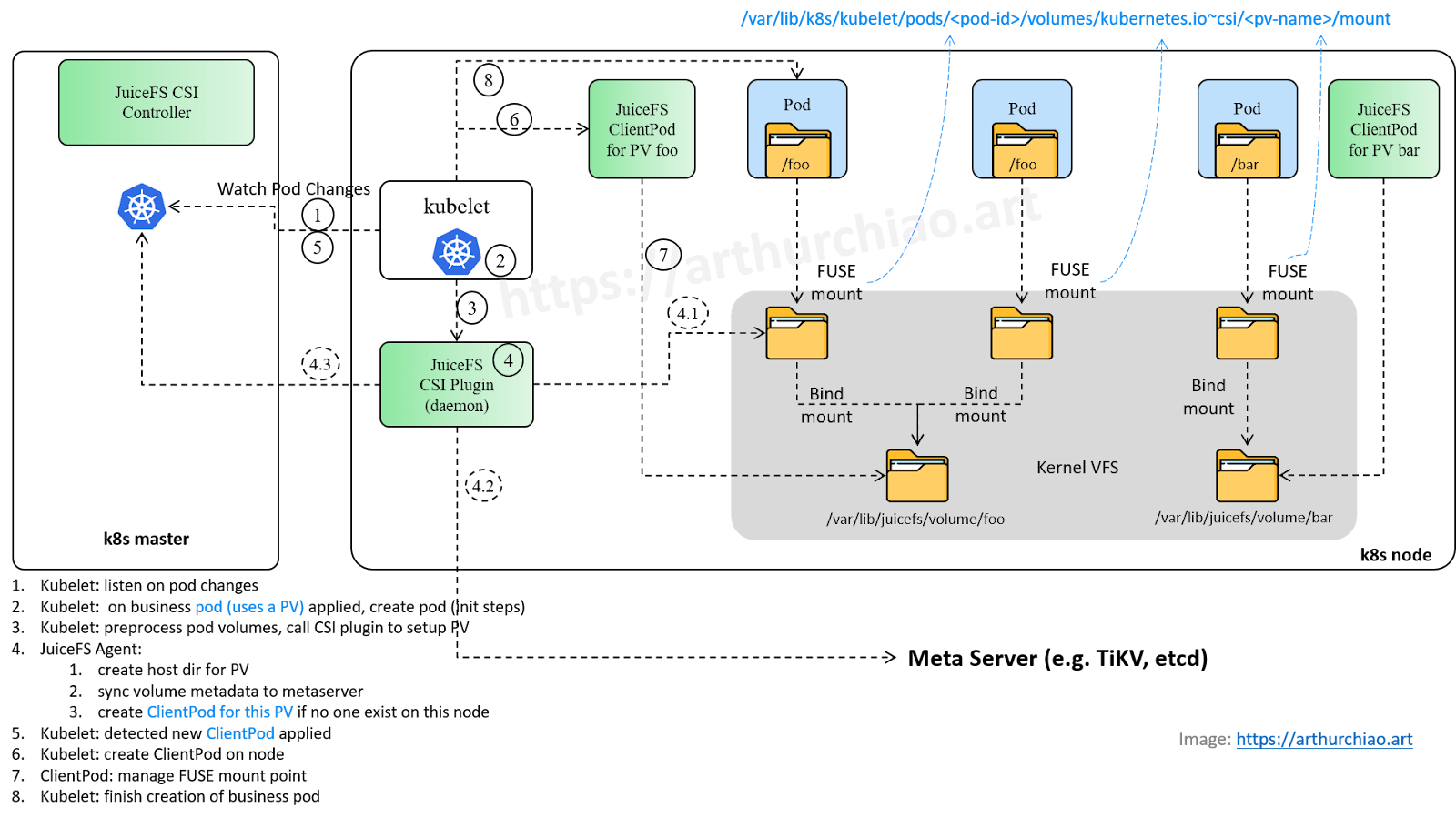

- Mount Pod mode: For each PV used by pods on a node, the CSI plugin dynamically creates a caretaker pod on that node. The Mount Pod is per PV rather than per business pod: if multiple business pods use the same PV, only one Mount Pod is created, as shown in the figure below.

  - The Mount Pod contains the JuiceFS client and handles all JuiceFS read and write operations for the business pods. For clarity, this article also refers to the Mount Pod as a dynamic client pod or client pod.

  - This is the default working mode of JuiceFS CSI.

  - The Mount Pod needs privileged permissions to use FUSE.

  - If the client pod restarts, the business pod may be unable to read or write until the client pod is back up.

- CSI sidecar mode: A sidecar container is created for each business pod that uses a JuiceFS PV.

  - The sidecar is per pod.

  - The sidecar is not created by the JuiceFS plugin. Instead, the CSI Controller registers a webhook that intercepts pod creation and automatically injects the sidecar into the pod YAML, similar to how Istio injects the Envoy container.

  - If the sidecar restarts, the business pod must be rebuilt to recover. The sidecar also needs privileged permissions for FUSE, which is risky because every sidecar can see all devices on the node. This mode is therefore not recommended.
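The difference in how many JuiceFS clients each CSI mode runs can be sketched in Go. The Pod type and both counting functions are hypothetical; they only encode the rules stated above: one Mount Pod per (node, PV) pair versus one sidecar per business pod that uses a JuiceFS PV.

```go
package main

import "fmt"

// Pod is a minimal stand-in for a business pod (hypothetical type).
type Pod struct {
	Name string
	Node string
	PVs  []string // JuiceFS PVs the pod mounts
}

// mountPodCount: Mount Pod mode runs one client per (node, PV) pair,
// however many business pods on that node share the PV.
func mountPodCount(pods []Pod) int {
	set := map[[2]string]bool{}
	for _, p := range pods {
		for _, pv := range p.PVs {
			set[[2]string{p.Node, pv}] = true
		}
	}
	return len(set)
}

// sidecarCount: sidecar mode injects one client container into every
// business pod that uses a JuiceFS PV.
func sidecarCount(pods []Pod) int {
	n := 0
	for _, p := range pods {
		if len(p.PVs) > 0 {
			n++
		}
	}
	return n
}

func main() {
	pods := []Pod{
		{"pod1", "node1", []string{"juicefs-volume1-pv"}},
		{"pod2", "node1", []string{"juicefs-volume1-pv"}}, // shares node1's client
		{"pod3", "node2", []string{"juicefs-volume1-pv"}},
	}
	fmt.Println("mount pods:", mountPodCount(pods))
	fmt.Println("sidecars:", sidecarCount(pods))
}
```

With three business pods sharing one PV across two nodes, Mount Pod mode needs two clients while sidecar mode needs three.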

Next, let’s look at what happens in K8s when a business pod that mounts a PV is created, and what the K8s and JuiceFS components do along the way.

What happens when creating a pod using a PV

Workflow when a business pod is created (JuiceFS Mount Pod mode):

Step 1: The kubelet starts and listens for changes in pod resources across the cluster.

As the K8s agent on each node, the kubelet listens for changes to pod resources in the K8s cluster after startup. Specifically, it receives events for pod creation, updates, and deletions from the kube-apiserver.

Step 2: The kubelet receives a business pod creation event and begins creating the pod.

Upon receiving a pod creation event, the kubelet first checks if the pod is under its management (whether the nodeName in spec matches this node). If yes, it starts creating the pod.
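A minimal Go sketch of this decision, assuming a simplified event type (PodEvent and handle are hypothetical, not kubelet types): the node agent ignores events for pods whose spec.nodeName names another node and acts only on its own pods.

```go
package main

import "fmt"

// EventType mirrors the kinds of pod events received from kube-apiserver.
type EventType string

const (
	Added   EventType = "ADD"
	Updated EventType = "UPDATE"
	Deleted EventType = "DELETE"
)

// PodEvent is a simplified pod change notification (hypothetical).
type PodEvent struct {
	Type     EventType
	PodName  string
	NodeName string // spec.nodeName, set by the scheduler
}

// handle decides what the agent on node `self` does with an event.
func handle(self string, ev PodEvent) string {
	if ev.NodeName != self {
		return "ignore" // pod is managed by another node's kubelet
	}
	switch ev.Type {
	case Added:
		return "create " + ev.PodName
	case Updated:
		return "sync " + ev.PodName
	case Deleted:
		return "kill " + ev.PodName
	}
	return "ignore"
}

func main() {
	events := make(chan PodEvent, 3)
	events <- PodEvent{Added, "pod1", "node1"}
	events <- PodEvent{Added, "pod9", "node2"}
	events <- PodEvent{Deleted, "pod1", "node1"}
	close(events)
	for ev := range events {
		fmt.Println(handle("node1", ev))
	}
}
```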

1. Create a business pod

kubelet.INFO contains detailed information:

10:05:57.410 Receiving a new pod "pod1(<pod1-id>)"

10:05:57.411 SyncLoop (ADD, "api"): "pod1(<pod1-id>)"

10:05:57.411 Needs to allocate 2 "nvidia.com/gpu" for pod "<pod1-id>" container "container1"

10:05:57.411 Needs to allocate 1 "our-corp.com/ip" for pod "<pod1-id>" container "container1"

10:05:57.413 Cgroup has some missing paths: [/sys/fs/cgroup/pids/kubepods/burstable/pod<pod1-id> /sys/fs/cgroup/systemd/kubepods/burstable/pod<pod1-id> /sys/fs/cgroup/cpuset/kubepods/burstable/pod<pod1-id> /sys/fs/cgroup/memory/kubepods/burstable/pod<pod1-id> /sys/fs/cgroup/cpu,cpuacct/kubepods/burstable/pod<pod1-id> /sys/fs/cgroup/cpu,cpuacct/kubepods/burstable/pod<pod1-id> /sys/fs/cgroup/hugetlb/kubepods/burstable/pod<pod1-id>]

10:05:57.413 Cgroup has some missing paths: [/sys/fs/cgroup/memory/kubepods/burstable/pod<pod1-id> /sys/fs/cgroup/systemd/kubepods/burstable/pod<pod1-id> /sys/fs/cgroup/cpu,cpuacct/kubepods/burstable/pod<pod1-id> /sys/fs/cgroup/cpu,cpuacct/kubepods/burstable/pod<pod1-id> /sys/fs/cgroup/hugetlb/kubepods/burstable/pod<pod1-id> /sys/fs/cgroup/pids/kubepods/burstable/pod<pod1-id> /sys/fs/cgroup/cpuset/kubepods/burstable/pod<pod1-id>]

10:05:57.413 Cgroup has some missing paths: [/sys/fs/cgroup/cpu,cpuacct/kubepods/burstable/pod<pod1-id> /sys/fs/cgroup/pids/kubepods/burstable/pod<pod1-id> /sys/fs/cgroup/cpuset/kubepods/burstable/pod<pod1-id> /sys/fs/cgroup/systemd/kubepods/burstable/pod<pod1-id> /sys/fs/cgroup/memory/kubepods/burstable/pod<pod1-id> /sys/fs/cgroup/cpu,cpuacct/kubepods/burstable/pod<pod1-id> /sys/fs/cgroup/hugetlb/kubepods/burstable/pod<pod1-id>]

10:05:57.415 Using factory "raw" for container "/kubepods/burstable/pod<pod1-id>"

10:05:57.415 Added container: "/kubepods/burstable/pod<pod1-id>" (aliases: [], namespace: "")

10:05:57.419 Waiting for volumes to attach and mount for pod "pod1(<pod1-id>)"

10:05:57.432 SyncLoop (RECONCILE, "api"): "pod1(<pod1-id>)"

10:05:57.471 Added volume "meminfo" (volSpec="meminfo") for pod "<pod1-id>" to desired state.

10:05:57.471 Added volume "cpuinfo" (volSpec="cpuinfo") for pod "<pod1-id>" to desired state.

10:05:57.471 Added volume "stat" (volSpec="stat") for pod "<pod1-id>" to desired state.

10:05:57.480 Added volume "share-dir" (volSpec="pvc-6ee43741-29b1-4aa0-98d3-5413764d36b1") for pod "<pod1-id>" to desired state.

10:05:57.484 Added volume "data-dir" (volSpec="juicefs-volume1-pv") for pod "<pod1-id>" to desired state.

...

You can see that the required resources for the pod are processed sequentially:

- Devices, such as GPUs

- IP addresses

- cgroup resource isolation configurations

- Volumes

This article focuses on volume resources.

2. Handle the volumes required by the pod.

From the logs, you can see that the business pod declares several volumes, including:

- HostPath type: Directly mounts a node path into the container.

- LXCFS type: Gives the container an accurate view of its own resources (the meminfo, cpuinfo, and stat volumes in the log above).

- Dynamic/static PV types: The JuiceFS volume falls into this category.

The kubelet logs:

# kubelet.INFO

10:05:57.509 operationExecutor.VerifyControllerAttachedVolume started for volume "xxx"

10:05:57.611 Starting operationExecutor.MountVolume for volume "xxx" (UniqueName: "kubernetes.io/host-path/<pod1-id>-xxx") pod "pod1" (UID: "<pod1-id>")

10:05:57.611 operationExecutor.MountVolume started for volume "juicefs-volume1-pv" (UniqueName: "kubernetes.io/csi/csi.juicefs.com^juicefs-volume1-pv") pod "pod1" (UID: "<pod1-id>")

10:05:57.611 kubernetes.io/csi: mounter.GetPath generated [/var/lib/k8s/kubelet/pods/<pod1-id>/volumes/kubernetes.io~csi/juicefs-volume1-pv/mount]

10:05:57.611 kubernetes.io/csi: created path successfully [/var/lib/k8s/kubelet/pods/<pod1-id>/volumes/kubernetes.io~csi/juicefs-volume1-pv]

10:05:57.611 kubernetes.io/csi: saving volume data file [/var/lib/k8s/kubelet/pods/<pod1-id>/volumes/kubernetes.io~csi/juicefs-volume1-pv/vol_data.json]

10:05:57.611 kubernetes.io/csi: volume data file saved successfully [/var/lib/k8s/kubelet/pods/<pod1-id>/volumes/kubernetes.io~csi/juicefs-volume1-pv/vol_data.json]

10:05:57.613 MountVolume.MountDevice succeeded for volume "juicefs-volume1-pv" (UniqueName: "kubernetes.io/csi/csi.juicefs.com^juicefs-volume1-pv") pod "pod1" (UID: "<pod1-id>") device mount path "/var/lib/k8s/kubelet/plugins/kubernetes.io/csi/pv/juicefs-volume1-pv/globalmount"

10:05:57.616 kubernetes.io/csi: mounter.GetPath generated [/var/lib/k8s/kubelet/pods/<pod1-id>/volumes/kubernetes.io~csi/juicefs-volume1-pv/mount]

10:05:57.616 kubernetes.io/csi: Mounter.SetUpAt(/var/lib/k8s/kubelet/pods/<pod1-id>/volumes/kubernetes.io~csi/juicefs-volume1-pv/mount)

10:05:57.616 kubernetes.io/csi: created target path successfully [/var/lib/k8s/kubelet/pods/<pod1-id>/volumes/kubernetes.io~csi/juicefs-volume1-pv/mount]

10:05:57.618 kubernetes.io/csi: calling NodePublishVolume rpc [volid=juicefs-volume1-pv,target_path=/var/lib/k8s/kubelet/pods/<pod1-id>/volumes/kubernetes.io~csi/juicefs-volume1-pv/mount]

10:05:57.713 Starting operationExecutor.MountVolume for volume "juicefs-volume1-pv" (UniqueName: "kubernetes.io/csi/csi.juicefs.com^juicefs-volume1-pv") pod "pod1" (UID: "<pod1-id>")

...

10:05:59.506 kubernetes.io/csi: mounter.SetUp successfully requested NodePublish [/var/lib/k8s/kubelet/pods/<pod1-id>/volumes/kubernetes.io~csi/juicefs-volume1-pv/mount]

10:05:59.506 MountVolume.SetUp succeeded for volume "juicefs-volume1-pv" (UniqueName: "kubernetes.io/csi/csi.juicefs.com^juicefs-volume1-pv") pod "pod1" (UID: "<pod1-id>")

10:05:59.506 kubernetes.io/csi: mounter.GetPath generated [/var/lib/k8s/kubelet/pods/<pod1-id>/volumes/kubernetes.io~csi/juicefs-volume1-pv/mount]

For each volume, it executes these operations:

- The operationExecutor.VerifyControllerAttachedVolume() method is called to perform some checks.

- The operationExecutor.MountVolume() method mounts the specified volume to the container's directory.

- For CSI storage, the NodePublishVolume() method of the CSI plugin is also called to initialize the corresponding PV, which is the case for JuiceFS.

The kubelet will continuously check whether all volumes are properly mounted; if they are not, it will not proceed to the next step (creating the sandbox container).

Step 3: kubelet --> CSI plugin (JuiceFS): Set up PV

Let’s take a closer look at the logic for initializing PV mounts in the node CSI plugin. The call stack is as follows:

kubelet ----- gRPC NodePublishVolume() -----> juicefs node plugin (also called "driver", etc.)

Step 4: What the JuiceFS CSI plugin does

Let’s check the logs of the JuiceFS CSI node plugin directly on the machine:

(node) $ docker logs --timestamps k8s_juicefs-plugin_juicefs-csi-node-xxx | grep juicefs-volume1

10:05:57.619 NodePublishVolume: volume_id is juicefs-volume1-pv

10:05:57.619 NodePublishVolume: creating dir /var/lib/k8s/kubelet/pods/<pod1-id>/volumes/kubernetes.io~csi/juicefs-volume1-pv/mount

10:05:57.620 ceFormat cmd: [/usr/local/bin/juicefs format --storage=OSS --bucket=xx --access-key=xx --secret-key=${secretkey} --token=${token} ${metaurl} juicefs-volume1]

10:05:57.874 Format output is juicefs <INFO>: Meta address: tikv://node1:2379,node2:2379,node3:2379/juicefs-volume1

10:05:57.874 cefs[1983] <INFO>: Data use oss://<bucket>/juicefs-volume1/

10:05:57.875 Mount: mounting "tikv://node1:2379,node2:2379,node3:2379/juicefs-volume1" at "/jfs/juicefs-volume1-pv" with options [token=xx]

10:05:57.884 createOrAddRef: Need to create pod juicefs-node1-juicefs-volume1-pv.

10:05:57.891 createOrAddRed: GetMountPodPVC juicefs-volume1-pv, err: %!s(<nil>)

10:05:57.891 ceMount: mount tikv://node1:2379,node2:2379,node3:2379/juicefs-volume1 at /jfs/juicefs-volume1-pv

10:05:57.978 createOrUpdateSecret: juicefs-node1-juicefs-volume1-pv-secret, juicefs-system

10:05:59.500 waitUtilPodReady: Pod juicefs-node1-juicefs-volume1-pv is successful

10:05:59.500 NodePublishVolume: binding /jfs/juicefs-volume1-pv at /var/lib/k8s/kubelet/pods/<pod1-id>/volumes/kubernetes.io~csi/juicefs-volume1-pv/mount with options []

10:05:59.505 NodePublishVolume: mounted juicefs-volume1-pv at /var/lib/k8s/kubelet/pods/<pod1-id>/volumes/kubernetes.io~csi/juicefs-volume1-pv/mount with options []

The logs show that the NodePublishVolume() method is executed. Each CSI plugin implements this method itself, so what it does depends on the storage solution. Let’s examine what the JuiceFS plugin does in detail.

1. Create mount path for pod PV and initialize the volume

By default, each pod corresponds to a storage path on the node:

(node) $ ll /var/lib/k8s/kubelet/pods/<pod-id>

containers/

etc-hosts

plugins/

volumes/

The JuiceFS plugin creates a corresponding subdirectory and mount point within the volumes/ directory:

/var/lib/k8s/kubelet/pods/<pod1-id>/volumes/kubernetes.io~csi/juicefs-volume1-pv/mount
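Both kubelet paths that appear in the logs can be derived mechanically. A small Go sketch, assuming the kubelet root directory /var/lib/k8s/kubelet used in this environment (the upstream default is /var/lib/kubelet, configurable with the kubelet's --root-dir flag); the function names are hypothetical.

```go
package main

import (
	"fmt"
	"path/filepath"
)

// kubeletRootDir is taken from the logs in this article.
const kubeletRootDir = "/var/lib/k8s/kubelet"

// csiTargetPath: per-pod mount point passed to NodePublishVolume as target_path.
func csiTargetPath(root, podUID, pvName string) string {
	return filepath.Join(root, "pods", podUID, "volumes", "kubernetes.io~csi", pvName, "mount")
}

// csiGlobalMountPath: node-wide path from the "MountVolume.MountDevice succeeded" log line.
func csiGlobalMountPath(root, pvName string) string {
	return filepath.Join(root, "plugins", "kubernetes.io", "csi", "pv", pvName, "globalmount")
}

func main() {
	fmt.Println(csiTargetPath(kubeletRootDir, "<pod1-id>", "juicefs-volume1-pv"))
	fmt.Println(csiGlobalMountPath(kubeletRootDir, "juicefs-volume1-pv"))
}
```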

It then formats the volume using the JuiceFS command-line tool:

$ /usr/local/bin/juicefs format --storage=OSS --bucket=xx --access-key=xx --secret-key=${secretkey} --token=${token} ${metaurl} juicefs-volume1

2. Write volume mount information to Meta Server

The mount information is synchronized with JuiceFS' Meta Server, which is TiKV in this example.

3. JuiceFS plugin: Create a client pod if it doesn’t exist

The JuiceFS CSI plugin checks whether a client pod for this PV already exists on the node. If not, the plugin creates one; if so, the existing client pod is reused.

When the last business pod using a PV is destroyed, the corresponding client pod is automatically removed by the JuiceFS CSI plugin.
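The create-or-reuse and cleanup behavior amounts to reference counting. The Go sketch below is a hypothetical in-memory model (RefTracker, AddRef, and DelRef are illustrative names, loosely echoing the createOrAddRef log entry); a real plugin would need to keep this state somewhere durable so that it survives plugin restarts. The client pod name follows the juicefs-<node>-<PV name> pattern visible in the logs.

```go
package main

import (
	"fmt"
	"sync"
)

// mountPodName: e.g. juicefs-node1-juicefs-volume1-pv, as seen in the logs.
func mountPodName(node, pv string) string {
	return fmt.Sprintf("juicefs-%s-%s", node, pv)
}

// RefTracker maps each client pod name to the set of business pod UIDs
// that still use it (hypothetical, in-memory only).
type RefTracker struct {
	mu   sync.Mutex
	refs map[string]map[string]bool
}

func NewRefTracker() *RefTracker {
	return &RefTracker{refs: map[string]map[string]bool{}}
}

// AddRef records a user; create is true if a client pod must be created.
func (t *RefTracker) AddRef(node, pv, podUID string) (name string, create bool) {
	t.mu.Lock()
	defer t.mu.Unlock()
	name = mountPodName(node, pv)
	set, ok := t.refs[name]
	if !ok {
		set = map[string]bool{}
		t.refs[name] = set
		create = true
	}
	set[podUID] = true
	return name, create
}

// DelRef drops a user; remove is true when the last reference is gone.
func (t *RefTracker) DelRef(node, pv, podUID string) (name string, remove bool) {
	t.mu.Lock()
	defer t.mu.Unlock()
	name = mountPodName(node, pv)
	set, ok := t.refs[name]
	if !ok {
		return name, false
	}
	delete(set, podUID)
	if len(set) == 0 {
		delete(t.refs, name)
		return name, true
	}
	return name, false
}

func main() {
	t := NewRefTracker()
	for _, uid := range []string{"pod1-uid", "pod2-uid"} {
		name, create := t.AddRef("node1", "juicefs-volume1-pv", uid)
		fmt.Println("add", uid, name, "create:", create)
	}
	for _, uid := range []string{"pod1-uid", "pod2-uid"} {
		name, remove := t.DelRef("node1", "juicefs-volume1-pv", uid)
		fmt.Println("del", uid, name, "remove:", remove)
	}
}
```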

In our environment, we use a dynamic client pod approach, so we see the following logs:

(node) $ docker logs --timestamps <csi plugin container> | grep

...

10:05:57.884 createOrAddRef: Need to create pod juicefs-node1-juicefs-volume1-pv.

10:05:57.891 createOrAddRed: GetMountPodPVC juicefs-volume1-pv, err: %!s(<nil>)

10:05:57.891 ceMount: mount tikv://node1:2379,node2:2379,node3:2379/juicefs-volume1 at /jfs/juicefs-volume1-pv

10:05:57.978 createOrUpdateSecret: juicefs-node1-juicefs-volume1-pv-secret, juicefs-system

10:05:59.500 waitUtilPodReady:

The JuiceFS node plugin creates a dynamic client pod named juicefs-{node}-{volume}-pv in K8s.

Step 5: The kubelet listens for client pod creation events

At this point, the business pod hasn’t been created yet, but its corresponding JuiceFS client pod is being created:

(node) $ grep juicefs-<node>-<volume>-pv /var/log/kubernetes/kubelet.INFO | grep "received "

10:05:58.288 SyncPod received new pod "juicefs-node1-volume1-pv_juicefs-system", will create a sandbox for it

Next comes the creation of the JuiceFS dynamic client pod. Without it, the business pod could not read or write the JuiceFS volume even if it were running.

Step 6: The kubelet creates a client pod

The process for creating the client pod is similar to that of the business pod, but simpler. We’ll skip the details and assume it starts up directly.

To check the processes running in this client pod:

(node) $ dk top k8s_jfs-mount_juicefs-node1-juicefs-volume1-pv-xx

/bin/mount.juicefs ${metaurl} /jfs/juicefs-volume1-pv -o enable-xattr,no-bgjob,allow_other,token=xxx,metrics=0.0.0.0:9567

/bin/mount.juicefs is merely a symlink to the juicefs executable:

(pod) $ ls -ahl /bin/mount.juicefs

/bin/mount.juicefs -> /usr/local/bin/juicefs

Step 7: Client pod initialization and FUSE mount

Let’s see what this client pod does:

root@node:~ # dk logs k8s_jfs-mount_juicefs-node1-juicefs-volume1-pv-xx

<INFO>: Meta address: tikv://node1:2379,node2:2379,node3:2379/juicefs-volume1

<INFO>: Data use oss://<oss-bucket>/juicefs-volume1/

<INFO>: Disk cache (/var/jfsCache/<id>/): capacity (10240 MB), free ratio (10%), max pending pages (15)

<INFO>: Create session 667 OK with version: admin-1.2.1+2022-12-22.34c7e973

<INFO>: listen on 0.0.0.0:9567

<INFO>: Mounting volume juicefs-volume1 at /jfs/juicefs-volume1-pv ...

<INFO>: OK, juicefs-volume1 is ready at /jfs/juicefs-volume1-pv

This step initializes the local volume configuration, interacts with the Meta Server, and exposes Prometheus metrics. It then uses JuiceFS' own mount implementation (the /bin/mount.juicefs mentioned earlier) to mount the volume at /jfs/juicefs-volume1-pv, which typically corresponds to /var/lib/juicefs/volume/juicefs-volume1-pv on the node.

At this point, you can observe the following mount information on the node:

(node) $ cat /proc/mounts | grep JuiceFS:juicefs-volume1

JuiceFS:juicefs-volume1 /var/lib/juicefs/volume/juicefs-volume1-pv fuse.juicefs rw,relatime,user_id=0,group_id=0,default_permissions,allow_other 0 0

JuiceFS:juicefs-volume1 /var/lib/k8s/kubelet/pods/<pod-id>/volumes/kubernetes.io~csi/juicefs-volume1-pv/mount fuse.juicefs rw,relatime,user_id=0,group_id=0,default_permissions,allow_other 0 0

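Both entries are mounted with the fuse.juicefs type. The first is the client pod's FUSE mount point on the node; the second, with the same source, is the bind mount into the business pod's volume directory that NodePublishVolume created.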
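To find these mounts programmatically, one can filter /proc/mounts by file system type. The Go sketch below does that on the sample lines above; juicefsMounts is a hypothetical helper, and it reads a string rather than the live file so that it runs anywhere.

```go
package main

import (
	"bufio"
	"fmt"
	"strings"
)

// MountEntry holds the first four fields of a /proc/mounts line.
type MountEntry struct {
	Source, Target, FSType, Options string
}

// juicefsMounts returns entries whose file system type is fuse.juicefs.
// Note: /proc/mounts escapes spaces in paths as \040; not unescaped here.
func juicefsMounts(procMounts string) []MountEntry {
	var out []MountEntry
	sc := bufio.NewScanner(strings.NewReader(procMounts))
	for sc.Scan() {
		f := strings.Fields(sc.Text())
		if len(f) < 4 || f[2] != "fuse.juicefs" {
			continue
		}
		out = append(out, MountEntry{f[0], f[1], f[2], f[3]})
	}
	return out
}

func main() {
	// On a real node: data, _ := os.ReadFile("/proc/mounts")
	sample := `JuiceFS:juicefs-volume1 /var/lib/juicefs/volume/juicefs-volume1-pv fuse.juicefs rw,relatime,user_id=0,group_id=0,default_permissions,allow_other 0 0
JuiceFS:juicefs-volume1 /var/lib/k8s/kubelet/pods/<pod-id>/volumes/kubernetes.io~csi/juicefs-volume1-pv/mount fuse.juicefs rw,relatime,user_id=0,group_id=0,default_permissions,allow_other 0 0
proc /proc proc rw,nosuid,nodev,noexec,relatime 0 0`
	for _, m := range juicefsMounts(sample) {
		fmt.Println(m.Source, "->", m.Target)
	}
}
```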

Once this client pod is running, every read or write by the business pod (which does not exist yet) goes through the FUSE module and is forwarded to the user-space JuiceFS client for processing. The JuiceFS client implements the corresponding read and write methods for different object stores.

Step 8: The kubelet creates a business pod

Now all volumes required by the pod have been processed, and the kubelet logs:

# kubelet.INFO

10:06:06.119 All volumes are attached and mounted for pod "pod1(<pod1-id>)"

It can then proceed to create the business pod:

# kubelet.INFO

10:06:06.119 No sandbox for pod "pod1(<pod1-id>)" can be found. Need to start a new one

10:06:06.119 Creating PodSandbox for pod "pod1(<pod1-id>)"

10:06:06.849 Created PodSandbox "885c3a" for pod "pod1(<pod1-id>)"

...

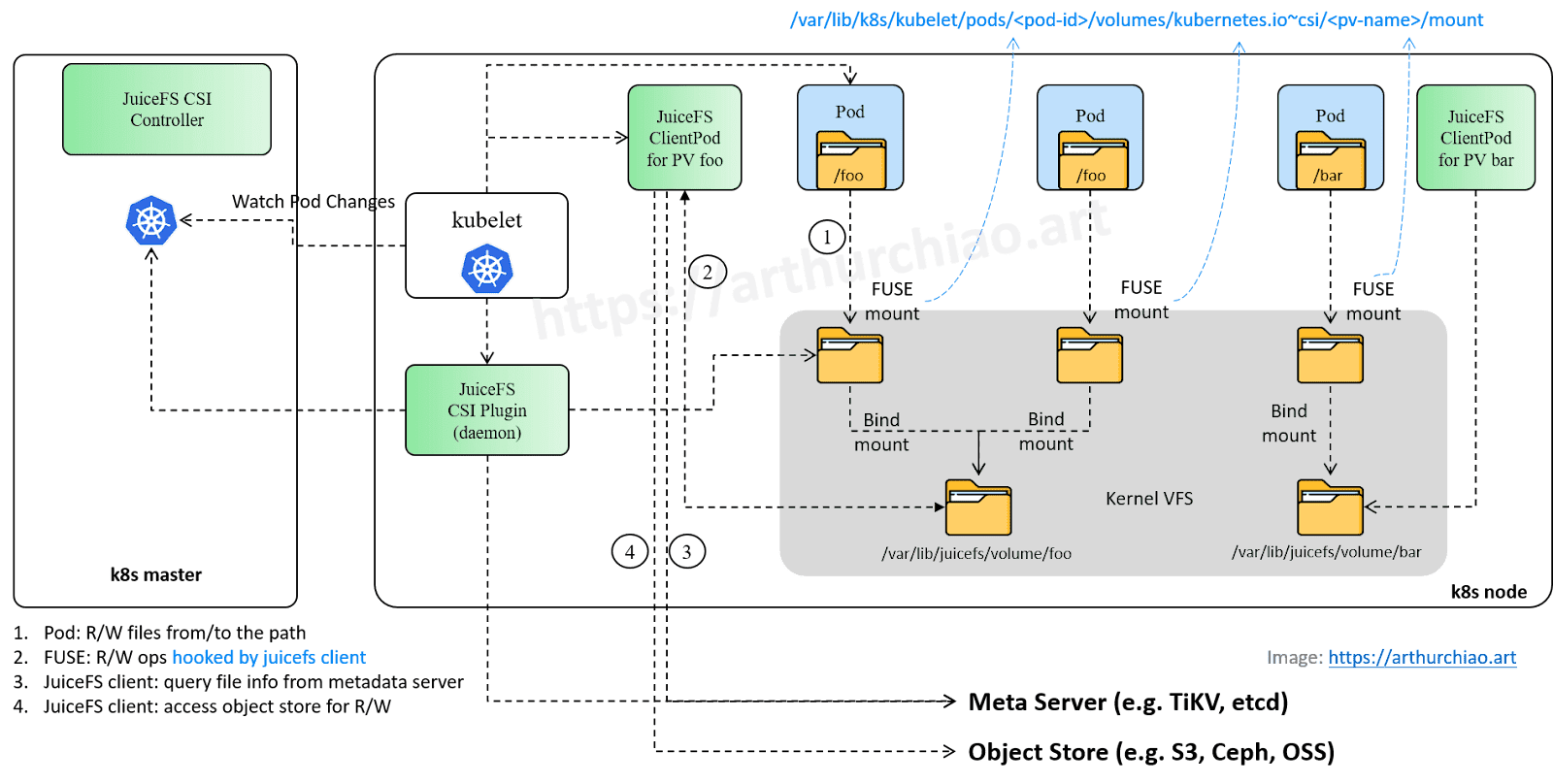

How the business pod reads and writes to JuiceFS volume

The JuiceFS dynamic client pod is created before the business pod, allowing the business pod to read and write to the JuiceFS PV (volume) immediately upon creation.

This process can be divided into four steps.

Step 1: The pod reads/writes to files

For example, inside the pod, change to the volume path (such as cd /data/juicefs-pv-dir/) and run commands like ls and find.

Step 2: The FUSE module intercepts R/W requests and forwards them to the JuiceFS client

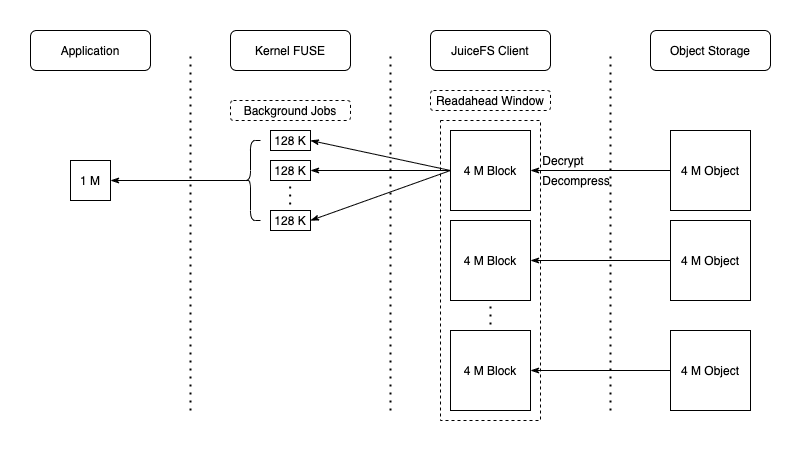

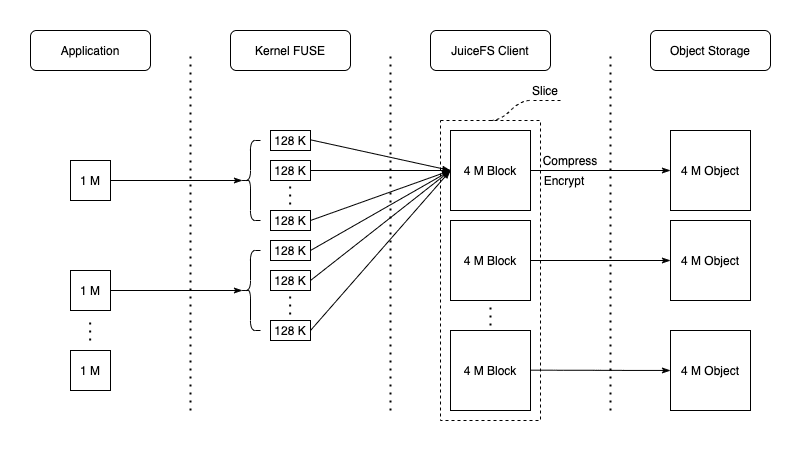

Here are two diagrams for illustration: Read operations:

Write operations:

Step 3: The JuiceFS client pod reads metadata from Meta Server

The figures above reveal part of JuiceFS' metadata design, such as chunks, slices, and blocks. During reads and writes, the client queries and updates the related metadata on the Meta Server.
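The chunk and block layout turns locating data into arithmetic. The Go sketch below assumes JuiceFS's documented sizes (chunks are a fixed 64 MiB, and blocks default to at most 4 MiB, configurable at format time) and, as a simplification, a chunk written as a single slice from its start; real files can have overlapping slices, which the client resolves using the metadata. locate is a hypothetical helper.

```go
package main

import "fmt"

const (
	chunkSize = 64 << 20 // 64 MiB fixed chunk size (assumed, per JuiceFS docs)
	blockSize = 4 << 20  // 4 MiB default max block size (assumed)
)

// locate maps a file offset to (chunk index, offset in chunk, block index
// in chunk), assuming one slice covers the chunk from offset 0.
func locate(off int64) (chunk, inChunk, block int64) {
	chunk = off / chunkSize
	inChunk = off % chunkSize
	block = inChunk / blockSize
	return
}

func main() {
	for _, off := range []int64{0, 5 << 20, 100 << 20} {
		c, o, b := locate(off)
		fmt.Printf("offset %d MiB -> chunk %d, +%d MiB, block %d\n", off>>20, c, o>>20, b)
	}
}
```

For example, byte offset 100 MiB falls into chunk 1 (the second 64 MiB range), 36 MiB into that chunk, which is block 9 of the chunk.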

Step 4: The JuiceFS client pod reads/writes to files from object store

In this step, files are read from and written to an object store like S3.
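Each block ends up as a separate object in the bucket. As an illustration, the Go sketch below builds an object key following the layout described in the JuiceFS Community Edition documentation, <volume>/chunks/<id/1000/1000>/<id/1000>/<sliceID>_<blockIndex>_<blockSize>. Treat this layout as an assumption: the build used in this article may differ, and blockKey is a hypothetical helper.

```go
package main

import "fmt"

// blockKey builds the object key for one block of a slice (assumed layout):
//   <volume>/chunks/<id/1000/1000>/<id/1000>/<id>_<index>_<size>
func blockKey(volume string, sliceID uint64, index, size int) string {
	return fmt.Sprintf("%s/chunks/%d/%d/%d_%d_%d",
		volume, sliceID/1000/1000, sliceID/1000, sliceID, index, size)
}

func main() {
	// A full 4 MiB first block of slice 1234567 in volume juicefs-volume1.
	fmt.Println(blockKey("juicefs-volume1", 1234567, 0, 4<<20))
}
```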

Summary

This article outlined the process of creating a pod with a PV when JuiceFS serves as the K8s CSI plugin, and how the pod reads and writes the PV. Many details have been omitted due to space constraints. If you have any questions about this article, feel free to join the JuiceFS discussions on GitHub and the community on Slack.