TL;DR:

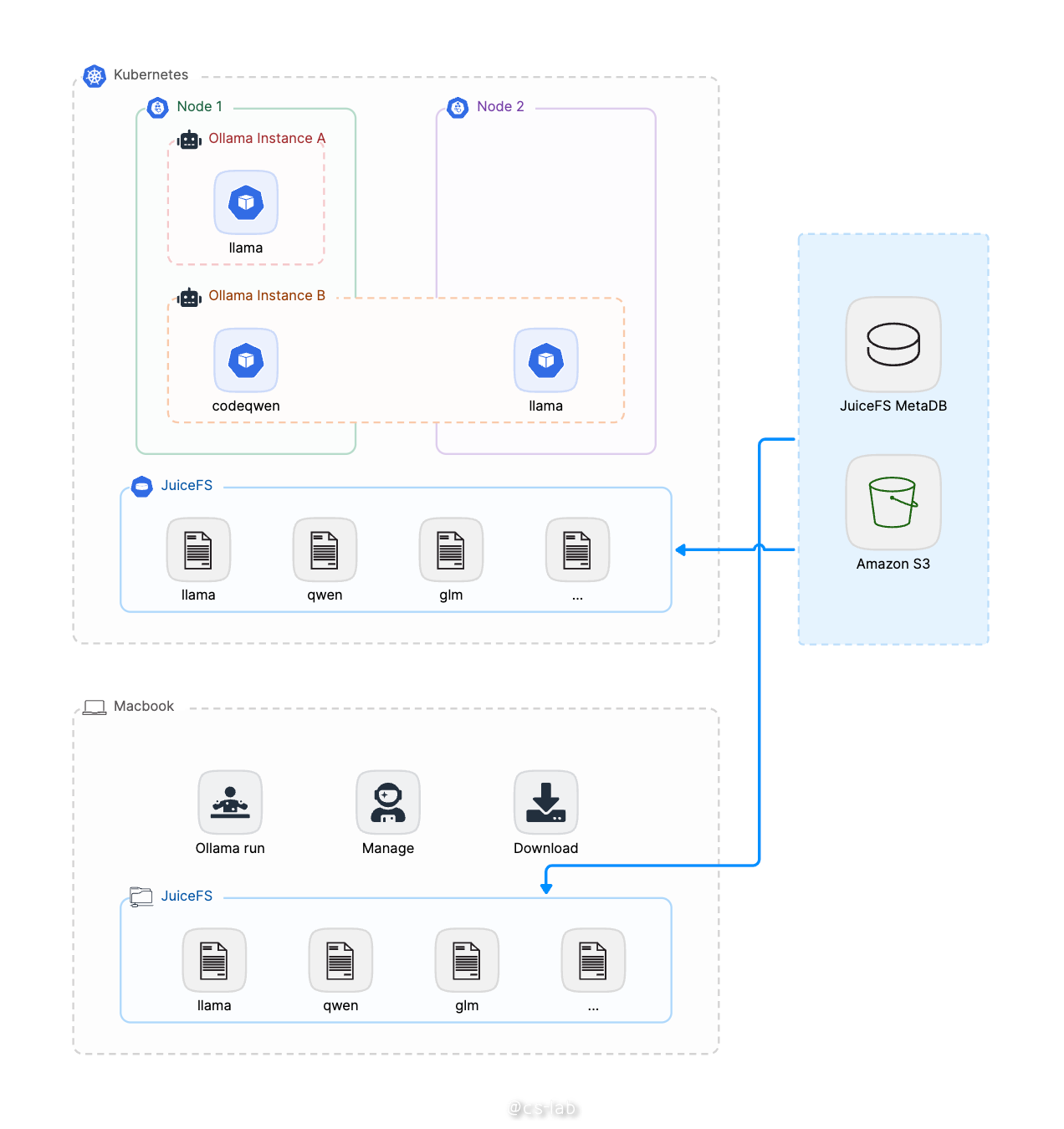

When loading large language models with Ollama, the author experimented with JuiceFS for model sharing. JuiceFS' data warm-up and distributed caching features significantly improved loading efficiency and eased performance bottlenecks.

With the development of AI technology, foundation models are gradually influencing our daily lives. Commercial large language models (LLMs) often feel distant to users because of their cost, black-box nature, and data security concerns. In response, more and more LLMs are being released as open source, letting users run their own models more easily and securely.

Ollama is a tool that simplifies the deployment and execution of LLMs. On one hand, it provides a Docker-like experience, where running a model instance is as simple as starting a container. On the other hand, it offers an OpenAI-compatible API that smooths out the differences between various models.

To avoid “artificial stupidity,” we tend to choose larger models. But while larger models such as Llama 3.1 70B, which is about 40 GB, deliver better results, their size makes the model files hard to manage. There are generally two approaches: model artifacting and shared storage.

- Model artifacting packages the LLM into deployable artifacts, such as Docker images or OS snapshots, and relies on IaaS or PaaS for version management and distribution. It is akin to a hot start: the model is already on-site when the instance is ready. The challenge lies in distributing such large files; despite advances in software engineering, options for distributing very large artifacts remain limited.

- Shared storage is akin to a cold start. The model files live on a remote system and must be loaded at runtime. Although this approach is intuitive, it depends heavily on the performance of the shared storage itself, which can become a bottleneck during loading.

However, if shared storage supports features like data warm-up and distributed caching, the situation improves significantly. JuiceFS is an example of such a project, combining shared storage with these hot start techniques.

This article walks through a demo that uses JuiceFS’ distributed file system capabilities to achieve "pull once, run anywhere" for Ollama model files.

Pull once

This section uses a Linux server as an example to demonstrate how to pull a model.

Prepare the JuiceFS file system

By default, Ollama stores its data in ~/.ollama of the user running it, which is /root/.ollama in this demo. To integrate JuiceFS, mount it at /root/.ollama:

$ juicefs mount weiwei /root/.ollama --subdir=ollama

This ensures that the models pulled by Ollama are stored within the JuiceFS file system.
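If the mount is missing, Ollama silently writes the models to local disk instead. A quick pre-flight check helps here. The following is a minimal Python sketch for a Linux host that reads the mounts table and reports which mount actually serves a path. The function name is hypothetical. The sketch also assumes that a JuiceFS FUSE mount shows up with a file system type beginning with `fuse.juicefs`; verify this on your own system.

```python
import os
from typing import Optional, Tuple

def find_mount(path: str, mounts_file: str = "/proc/mounts") -> Optional[Tuple[str, str, str]]:
    """Return (source, mount point, fs type) of the mount that contains `path`.

    Each line of a Linux mounts table reads: source mountpoint fstype options dump pass.
    """
    path = os.path.realpath(path)
    best = None
    with open(mounts_file) as f:
        for line in f:
            fields = line.split()
            if len(fields) < 3:
                continue
            source, mountpoint, fstype = fields[:3]
            # A mount contains `path` if it is the path itself or one of its ancestors.
            inside = path == mountpoint or path.startswith(mountpoint.rstrip("/") + "/")
            # The deepest mount point wins; on ties, the later (stacked) entry wins.
            if inside and (best is None or len(mountpoint) >= len(best[1])):
                best = (source, mountpoint, fstype)
    return best
```

For example, after mounting, `find_mount("/root/.ollama")` should return a tuple whose third element is the JuiceFS file system type rather than that of the root disk (for example `ext4`).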

Pull the model

Install Ollama:

curl -fsSL https://ollama.com/install.sh | sh

Pull the model, for example, Llama 3.1 8B:

$ ollama pull llama3.1
pulling manifest
pulling 8eeb52dfb3bb... 100% ▕████████████████████▏ 4.7 GB
pulling 11ce4ee3e170... 100% ▕████████████████████▏ 1.7 KB
pulling 0ba8f0e314b4... 100% ▕████████████████████▏ 12 KB
pulling 56bb8bd477a5... 100% ▕████████████████████▏ 96 B
pulling 1a4c3c319823... 100% ▕████████████████████▏ 485 B
verifying sha256 digest
writing manifest
removing any unused layers
success
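The pull ends with "verifying sha256 digest". Ollama stores blobs content-addressed under models/blobs, each named sha256-<digest>, as the file listing in the Mac section below shows. Because of this, any machine that mounts the shared volume can re-check the files it is about to load. Below is a minimal Python sketch based on that naming scheme; the function name is hypothetical. It reads every blob in full, so expect it to take a while for multi-gigabyte weights.

```python
import hashlib
import os
import re

# Completed blobs are named "sha256-" followed by the 64-hex-digit digest.
BLOB_NAME = re.compile(r"^sha256-([0-9a-f]{64})$")

def verify_blobs(blobs_dir: str) -> list:
    """Return names of blobs whose SHA-256 does not match the digest in their filename."""
    mismatched = []
    for name in sorted(os.listdir(blobs_dir)):
        match = BLOB_NAME.match(name)
        if match is None:
            continue  # skip partial downloads and unrelated files
        digest = hashlib.sha256()
        with open(os.path.join(blobs_dir, name), "rb") as f:
            # Hash in 8 MiB chunks so multi-gigabyte weights never sit fully in memory.
            for chunk in iter(lambda: f.read(8 << 20), b""):
                digest.update(chunk)
        if digest.hexdigest() != match.group(1):
            mismatched.append(name)
    return mismatched
```

An empty list from `verify_blobs("/root/.ollama/models/blobs")` means every complete blob matches its name.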

Next, create your own model from a Modelfile, which works much like a Dockerfile. This one is based on Llama 3.1, sets the temperature, and adds a system prompt:

$ cat <<EOF > Modelfile
FROM llama3.1
# set the temperature to 1 [higher is more creative, lower is more coherent]
PARAMETER temperature 1
# set the system message
SYSTEM """
You are a literary writer named Rora. Please help me polish my writing.
"""
EOF
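If you maintain several model variants, generating the Modelfile can be easier than hand-editing heredocs. The following minimal Python sketch emits only the FROM, PARAMETER, and SYSTEM instructions used above. The function name is hypothetical, and the sketch does not escape a system prompt that itself contains triple quotes.

```python
def render_modelfile(base: str, system: str, **params) -> str:
    """Build Modelfile text: a FROM line, one PARAMETER line per option, and a SYSTEM block."""
    lines = [f"FROM {base}"]
    for key, value in params.items():
        lines.append(f"PARAMETER {key} {value}")
    # Triple quotes let the system prompt span multiple lines.
    lines += ['SYSTEM """', system, '"""']
    return "\n".join(lines) + "\n"
```

For example, `render_modelfile("llama3.1", "You are a literary writer named Rora. Please help me polish my writing.", temperature=1)` reproduces the Modelfile above without its comments. You can then write the result to ./Modelfile and pass it to `ollama create`.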

$ ollama create writer -f ./Modelfile
transferring model data
using existing layer sha256:8eeb52dfb3bb9aefdf9d1ef24b3bdbcfbe82238798c4b918278320b6fcef18fe
using existing layer sha256:11ce4ee3e170f6adebac9a991c22e22ab3f8530e154ee669954c4bc73061c258
using existing layer sha256:0ba8f0e314b4264dfd19df045cde9d4c394a52474bf92ed6a3de22a4ca31a177
creating new layer sha256:1dfe258ba02ecec9bf76292743b48c2ce90aefe288c9564c92d135332df6e514
creating new layer sha256:7fa4d1c192726882c2c46a2ffd5af3caddd99e96404e81b3cf2a41de36e25991
creating new layer sha256:ddb2d799341563f3da053b0da259d18d8b00b2f8c5951e7c5e192f9ead7d97ad
writing manifest
success

Check the model list:

$ ollama list
NAME               ID              SIZE      MODIFIED
writer:latest      346a60dbd7d4    4.7 GB    17 minutes ago
llama3.1:latest    91ab477bec9d    4.7 GB    4 hours ago
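This listing comes from what is stored on disk, so any host that mounts the volume sees the same models. The sketch below walks the manifests directory directly and sums each model's layer sizes, which should roughly correspond to the SIZE column. It assumes Ollama's on-disk layout: models/manifests/<registry>/<namespace>/<model>/<tag>, where each tag file is a JSON manifest with a "layers" list carrying "size" fields. It is an illustration, not part of Ollama. Shared layers are counted once per model, which is also why writer and llama3.1 both report 4.7 GB, even though `ollama create` reused the existing weight layer rather than storing a second copy.

```python
import json
import os

def list_models(models_dir: str) -> dict:
    """Map "model:tag" to the summed layer sizes (bytes) found in its manifest.

    Assumes the layout models/manifests/<registry>/<namespace>/<model>/<tag>,
    where each tag file is a JSON manifest with a "layers" list.
    """
    models = {}
    for dirpath, _dirnames, filenames in os.walk(os.path.join(models_dir, "manifests")):
        for tag in filenames:
            with open(os.path.join(dirpath, tag)) as f:
                manifest = json.load(f)
            model = os.path.basename(dirpath)  # the directory name is the model name
            size = sum(layer.get("size", 0) for layer in manifest.get("layers", []))
            models[f"{model}:{tag}"] = size
    return models
```

For example, `list_models("/root/.ollama/models")` should include both writer:latest and llama3.1:latest.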

Run anywhere

Once the JuiceFS file system holds the pulled models, you can mount it on other machines and run the models directly. Below are examples for Linux, Mac, and Kubernetes.

Linux

On another Linux server with Ollama installed and the same JuiceFS volume mounted at /root/.ollama, you can run the model directly:

$ ollama run writer

>>> The flower is beautiful

A lovely start, but let's see if we can't coax out a bit more poetry from your words. How about this:

"The flower unfolded its petals like a gentle whisper, its beauty an unassuming serenade that drew the eye and stirred the soul."

Or, perhaps a slightly more concise version:

"In the flower's delicate face, I find a beauty that soothes the senses and whispers secrets to the heart."

Your turn! What inspired you to write about the flower?

Mac

Mount JuiceFS:

weiwei@hdls-mbp ~ juicefs mount weiwei .ollama --subdir=ollama
.OK, weiwei is ready at /Users/weiwei/.ollama.

Install Ollama for Mac from https://ollama.com/download.

Note: Since the model was pulled as root, switch to root to run Ollama.

The custom writer model needs one extra step. Ollama writes the layers of a newly created model with permission 600, and they must be changed to 644 before the model can run on the Mac. This is a bug in Ollama. I’ve submitted a pull request that has been merged, but no release includes it yet. Until then, the workaround is:

hdls-mbp:~ root# cd /Users/weiwei/.ollama/models/blobs
hdls-mbp:blobs root# ls -alh . | grep rw-------
-rw------- 1 root wheel 14B 8 15 23:04 sha256-804a1f079a1166190d674bcfb0fa42270ec57a4413346d20c5eb22b26762d132
-rw------- 1 root wheel 559B 8 15 23:04 sha256-db7eed3b8121ac22a30870611ade28097c62918b8a4765d15e6170ec8608e507
hdls-mbp:blobs root# chmod 644 sha256-804a1f079a1166190d674bcfb0fa42270ec57a4413346d20c5eb22b26762d132 sha256-db7eed3b8121ac22a30870611ade28097c62918b8a4765d15e6170ec8608e507
hdls-mbp:blobs root# ollama list
NAME               ID              SIZE      MODIFIED
writer:latest      346a60dbd7d4    4.7 GB    About an hour ago
llama3.1:latest    91ab477bec9d    4.7 GB    4 hours ago
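If several custom models need this workaround, the chmod above can be scripted. The following minimal Python sketch is meant to run as root against the blobs directory. It changes only regular files whose mode is exactly 600 to 644, mirroring the manual steps; the function name is hypothetical.

```python
import os
import stat

def fix_blob_permissions(blobs_dir: str) -> list:
    """chmod 644 every regular file in blobs_dir whose permission bits are exactly 600."""
    fixed = []
    for name in sorted(os.listdir(blobs_dir)):
        path = os.path.join(blobs_dir, name)
        st = os.stat(path)
        if stat.S_ISREG(st.st_mode) and stat.S_IMODE(st.st_mode) == 0o600:
            os.chmod(path, 0o644)  # add read permission for group and others
            fixed.append(name)
    return fixed
```

For example, `fix_blob_permissions("/Users/weiwei/.ollama/models/blobs")` returns the names it changed, which in this demo would be the two blobs listed above.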

Run the writer model and let it polish the text:

hdls-mbp:weiwei root# ollama run writer

>>> The tree is very tall

A great start, but let's see if we can make it even more vivid and engaging.

Here's a revised version:

"The tree stood sentinel, its towering presence stretching towards the sky like a verdant giant, its branches dancing in the breeze with an elegance that seemed almost otherworldly."

Or, if you'd prefer something simpler yet still evocative, how about this:

"The tree loomed tall and green, its trunk sturdy as a stone pillar, its leaves a soft susurrus of sound in the gentle wind."

Which one resonates with you? Or do you have any specific ideas or feelings you want to convey through your writing that I can help shape into a compelling phrase?

Kubernetes

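The JuiceFS CSI Driver lets you use JuiceFS as Persistent Volumes (PVs) in Kubernetes. It supports both static and dynamic provisioning. Since we’re using files that already exist in the file system, we use static provisioning here.

Prepare the PV and PVC. The PV references a Secret named ollama-vol in the kube-system namespace (nodePublishSecretRef), which must hold the JuiceFS volume credentials. Create it beforehand as described in the JuiceFS CSI Driver documentation: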

apiVersion: v1
kind: PersistentVolume
metadata:
  name: ollama-vol
  labels:
    juicefs-name: ollama-vol
spec:
  capacity:
    storage: 10Pi
  volumeMode: Filesystem
  accessModes:
    - ReadWriteMany
  persistentVolumeReclaimPolicy: Retain
  csi:
    driver: csi.juicefs.com
    volumeHandle: ollama-vol
    fsType: juicefs
    nodePublishSecretRef:
      name: ollama-vol
      namespace: kube-system
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: ollama-vol
  namespace: default
spec:
  accessModes:
    - ReadWriteMany
  volumeMode: Filesystem
  storageClassName: ""  # bind to the static PV above instead of a default StorageClass
  resources:
    requests:
      storage: 10Gi
  selector:
    matchLabels:
      juicefs-name: ollama-vol

Deploy Ollama:

apiVersion: apps/v1
kind: Deployment
metadata:
  name: ollama
  labels:
    app: ollama
spec:
  replicas: 1
  selector:
    matchLabels:
      app: ollama
  template:
    metadata:
      labels:
        app: ollama
    spec:
      containers:
        - image: registry.cn-hangzhou.aliyuncs.com/hdls/ollama:0.3.5
          env:
            - name: OLLAMA_HOST
              value: "0.0.0.0"
          ports:
            - name: ollama
              containerPort: 11434
          args:
            - "serve"
          name: ollama
          volumeMounts:
            - mountPath: /root/.ollama
              name: shared-data
              subPath: ollama
      volumes:
        - name: shared-data
          persistentVolumeClaim:
            claimName: ollama-vol
---
apiVersion: v1
kind: Service
metadata:
  name: ollama-svc
spec:
  selector:
    app: ollama
  ports:
    - name: http
      protocol: TCP
      port: 11434
      targetPort: 11434

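The Deployment runs an Ollama server, so you can call its API directly. In this demo, the server is reachable at 192.168.203.37; replace that with the address where your own Ollama service is reachable: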

$ curl http://192.168.203.37:11434/api/generate -d '{
  "model": "writer",
  "prompt": "The sky is blue",
  "stream": false
}'

{"model":"writer","created_at":"2024-08-15T14:35:43.593740142Z","response":"A starting point, at least! Let's see... How about we add some depth to this sentence? Here are a few suggestions:\n\n* Instead of simply stating that the sky is blue, why not describe how it makes you feel? For example: \"As I stepped outside, the cerulean sky seemed to stretch out before me like an endless canvas, its vibrant hue lifting my spirits and washing away the weight of the world.\"\n* Or, we could add some sensory details to bring the scene to life. Here's an example: \"The morning sun had just risen over the horizon, casting a warm glow across the blue sky that seemed to pulse with a gentle light – a softness that soothed my skin and lulled me into its tranquil rhythm.\"\n* If you're going for something more poetic, we could try to tap into the symbolic meaning of the sky's color. For example: \"The blue sky above was like an open door, inviting me to step through and confront the dreams I'd been too afraid to chase – a reminder that the possibilities are endless, as long as we have the courage to reach for them.\"\n\nWhich direction would you like to take this?","done":true,"done_reason":"stop","context":[128006,9125,128007,1432,2675,527,264,32465,7061,7086,432,6347,13,5321,1520,757,45129,856,4477,627,128009,128006,882,128007,271,791,13180,374,6437,128009,128006,78191,128007,271,32,6041,1486,11,520,3325,0,6914,596,1518,1131,2650,922,584,923,1063,8149,311,420,11914,30,5810,527,264,2478,18726,1473,9,12361,315,5042,28898,430,279,13180,374,6437,11,3249,539,7664,1268,433,3727,499,2733,30,1789,3187,25,330,2170,358,25319,4994,11,279,10362,1130,276,13180,9508,311,14841,704,1603,757,1093,459,26762,10247,11,1202,34076,40140,33510,856,31739,323,28786,3201,279,4785,315,279,1917,10246,9,2582,11,584,1436,923,1063,49069,3649,311,4546,279,6237,311,2324,13,5810,596,459,3187,25,330,791,6693,7160,1047,1120,41482,927,279,35174,11,25146,264,8369,37066,4028,279,6437,13180,430,9508,311,28334,449,264,22443,3177,1389,264,8579,2136,430,779,8942,291,856,6930,323,69163,839,757,1139,1202,68040,37390,10246,9,1442,499,2351,2133,369,2555,810,76534,11,584,1436,1456,311,15596,1139,279,36396,7438,315,279,13180,596,1933,13,1789,3187,25,330,791,6437,13180,3485,574,1093,459,1825,6134,11,42292,757,311,3094,1555,323,17302,279,19226,358,4265,1027,2288,16984,311,33586,1389,264,27626,430,279,24525,527,26762,11,439,1317,439,584,617,279,25775,311,5662,369,1124,2266,23956,5216,1053,499,1093,311,1935,420,30],"total_duration":13635238079,"load_duration":39933548,"prompt_eval_count":35,"prompt_eval_duration":55817000,"eval_count":240,"eval_duration":13538816000}
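The same call can be made from Python with only the standard library. Ollama reports the duration fields in nanoseconds, so generation speed is eval_count divided by eval_duration converted to seconds. For the response above, that is 240 tokens in about 13.54 s, or roughly 17.7 tokens per second, with a load_duration of about 0.04 s. In the minimal sketch below, the default address is the demo value from the curl example, and the helper names are hypothetical.

```python
import json
import urllib.request

# Demo address from the curl example above; replace it with your own endpoint.
OLLAMA_URL = "http://192.168.203.37:11434"

def generate(prompt: str, model: str = "writer", base_url: str = OLLAMA_URL) -> dict:
    """Call /api/generate with streaming disabled and return the decoded JSON reply."""
    payload = json.dumps({"model": model, "prompt": prompt, "stream": False}).encode("utf-8")
    request = urllib.request.Request(
        base_url + "/api/generate",
        data=payload,  # supplying a body makes urllib send a POST
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request) as response:
        return json.load(response)

def summarize(result: dict) -> dict:
    """Convert the nanosecond timing fields into seconds and tokens per second."""
    return {
        "load_s": result["load_duration"] / 1e9,
        "total_s": result["total_duration"] / 1e9,
        "tokens_per_s": result["eval_count"] / (result["eval_duration"] / 1e9),
    }
```

For example, `summarize(generate("The sky is blue"))` returns the load time, total time, and generation speed for one request. Comparing load_s across hosts is a simple way to see how quickly each one reads the model from shared storage.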

Summary

Ollama is a tool that simplifies running LLMs locally. It allows you to pull models to your local machine and run them with simple commands. JuiceFS can serve as the underlying storage for a model registry. Its distributed nature enables users to pull a model once and use it in various locations, achieving the goal of "pull once, run anywhere."

If you have any questions about this article, feel free to join the JuiceFS discussions on GitHub and our community on Slack.