As a developer-oriented AI platform, Lepton AI delivers scalable infrastructure that simplifies AI workflows. Our GPU-optimized architecture enables seamless deployment of inference services and training tasks, dynamically scaling resources to meet fluctuating demands.

When it comes to storage infrastructure, we sought a suitable vendor to ensure efficient product development. JuiceFS, an open-source high-performance distributed file system, proved to be an excellent fit for our needs. Using JuiceFS' high-performance caching mechanism, we significantly accelerated file operations and minimized latency issues caused by object storage. Compared to the previously used Amazon Elastic File System (EFS), our storage costs have been reduced by 96.7% to 98%.

JuiceFS now supports on-demand reading and caching of existing data stored in object storage, eliminating the need to pre-copy customer data. This enhancement has allowed us to efficiently manage billions of files while consistently delivering high throughput and low latency.

In this post, we’ll deep dive into our storage requirements and architectural design, discuss why we chose JuiceFS over Amazon EFS, and share best practices for using JuiceFS in K8s.

Storage requirements of the Lepton AI platform

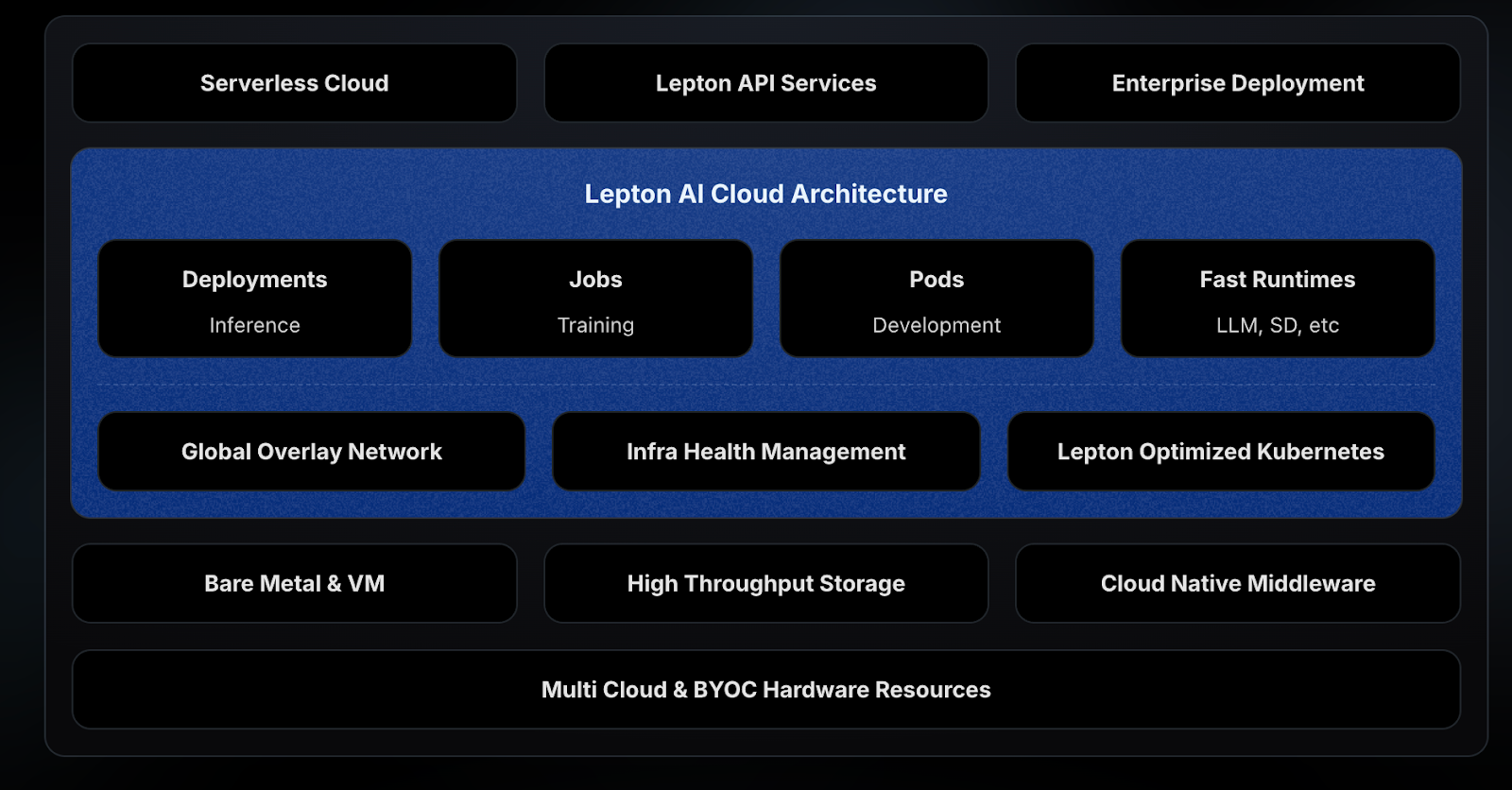

The figure below shows the Lepton AI platform architecture:

The user data on our platform has three categories:

- Training data: This includes large volumes of images that are primarily read-only. During training, a subset of this data is read for model training.

- Snapshot data: Users save intermediate results during training to resume progress in case of failures. For example, if a GPU failure occurs, users can recover from the latest snapshot and continue training. Snapshot data is typically write-heavy, with minimal read operations.

- Trained model data: This data is primarily read after the training is completed. Once the write operation is finished, it’s rarely modified. As a result, this type of data involves mostly writes, frequent reads, and minimal modifications.

These requirements pose the following challenges for the storage system:

- Handling unstable GPUs: Our customers primarily use H100 GPUs, which are prone to instability. If a GPU fails, the entire machine must be taken offline for repairs, such as replacing the GPU card or other related components. During repairs, the machine cannot access its disk, unlike traditional distributed storage systems. This creates challenges with data movement and backup, increasing the risk of data loss.

- Data migration: Many customers require large-scale data migration to our system. However, directly copying massive datasets, especially when involving billions of small files, is impractical. For example, a customer once had billions of files, and the copying process was too slow. Since this lengthy migration deters customers from trying the platform, most prefer to test the platform first before committing to a full migration.

- Multi-tenancy: In a multi-tenant environment, customers' GPUs are distributed across different locations and data centers, such as US East, US West, and Europe. This geographical spread increases latency and complicates multi-tenant management, including data isolation, access control, and latency issues.

- Performance with billions of small files: Storing and accessing billions of small files can severely impact read and write performance. Since each small file has a short access time, the storage system needs to handle a massive number of file operations. This greatly reduces overall read and write efficiency. Therefore, efficiently managing the storage and access of billions of small files has become a major challenge in system design.

Storage architecture design and selection

Storage architecture design

To address these challenges, our ideal storage architecture includes the following:

- Cloud storage with local caching: Data is stored in the cloud, and local disks are used as distributed caches. This prevents GPU failures from causing disk inaccessibility, enhancing system stability and performance.

- Support for multi-tenancy: Separate file systems are created for each customer, allowing performance tuning and simplified security management within each system. Each file system is isolated, ensuring robust security.

- Enhanced read performance: Local disks serve as distributed caches to boost read performance.

- POSIX. API compatibility: The storage must support POSIX APIs, enabling users to use existing code without modifications for data access on our platform.

After evaluating open-source systems and service providers, we found JuiceFS to be a perfect match for our requirements. JuiceFS demonstrated stable performance and cost-effectiveness.

Cost comparison: Amazon EFS vs. JuiceFS

Initially, we used Amazon EFS and compared it to JuiceFS in terms of cost. JuiceFS stores data in object storage, which is approximately one-tenth the price of EFS.

For data transfer, EFS incurs fixed internal fees within AWS, with additional charges for data exiting AWS. However, as our GPUs are primarily located in external rented data centers rather than AWS, EFS’ data transfer cost became very high.

JuiceFS allows us to choose backend object storage providers like Backblaze and Cloudflare, which do not charge for data transfers. This choice reduced our overall cost by 96.7% to 98% compared to EFS.

Best practices for using JuiceFS in K8s

Distributed Cache: CSI vs. hostPath

JuiceFS supports both CSI and hostPath configurations. To address the distributed cache issue, if CSI is used, the client runs within a Kubernetes Pod, and when the Pod's lifecycle ends, the cached data will no longer be accessible. Therefore, we found that using ·hostPath· is more appropriate.

We mounted JuiceFS to a specific path on the GPU machines and set up distributed caching. This way, when Kubernetes starts a Pod, the Pod can directly mount that path and access the data in JuiceFS. At the same time, we also implemented isolation measures for the multi-tenant environment when starting the Pods.

(Editor's note: JuiceFS has developed the JuiceFS Operator, which allows for the independent deployment of a distributed caching cluster in a K8s cluster.)

Mounting JuiceFS on hosts

We created a custom resource definition (CRD) in Kubernetes called LeptonNodeGroup, representing a GPU cluster. Unlike the Kubernetes cluster, its functionality is smaller. For example, a customer rents 1,000 GPU cards on our platform, and these cards are located in the same data center. We create a separate Custom Resource (CR) for these 1,000 cards. It stores the configuration of these GPUs, including the relevant JuiceFS configuration.

We used a DaemonSet to mount JuiceFS. Each Pod in this DaemonSet runs in privileged mode and monitors the LeptonNodeGroup. When it detects a change in the JuiceFS configuration within the LeptonNodeGroup, the Pod uses the setns system call to enter the node's namespace and mounts JuiceFS via systemd. We’ve been running this setup for over a year, and the system has proven to be stable and easy to use, with overall good performance, except for a few minor issues.

Handling billions of small files

As mentioned earlier, we need to handle a large number of small files, and several clients have provided billions of small files. While we know that using larger files would be more efficient, customer requirements force us to handle these small files, and we cannot change the data format provided by the clients. After testing, we found that directly copying these files into JuiceFS would be nearly impossible to complete within a reasonable time frame.

JuiceFS provides an import feature that allows us to directly import data from the small files originally stored by clients on S3, and this process is very fast. However, for handling billions of files, this speed is still not fast enough. The process of importing file names from S3 might take a full day, although with some optimization, we’ve managed to reduce the time to about ten hours. Without any optimization, the entire process could take 3 to 5 days.

To address this issue, we’ve made efforts to deploy JuiceFS' metadata service in the same data center as our GPU machines to reduce latency. We spent some time resolving the instability issues with our GPU machines, as we couldn’t rely solely on CPU machines within our own cluster. As a result, we decided to lease machines from other vendors. By adjusting the network topology, we were eventually able to find suitable machines in the same data center. Although these machines are not connected via an internal network, their performance and latency are still very low, which meets our requirements.

In addition, after discussing with the JuiceFS team, they implemented a lazy load feature, which allows files to be used directly without first loading all the files and metadata during the import process. This feature was introduced only in the past few months. We haven’t conducted detailed testing yet, but our initial impressions are that it can significantly improve efficiency.

Performance

We‘ve been using JuiceFS for over a year, and the overall experience has been positive. Although we haven’t conducted detailed performance testing, customer feedback has been quite satisfactory. The GPU cluster we used for performance testing has 32 nodes, each with 8 H100 GPUs, for a total of 256 GPUs.

When reading data from a single machine, the performance reaches 2 GB/s. This performance is mainly limited by the machine’s bandwidth, which is 40 Gbps. However, we’re running some other tasks, so the actual speed is around 2 GB/s.

In terms of latency, the P99 latency is typically kept under 10 milliseconds, with an average latency of about 3 to 5 milliseconds. Accessing the metadata server adds about 2 milliseconds of latency. For training purposes, this latency is generally acceptable, and users can read data smoothly.

Regarding write performance, we can achieve 1 GB/s, but it can occasionally be constrained by the object storage latency and throughput limits.

Future plans

We plan to continue optimizing the storage process and further automate existing operations to support more multi-tenant users. Some processes have already been automated, but there are still manual operations. For example, creating buckets in object storage is currently done manually, while some steps in creating file systems in JuiceFS have already been automated. We need to further enhance our automation process.

After using JuiceFS, we’re facing challenges with the stability of distributed caching. Since GPU failures require machines to be taken offline for repairs, this leads to the need for data rebalancing. To avoid data rebalancing, we need to store data in multiple copies. However, storing multiple copies consumes more disk space and may sometimes lead to space shortages. We’re still exploring solutions to this issue.

If you have any questions for this article, feel free to join JuiceFS discussions on GitHub and community on Slack.