Recently, we conducted performance tests on JuiceFS clients for Windows in a cloud rendering scenario, scaling from 100 to 3,000 nodes. The test focused on pre-rendering tasks—executing all storage-related operations before the actual rendering process.

Compared to real-world usage, this test adopted more stringent conditions by utilizing datasets with a high volume of small files. The target was for 1,000 nodes to complete tasks within an average of 30 minutes. Previously, customers used Server Message Block (SMB) services, but performance degraded significantly beyond 2,000 nodes due to network bandwidth and load balancing limitations. In contrast, JuiceFS demonstrated stable performance even with 3,000 concurrent rendering tasks, achieving an average completion time of 22 minutes and 22 seconds.

The JuiceFS client for Windows (beta) is now integrated into Community Edition 1.3 and Enterprise Edition 5.2. For details on the optimization journey, see JuiceFS on Windows: Challenges in the Beta Release. We encourage users to test it and provide feedback for further improvements.

Use case and resource configuration

File size distribution in the rendering scenario

The test dataset contained 65,113 files, with 95.77% under 128 KB. Compared to routine rendering scenarios, this test specifically increased the proportion of small files to evaluate performance under more stringent conditions:

- ≤1K: 11,053 files

- 1K~4K: 1,804 files

- 4K~128K: 49,504 files

- 128K~1M: 1,675 files

- >1M: 1,077 files
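The 95.77% figure can be rechecked from the bucket counts listed above. The following is a minimal sketch; the dictionary keys and the function name `small_file_share` are illustrative choices, not part of any JuiceFS tooling.

```python
# Bucket counts copied from the list above; the labels are only dictionary keys.
FILE_COUNTS = {
    "<=1K": 11_053,
    "1K~4K": 1_804,
    "4K~128K": 49_504,
    "128K~1M": 1_675,
    ">1M": 1_077,
}

# Buckets whose files are counted as "under 128 KB" in the article.
SMALL_BUCKETS = ("<=1K", "1K~4K", "4K~128K")


def small_file_share(counts, small_buckets):
    """Return (total file count, percentage of files in the small buckets)."""
    total = sum(counts.values())
    small = sum(counts[name] for name in small_buckets)
    return total, 100.0 * small / total


total, share = small_file_share(FILE_COUNTS, SMALL_BUCKETS)
print(f"{total} files, {share:.2f}% under 128 KB")
# prints "65113 files, 95.77% under 128 KB"
```

The five buckets sum to the stated 65,113 files, and the three smallest buckets account for 62,361 of them.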

Hardware configuration:

| Component | CPU cores | Memory | Network | Disk storage |
|---|---|---|---|---|
| JuiceFS metadata nodes (×3) | 32 cores | 60 GB | 10 Gbps | 500 GB |
| JuiceFS cache nodes (×8) | 104 cores | 200 GB | 10 Gbps | 2 TB × 5 |
| Clients for Windows (×N) | 8 cores | 16 GB | 10 Gbps | 500 GB |

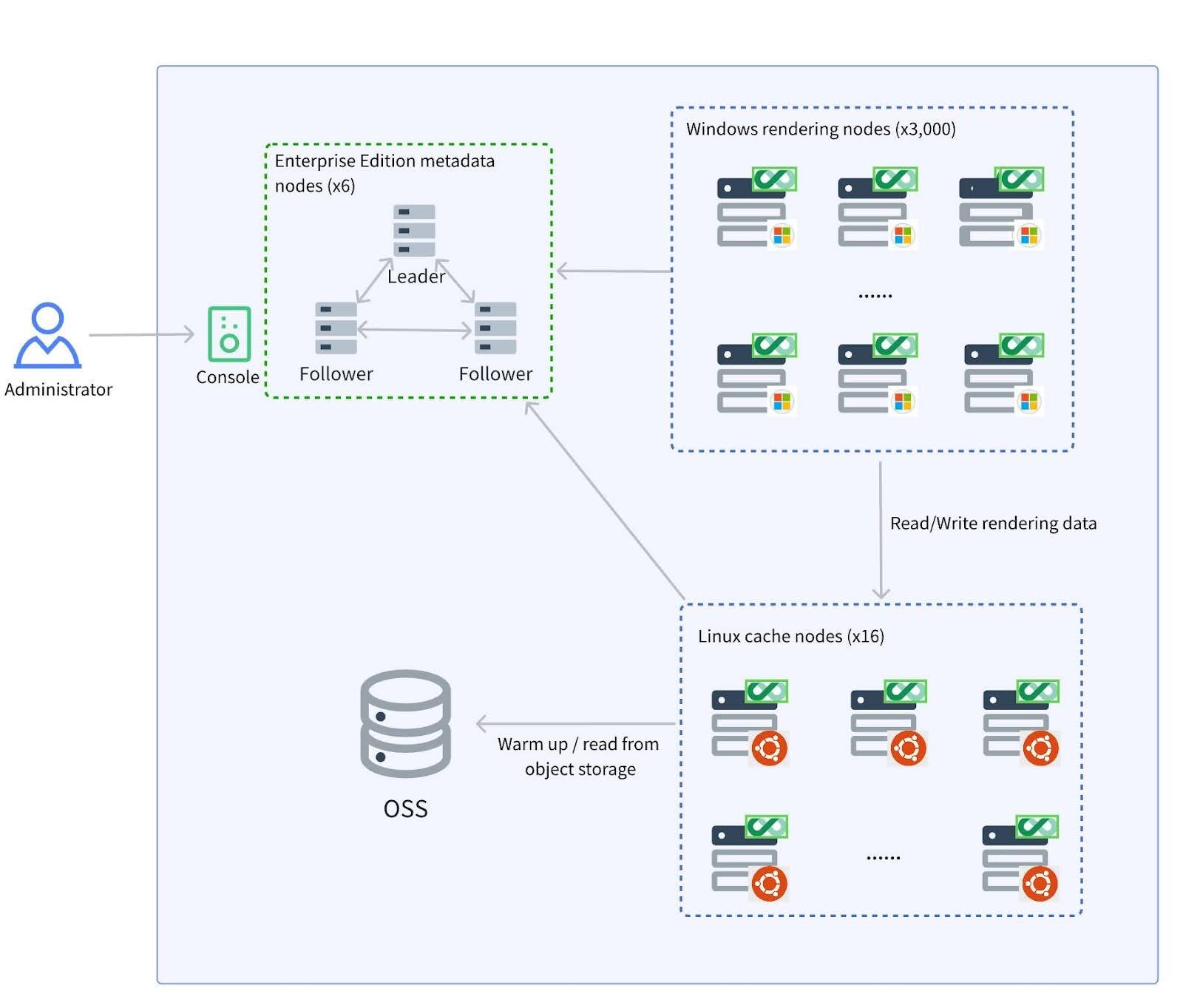

Deployment architecture

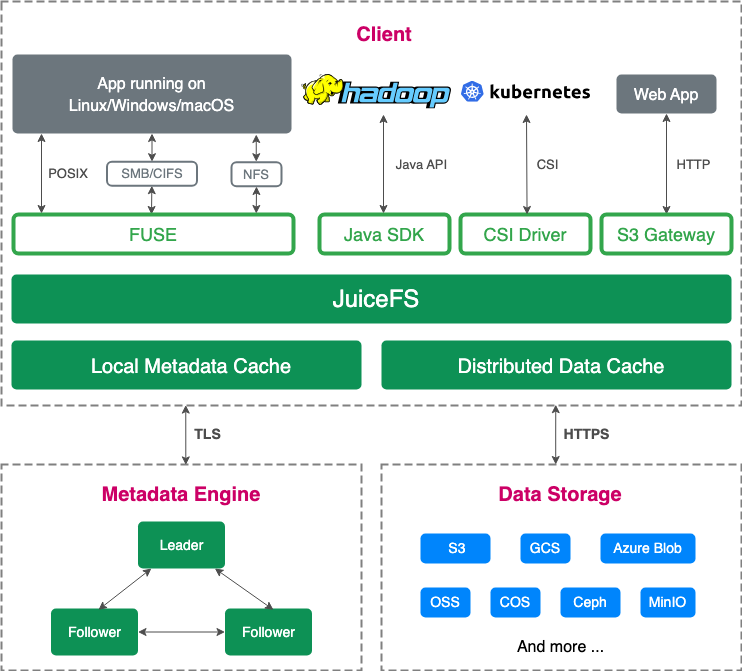

We used JuiceFS Enterprise Edition, which features a proprietary high-performance metadata engine and distributed caching (see: How JuiceFS Transformed Idle Resources into a 70 GB/s Cache Pool).

To achieve optimal performance, we preloaded the entire 35 GB sample dataset into the distributed cache. The figure above shows the deployment architecture.

JuiceFS mount parameter configuration

For cache servers, the mount parameters were set as follows:

    juicefs mount jfs /jfs-mem \
      --buffer-size=4096 \
      --cache-dir=/dev/shm \
      --cache-group=cache-mem \
      --cache-size=122400 \
      --free-space-ratio=0.02
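To put the cache settings in perspective, the sketch below estimates the aggregate capacity of the cache group. It rests on two assumptions that the article does not state: that `--cache-size` is expressed in MiB, and that the group's capacity is simply the sum over the eight cache nodes. The function name `total_cache_gib` is hypothetical.

```python
CACHE_NODES = 8            # number of cache nodes in the hardware table
CACHE_SIZE_MIB = 122_400   # --cache-size value; ASSUMED to be in MiB


def total_cache_gib(nodes, cache_size_mib):
    """Aggregate cache capacity in GiB, assuming capacity adds up across nodes."""
    return nodes * cache_size_mib / 1024


total_gib = total_cache_gib(CACHE_NODES, CACHE_SIZE_MIB)
print(f"Aggregate cache capacity: {total_gib:.2f} GiB")
# prints "Aggregate cache capacity: 956.25 GiB"
```

Under these assumptions, the in-memory cache group is far larger than the 35 GB sample dataset, which is consistent with preloading the whole dataset into the distributed cache.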

Mount parameters of JuiceFS clients for Windows:

    juicefs.exe mount jfs J: --buffer-size=4096 --cache-group=cache-mem --no-sharing --console-url http://console:8080 --enable-kernel-cache

Notes:

- --console-url: Specifies the console URL. Required for the initial mount or after creating a new file system.
- --enable-kernel-cache: Enables WinFsp kernel caching.
  - This is disabled by default, which results in poor performance when the workload issues a large number of random 512-byte reads.
  - Enabling this feature aggregates requests into 4 KB chunks.
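The effect of aggregating 512-byte reads into 4 KB chunks can be illustrated with a simplified model. This sketch is not how WinFsp implements its kernel cache. It only counts how many 4 KB-aligned chunks a set of reads touches, on the assumption that each chunk is fetched once and then served from cache. The function name `chunks_touched` is hypothetical.

```python
CHUNK = 4096  # 4 KB aggregation size mentioned in the notes above


def chunks_touched(reads, chunk=CHUNK):
    """Return the sorted indices of chunk-aligned blocks that cover the reads.

    `reads` is an iterable of (offset, length) pairs in bytes.
    """
    touched = set()
    for offset, length in reads:
        if length <= 0:
            continue  # an empty read touches no chunk
        first = offset // chunk
        last = (offset + length - 1) // chunk
        touched.update(range(first, last + 1))
    return sorted(touched)


# Eight 512-byte reads scattered inside the first 4 KB of a file.
small_reads = [(o, 512) for o in (3072, 0, 1536, 512, 3584, 1024, 2560, 2048)]
print(len(small_reads), "reads ->", len(chunks_touched(small_reads)), "chunk request")
# prints "8 reads -> 1 chunk request"
```

In this model, eight separate small requests collapse into a single chunk fetch, while a read that straddles a 4 KB boundary touches two chunks.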

Test results

With data stored in JuiceFS, the time required for pre-rendering storage read tasks across 100 to 3,000 remote clients for Windows is shown in the table below:

| Scale (nodes) | Completion time | Throughput | App IOPS | Metadata server requests (per sec) | Metadata client IOPS | Distributed cache IOPS | Distributed cache throughput |
|---|---|---|---|---|---|---|---|
| 100 | 10~17 min | 1.15 GB/s | 1.33M | 55K | 2.25M | 107K | 1.05 GB/s |
| 200 | 11~20 min | 1.74 GB/s | 2.05M | 85.7K | 3.46M | 177K | 1.66 GB/s |
| 300 | 11~18 min | 5.23 GB/s | 5.27M | 240K | 9.98M | 461K | 4.87 GB/s |
| 500 | 12~25 min | 6.86 GB/s | 7.65M | 1.17M | 17.5M | 532K | 6.33 GB/s |
| 750 | 13~27 min | 10.1 GB/s | 10.9M | 5.81M | 20.6M | 732K | 7.33 GB/s |
| 1,000 | 13~31 min | 12.1 GB/s | 14.9M | 6.92M | 28.6M | 877K | 10.33 GB/s |
| 3,000 (metadata and cache nodes scaled up) | 12~34 min (avg: 22 min) | 23.8 GB/s | 15.1M | 1.27M | 12.7M | 1.5M | 14.7 GB/s |

From the data above, we observe that:

- JuiceFS maintains high efficiency in pre-rendering storage tasks at all tested scales. As the number of clients grew from 100 to 1,000, aggregate throughput rose from 1.15 GB/s to 12.1 GB/s and application IOPS from 1.33M to 14.9M, while completion times stayed between 10 and 31 minutes.

- At 3,000 clients, the measured figures were:

  - 1.27 million metadata requests per second
  - 14.7 GB/s distributed cache throughput
  - 23.8 GB/s aggregated client throughput
  - Average task completion time: 22 minutes 22 seconds
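One way to read the scaling behavior is to divide the aggregate throughput by the number of clients. The sketch below does this for each row of the results table, assuming that the Throughput column is the sum across all clients and that 1 GB = 1000 MB. The function name `per_client_mb_s` is hypothetical.

```python
# (client nodes, aggregate throughput in GB/s) copied from the results table.
RESULTS = [
    (100, 1.15), (200, 1.74), (300, 5.23), (500, 6.86),
    (750, 10.1), (1000, 12.1), (3000, 23.8),
]


def per_client_mb_s(nodes, aggregate_gb_s):
    """Average throughput per client in MB/s, taking 1 GB = 1000 MB."""
    return aggregate_gb_s * 1000 / nodes


for nodes, gb_s in RESULTS:
    print(f"{nodes:>5} nodes: {per_client_mb_s(nodes, gb_s):6.2f} MB/s per client")
```

Under these assumptions, each client averages roughly 11.5 MB/s at 100 nodes, about 12.1 MB/s at 1,000 nodes, and about 7.9 MB/s at 3,000 nodes. These are derived averages, not measured per-client values.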

Conclusion

This test scenario was designed based on real-world rendering environments but with significantly higher load and performance requirements. It aimed to validate JuiceFS' data processing capabilities in rendering workloads. The test covered the overall capabilities of JuiceFS, including the client for Windows, metadata server software, and distributed cache cluster software.

The test results showed that JuiceFS was able to support at least 3,000 Windows devices simultaneously performing pre-rendering storage-related read tasks, with an average completion time of 22 minutes and 22 seconds, and a maximum task completion time of less than 34 minutes. This demonstrates that JuiceFS can effectively meet the storage needs of real-world applications in most rendering scenarios.

If you have any questions about this article, feel free to join the JuiceFS discussions on GitHub and our community on Slack.