As we reflect on the journey of the past year, we're thrilled to share that 2025 ushered in the ninth year for JuiceFS Enterprise Edition and a significant fifth anniversary for our open-source Community Edition. Our focus remained unchanged: building a high-performance, easy-to-use file system.

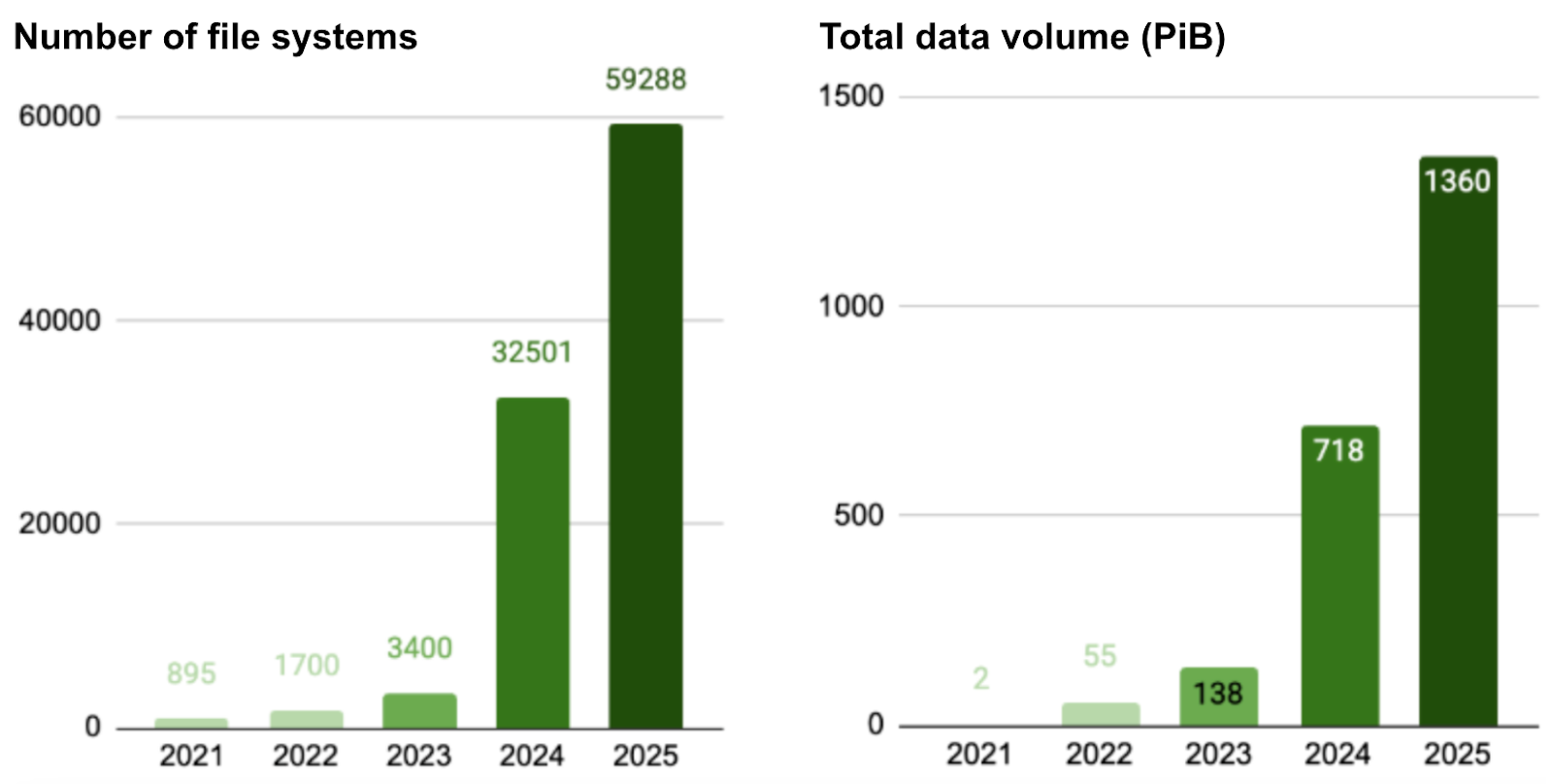

All key metrics continued the growth momentum from the previous year. The data volume managed by the Community Edition grew by 89%, exceeding 1.3 EiB.

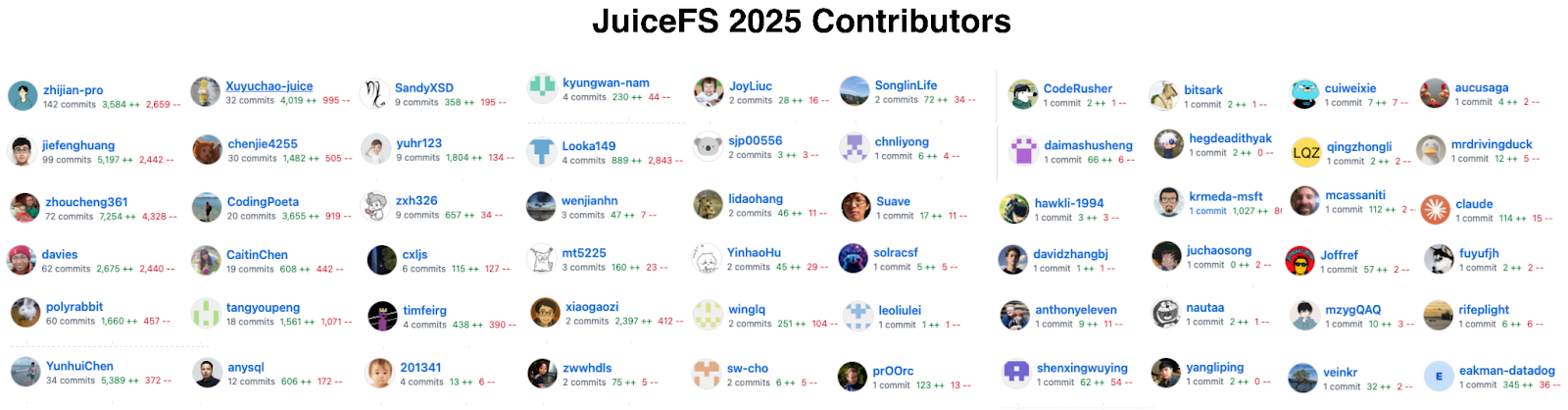

In 2025, the JuiceFS Community Edition continued to prioritize versatility, especially in supporting diverse AI workloads. We released a Python SDK, improved Windows client usability, and strengthened integration with cloud-native ecosystems. Metadata engines such as SQL databases and TiKV also received targeted optimizations. Together with the community, we kept iterating throughout the year: 60 contributors, 305 new issues, and 601 merged pull requests (PRs).

During the development of the Enterprise Edition, our greatest challenge this year was managing hyperscale data. As AI technologies like autonomous driving become integrated into daily life, data volume growth is unprecedented. Managing hundreds of billions of files introduces exponentially increasing complexity in metadata management and data consistency. To tackle these challenges, the Enterprise Edition underwent comprehensive upgrades in core features like metadata partitioning and network performance.

JuiceFS Enterprise Edition 5.2, released in the first half of the year, already supports a single volume at the hundred-billion-file scale. The upcoming 5.3 version will push this limit to 500 billion files. With JuiceFS' performance and stability providing solid assurance, users no longer need to worry about data scale.

Let’s take a closer look at JuiceFS’ achievements in 2025.

Community Edition: Python SDK support and Windows client improvements

Since its open-source release, JuiceFS has been extensively validated in enterprise production environments, with its core features becoming increasingly stable. We released 9 versions throughout the year, with version 1.3 being the fourth major release since its 2021 open-source debut and designated as a long-term support (LTS) version. Key optimizations in this version include:

- Python SDK support, enhancing flexibility and performance in AI and data science scenarios.

- Windows client optimizations, improving tool support and system service mounting capabilities.

- Backup mechanism enhancement, enabling minute-level backups for 100 million files.

- Integration with Apache Ranger, allowing JuiceFS to support fine-grained permission management in big data scenarios.

- Performance improvements for SQL and TiKV metadata engines, delivering more efficient performance in hyperscale scenarios.

In the second half of the year, we began preparing for version 1.4. Planned new features include:

- Support for user and group quotas

- Redis client caching

- Least recently used (LRU) cache support

- SMB/CIFS support

- Hadoop Kerberos support

- S3 Gateway optimizations

- Resumable sync tool transfers

- Support for commercial data encryption algorithms

- Readahead strategy optimization

- Batch deletion improvements

- Related tool optimizations

All of these aim to further boost system performance and stability.

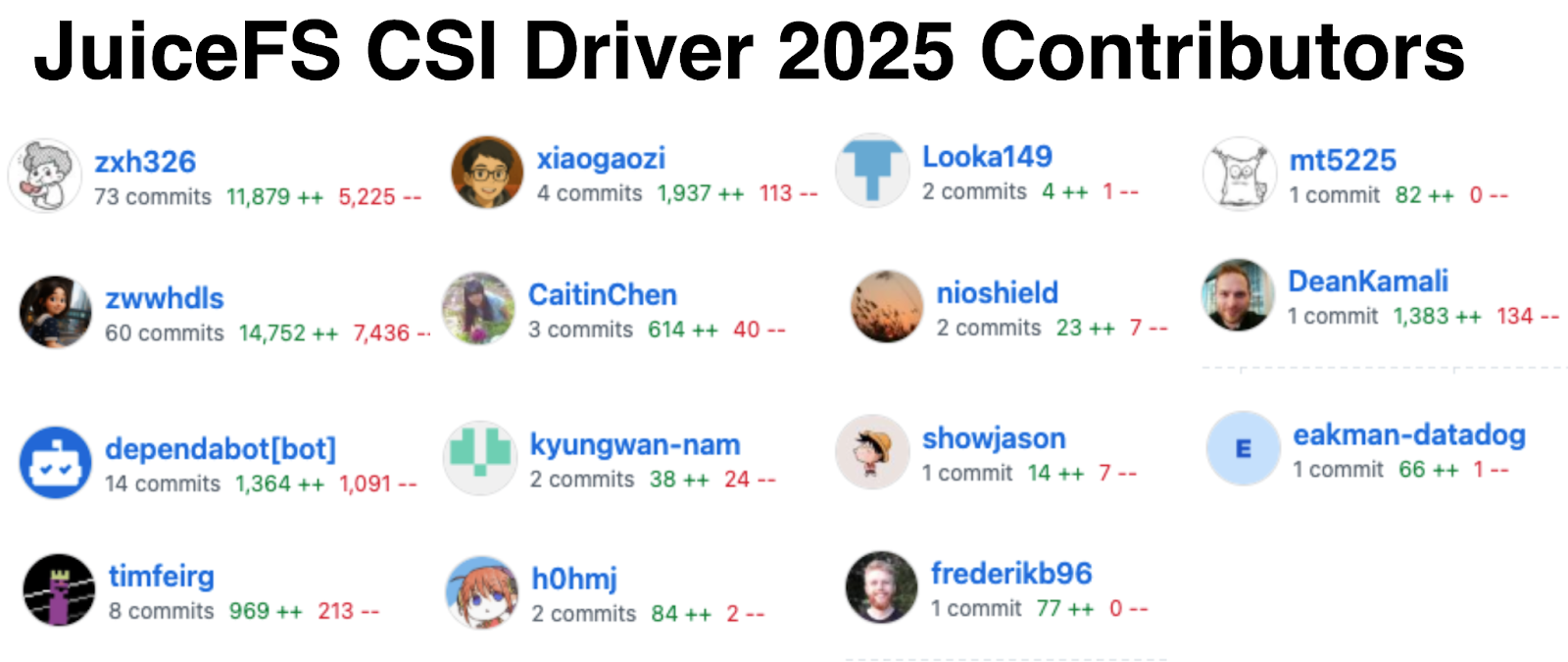

JuiceFS CSI Driver released 18 versions over the past year, continuously optimizing JuiceFS' storage efficiency and stability in environments like Kubernetes. New features include volume path status detection, shared Mount Pods for the same file system, support for native Kubernetes Sidecar, and Dashboard cache group management. In addition, we made performance and reliability optimizations, not only improving stability but also enhancing compatibility with multi-pod configurations and containerized applications.

JuiceFS Operator added a scheduled cache warm-up feature to improve performance for application data access. It now supports cache groups deployed by replica, achieving cache high availability. It also introduced a Sync feature for efficient data synchronization within Kubernetes environments, ensuring consistency.

Enterprise Edition: Single-volume hundred-billion-file scale with robust performance and stability

In the first half of 2025, JuiceFS Enterprise Edition 5.2 was released, breaking through the hundred-billion-file scale for a single file system and significantly enhancing stability for hyperscale clusters and the network performance of distributed caching. To achieve this, we invested heavily in optimizing performance for massive datasets and high-concurrency access. This version has been validated in production environments across several enterprises, maintaining metadata latency at the 1-millisecond level even at the single-volume hundred-billion-file scale.

At the same time, we optimized distributed cache network performance, greatly reducing CPU overhead in TCP networks while improving network bandwidth utilization. In a test environment with 100 GCP 100Gbps nodes, aggregate read bandwidth reached 1.2 TB/s, close to full utilization of TCP/IP network bandwidth.
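As a rough sanity check on "close to full utilization", the quoted figures can be compared against the theoretical line rate. The sketch below uses only the numbers stated above (100 nodes, 100 Gbps per node, 1.2 TB/s measured) and assumes decimal units (1 TB = 1,000 GB); the function names are illustrative and not part of any JuiceFS tooling.

```python
# Back-of-the-envelope check of the bandwidth figure quoted above.
NODES = 100                # nodes in the test environment (from the text)
LINK_GBPS = 100            # per-node link speed in gigabits per second (from the text)
MEASURED_TB_PER_S = 1.2    # aggregate read bandwidth in terabytes per second (from the text)


def line_rate_tb_per_s(nodes: int, link_gbps: float) -> float:
    """Theoretical aggregate line rate in TB/s (decimal units assumed)."""
    total_gbits = nodes * link_gbps   # gigabits per second across all nodes
    total_gbytes = total_gbits / 8    # gigabytes per second
    return total_gbytes / 1000        # terabytes per second


def utilization(measured_tb_per_s: float, line_rate: float) -> float:
    """Fraction of the theoretical line rate that was actually achieved."""
    return measured_tb_per_s / line_rate


if __name__ == "__main__":
    peak = line_rate_tb_per_s(NODES, LINK_GBPS)
    print(f"theoretical line rate: {peak:.2f} TB/s")
    print(f"utilization: {utilization(MEASURED_TB_PER_S, peak):.0%}")
```

Under these assumptions the raw line rate is 1.25 TB/s, so 1.2 TB/s corresponds to roughly 96% of it, before accounting for protocol overhead.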

Furthermore, the Python SDK achieved fsspec compatibility and on-demand import of object storage files, enabling easier access to existing data in object storage. This version also resolves read amplification issues in specific scenarios and enhances global QoS capabilities, increasing system flexibility and performance.

The multi-zone architecture is a key technology enabling JuiceFS to handle hundreds of billions of files, ensuring high scalability and high-concurrency processing capabilities. In the second half of the year, we focused on developing version 5.3, which delivered comprehensive optimizations to this architecture. The zone limit was increased from 256 to 1,024, enabling a single volume to support the storage and access demands of over 500 billion files.

This achievement involved a series of complex tasks:

- Systematically refining cross-zone link implementations and establishing a background self-check mechanism to improve cluster reliability and stability

- Developing hotspot monitoring and automatic migration tools for efficient hotspot handling

- Optimizing distributed cache management to reduce cache conflicts and improve concurrent performance
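The zone limits quoted earlier in this section (256 before, 1,024 in version 5.3) and the 500-billion-file target can be related with simple arithmetic. The sketch below assumes files are spread evenly across zones, which is a simplification made here for illustration; the text does not state a per-zone capacity, and the function names are hypothetical.

```python
# Relating the zone limits to the file-count targets, assuming an even spread.
OLD_ZONE_LIMIT = 256              # zone limit before version 5.3 (from the text)
NEW_ZONE_LIMIT = 1024             # zone limit in version 5.3 (from the text)
TARGET_FILES = 500_000_000_000    # single-volume target for version 5.3 (from the text)


def avg_files_per_zone(total_files: int, zones: int) -> float:
    """Average files per zone if the volume were evenly partitioned (assumption)."""
    return total_files / zones


def files_at_same_density(total_files: int, zones: int, other_zones: int) -> float:
    """File count another zone limit would hold at the same average density."""
    return avg_files_per_zone(total_files, zones) * other_zones


if __name__ == "__main__":
    density = avg_files_per_zone(TARGET_FILES, NEW_ZONE_LIMIT)
    old_scale = files_at_same_density(TARGET_FILES, NEW_ZONE_LIMIT, OLD_ZONE_LIMIT)
    print(f"average files per zone at 1,024 zones: {density:,.0f}")
    print(f"same density at 256 zones: {old_scale:,.0f}")
```

At that average density of about 488 million files per zone, 256 zones would hold about 125 billion files, which lines up with the hundred-billion-file scale described for version 5.2.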

To further enhance distributed network performance, this version introduced RDMA technology for the first time (currently experimental). Initial tests show it outperforms the TCP protocol in terms of stability and CPU usage. Version 5.3 is scheduled for release in January 2026. Stay tuned for more details.

Community growth: Rapid expansion, total data volume exceeding 1.3 EiB

JuiceFS now has 12.6k+ GitHub stars. Our downloads have surpassed 50,000, while JuiceFS CSI Driver downloads have exceeded 5 million. The Slack community has reached about 1,000 members.

The fifth year since the open-source release of the Community Edition marks another year of rapid growth. User-reported data shows continued upward trends across key JuiceFS metrics:

- File systems: 590k+, an 82% increase

- Active clients: 150k+, a 46% increase

- File count: 400+ billion, a 43% increase

- Total data volume: 1.3+ EiB, an 89% increase

This year, we made our mark at industry conferences by participating in events such as KubeCon + CloudNativeCon North America, Open Source Summit Japan, and SNIA Developer Conference (SDC).

To better support our users, we hosted five Office Hours sessions this year to introduce new features, address questions, and help users across various industries confidently deploy JuiceFS in production environments. Use cases span fields including autonomous driving, generative AI, AI infrastructure platforms, quantitative investing, and biopharmaceuticals. (View all case studies)

A special thanks to the users who shared their experiences this year—their practical insights have provided invaluable reference for the community:

- JuiceFS+MinIO: Ariste AI Achieved 3x Faster I/O and Cut Storage Costs by 40%+, by Yutang Gao at Ariste AI

- Zelos Tech Manages Hundreds of Millions of Files for Autonomous Driving with JuiceFS, by Junyu Deng at Zelos Tech

- Why Gaoding Technology Chose JuiceFS for AI Storage in a Multi-Cloud Architecture, by Jia Ke at Gaoding Technology

- StepFun Built an Efficient and Cost-Effective LLM Storage Platform with JuiceFS, by Changxin Miao at StepFun

- INTSIG Built Unified Storage Based on JuiceFS to Support Petabyte-Scale AI Training, by Yifan Tang at INTSIG

- vivo Migrated from GlusterFS to a Distributed File System Built on JuiceFS, by Xiangyang Yu at vivo

- NFS to JuiceFS: Building a Scalable Storage Platform for LLM Training & Inference, by Wei Sun at a leading research institution in China

- BioMap Cut AI Model Storage Costs by 90% Using JuiceFS, by Zedong Zheng at BioMap

- JuiceFS at Trip.com: Managing 10 PB of Data for Stable and Cost-Effective LLM Storage, by Songlin Wu at Trip.com

- How Lepton AI Cut Cloud Storage Costs by 98% for AI Workflows with JuiceFS, by Cong Ding at Lepton AI

- Tongcheng Travel Chose JuiceFS over CephFS to Manage Hundreds of Millions of Files, by Chuanhai Wei at Tongcheng Travel

We've shared a remarkable year together. JuiceFS has evolved from an emerging open source project into a trusted solution powering AI-driven businesses today. We extend our deepest gratitude to every one of you for your active participation and steadfast support—whether through answering questions, sharing real-world experiences, or contributing code to the project.

In the coming year, JuiceFS will continue to deliver a more efficient and seamless experience for your work.